Select one game of your interest and explain why it has good/bad game UI. Discuss how technology augments human abilities. Reflect upon the implications of the future of HCI.

When I think about the games that became the cornerstone of my understanding of what a game could be, The Last of Us is always the first to come to mind. Its UI was almost obnoxiously intuitive; not a single moment in the game pulled me out of the experience. So how did a game whose team only started focusing on the UI in the last eight months of a four-year development cycle end up with an interface so seamless that you barely notice it's there?

Breaking down the whole UI of The Last of Us is a heavy task, so I'll focus mostly on the HUD. The HUD felt like an extra limb that I'd spent my whole life learning to use: it stayed out of the way and never overcomplicated anything. Alexandria Neonakis, the Naughty Dog UI designer for The Last of Us, has said that "the main thing you generally hear people complain about with UI is not how it looks, but that there's too much cluttering the screen" (Neonakis, 2014). Taking that on board, she eliminated that clutter almost entirely. Another thing that works beautifully is that the positions of HUD elements correspond to the controller buttons that operate them; for example, the weapon selection area sits in the bottom right corner and is changed with the d-pad. Together, these design choices make for an intuitive, non-distracting interface in which the controller becomes simply an extension of your hands, merging you with the game you're playing.

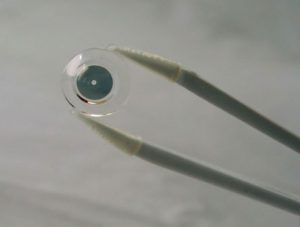

But video game controllers are just the tip of the iceberg when it comes to technology that augments human abilities. Innovega, a small start-up, has been working on contact lenses that work in conjunction with glasses to produce a heads-up display of its own. The technology places a screen on the inside of a pair of glasses. Unlike many VR set-ups, which require the screen to sit a certain distance from the wearer's face so that the eye can focus on it, Innovega's system adds contact lenses that, "outfitted with dual focal planes similar to bifocals, let wearers focus on the screen without its having to be set inches away from their eyes" (Lerman, 2017).

What makes this idea particularly innovative is that the company recognises a limitation of current human-augmenting technology, namely the need to hold a display at a distance from the eye, and works around it by moving part of the system onto the body itself. It seems to me that, as time goes on, more designers are realising that the key to great HCI is to make technology a further extension of the human body, rather than a new device whose nuances users must learn from scratch before they can properly experience it. If this is possible with contact lenses, what other possibilities could there be?

Reference List:

Neonakis, A. (2014) How We Made The Last of Us' Interface Work So Well. Kotaku.

Lerman, R. (2017) Startup Sees Contact Lenses as the New VR Screens. The Seattle Times.

Helpful links:

https://kotaku.com/how-we-made-the-last-of-uss-interface-work-so-well-1571841317

https://www.cnet.com/news/augmented-reality-contact-lenses-to-be-human-ready-at-ces/

http://www.govtech.com/products/Startup-Sees-Contact-Lenses-as-the-New-VR-Screens.html

https://www.theverge.com/2013/1/10/3863550/innovega-augmented-reality-glasses-contacts-hands-on