
ShareNice

July 20, 2011

by Christopher Gutteridge

Executive Summary: data.southampton now has little +Share links which let you share the current page via your favorite social network. ShareNice does not track the pages you visit.

We have added ShareNice links to data.southampton (see the +Share link near the top of each page). ShareNice is a tool developed by Mischa Tuffield, who studied at Southampton but now works for the firm Garlik.

We picked this service partly because it’s useful, sufficient and easy to add. There are several similar social sharing services, but this one is run by Mischa, who has made a positive reputation for himself with his concerns over the way tools like this can be used to track visitors. Most notable were his comments on the fact that the NHS could be exposing site visitors to having their page views tracked by Facebook. These comments resulted in an MP asking questions.

Open Linked Catering

July 12, 2011

by Christopher Gutteridge

I’m excited that we’re about to launch the new university catering website, with added linked data features! These features show the opening hours for points of service run by catering, including the halls, and there is also a product search which lets you search all products from the university (including those from other retailers).

“As a Caterer I am often quoting that ‘I bake bread, I don’t do IT!’ we like to keep it simple and this is exactly what Open data does for us. We can use formats and software we are used to and manage up to date real time information. This will ensure we are keeping customers up to date with information that they want. There is more to come as well and in Catering we have designed our whole web site and marketing strategy around the Open Data technology, watch this space Catering is catching up.”

– James Leeming

University of Southampton Retail Catering Manager

Visit our new catering site at: http://catering.southampton.ac.uk/

Data Catalogue Interoperability Meeting

June 23, 2011

by Christopher Gutteridge

I’ve just come back from a two-day meeting on making data catalogs interoperable.

There are some interesting things going on, and CKAN is by far the most established data catalog tool, but it frustrates me that they are basically re-implementing EPrints starting from a different goal. On the flip side, maybe parallel evolution is a natural phenomenon. Nonetheless, the CKAN community should pay more attention to the now-mature repository community.

Data Catalogs have a few quirks. One is that they are really not aimed at the general public. Only a small number of people can actually work with data and this should inform decisions.

The meeting had a notable split in methodologies, but not an acrimonious one. In one camp we have the URIs+RDF approach (which is now my comfort zone) and in the other GUID plus JSON. The consensus was that JSON & RDF are both useful for different approaches. Expressing lossless RDF via JSON just removes the benefits people get from using JSON (easy to parse & understand at a glance).

A Dataset by any other Name

A key issue is that dataset and dataset catalogue are very loaded terms. We agreed, for the purposes of interoperability, that a dataset record is something which describes a single set of data, not an aggregation. Each distribution of a dcat:dataset should give access to the whole of the dataset(ish). Specifically, this means that a dataset (lower-case d) which is described as the sum of several datasets is a slightly different catalog record and may be described as a list of simple dcat:datasets.

Roughly speaking, the model of an (abstract, interoperable) data-catalog is:

- Catalog

- Dataset (simple kind)

- Distributions (Endpoints, Download URLs, indirect links to the pages you can get the data from, or instructions on how to get the data)

- Collections

- Licenses

- Dataset (simple kind)

We agreed that DCAT was pretty close to what was needed, but with a few tweaks. The CKAN guys come from the Open Knowledge Foundation, so handling distributions of data which require other kinds of access, such as a password, a license agreement or even “show up to a room with a filing cabinet”, was outside their usual scope, but it will be important for research data catalogues.

We discussed ‘abuse’ of dcat:accessURL – it sometimes gets used very ambiguously when people don’t have better information. The plan is to add dcat:directURL which is the actual resource from which a serialisation or endpoint is available.

Services vs Apps: we agreed that services which give machine-friendly access to a dataset, such as SPARQL or an API, are distributions of a dataset, but applications giving humans access are not.

We agreed that, in addition to dc:identifier, dcat should support a globally unique ID (a string which can be a GUID or a URI or other) which can be used for de-duplication.

Provenance is an issue we skirted around but didn’t come up with a solid recommendation for. It’s important – we agreed that!
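To illustrate how a harvester might use such an ID, here is a minimal Python sketch. The `Record` fields and the example IDs are hypothetical; the meeting did not fix a record format, and keeping the first record seen is just one possible policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """One harvested dataset record (field names are illustrative only)."""
    global_id: str  # a GUID, a URI or any other globally unique string
    catalog: str    # the catalog this record was harvested from
    title: str


def deduplicate(records):
    """Keep the first record seen for each globally unique ID."""
    seen = {}
    for record in records:
        # setdefault only stores the record if this ID has not been seen yet
        seen.setdefault(record.global_id, record)
    return list(seen.values())


harvested = [
    Record("urn:uuid:1111", "catalog-a", "Bus stops"),
    Record("urn:uuid:2222", "catalog-a", "Buildings"),
    Record("urn:uuid:1111", "catalog-b", "Bus stops (mirror)"),
]
unique = deduplicate(harvested)
```

Here the mirrored copy from `catalog-b` is dropped because it shares its ID with a record already harvested from `catalog-a`.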

Very Simple Protocol

At one point we nearly reinvented OAI-PMH, which would be rather pointless. The final draft of the day defines the method to provide interoperable data and the information to pass, but deliberately not the exact encoding, as some people want Turtle and some JSON. It should be easy to map from Turtle to JSON, but in a lossy way.
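As a rough idea of what “lossy” could mean here, the Python sketch below flattens triples into plain dictionaries. It is only an illustration: the triples are hand-written tuples rather than parsed Turtle, the example.org URI is made up, and the choice of what to throw away (namespaces and literal datatypes) is an assumption, not something the meeting agreed.

```python
def local_name(uri):
    """Return the part of a URI after the last '#' or '/'."""
    return uri.rstrip("/").replace("#", "/").rsplit("/", 1)[-1]


def triples_to_json(triples):
    """Flatten (subject, predicate, (value, datatype)) triples into dicts.

    Lossy on purpose: predicate namespaces and literal datatypes are
    discarded, and a repeated predicate overwrites the earlier value.
    """
    records = {}
    for subject, predicate, (value, _datatype) in triples:
        records.setdefault(subject, {})[local_name(predicate)] = value
    return records


DCT = "http://purl.org/dc/terms/"
XSD = "http://www.w3.org/2001/XMLSchema#"
triples = [
    ("http://example.org/dataset/1", DCT + "title", ("Bus stops", XSD + "string")),
    ("http://example.org/dataset/1", DCT + "modified", ("2011-06-23", XSD + "date")),
]
simple = triples_to_json(triples)
```

The result is easy to read at a glance, but you can no longer tell which vocabulary `title` came from or that `modified` was typed as a date, which is exactly the trade-off described above.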

A nice design is that it takes a single URL with an optional parameter which the data-catalog can ignore. In other words, the degenerate case is you create the entire response as a catalog.ttl file and put it in a directory! The possible endpoint formats are initially .json, .ttl and (my ideal right now) maybe .ttl.gz

The request returns a description of the catalog and all records. It can be limited to catalog records changed since a date using ?from=DATE but obviously if you do that on a flat file you’ll still get the whole thing.

It can also optionally, for huge sites, include a continuation URL to get the next page of records.

The information returned for each catalog record is: the URL to get the metadata for the record (be it license, collection or dataset) in .ttl or .json depending on the endpoint format; the last modified time for the catalog record (not the dataset contents); the globally unique ID (or IDs…) of the dataset it describes; and an indication of whether the record has been removed from the catalog, possibly with the removal time.
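The catalog side of this could be sketched in Python roughly as follows. Everything concrete here is an assumption for illustration: the JSON field names, the example.org URLs and the `offset` continuation parameter are invented, since the draft deliberately leaves the encoding open; only `?from=DATE` comes from the discussion.

```python
import json
from datetime import date

BASE = "http://example.org/catalog.json"  # hypothetical endpoint

# Hypothetical catalog records; the field names are illustrative only.
RECORDS = [
    {"url": "http://example.org/record/1.json", "modified": "2011-05-01",
     "ids": ["urn:uuid:1111"], "removed": False},
    {"url": "http://example.org/record/2.json", "modified": "2011-06-20",
     "ids": ["urn:uuid:2222"], "removed": True},
]


def catalog_response(records, from_date=None, page_size=None, offset=0):
    """Build one page of the listing a harvester would receive.

    from_date: optional ISO date; only records modified on or after it
               are listed (a flat file would simply ignore this).
    page_size: optional limit; if more records remain, a continuation
               URL is included under "next".
    """
    if from_date is not None:
        cutoff = date.fromisoformat(from_date)
        records = [r for r in records
                   if date.fromisoformat(r["modified"]) >= cutoff]
    end = len(records) if page_size is None else offset + page_size
    response = {"catalog": BASE, "records": records[offset:end]}
    if end < len(records):
        next_url = f"{BASE}?offset={end}"
        if from_date is not None:
            next_url += f"&from={from_date}"
        response["next"] = next_url
    return response


print(json.dumps(catalog_response(RECORDS, from_date="2011-06-01"), indent=2))
```

Calling `catalog_response(RECORDS)` with no parameters gives the degenerate case: the whole catalog in one response, which is what a static file would serve.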

Harvesters should obey directives from robots.txt

All in all I’m pleased with where this is going. It means you can easily implement this with a fill-in-the-blanks approach for smaller catalogs. A validator will be essential, of course, but it’ll be much less painful to implement than OAI-PMH (but less versatile).

csv2rdf4lod

I learned some interesting stuff from John Erickson (from Jim Hendler’s lot). They are following very similar patterns to what I’ve been doing with Grinder (CSV –grinder–> XML –XSLT–> RDF/XML –> Triples )

One idea I’m going to nick is that they capture the event of downloading data from URLs as part of the provenance they store.
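A minimal Python sketch of what recording a download event might look like is below. This is not how csv2rdf4lod does it; the function name and the fields (source URL, fetch time, checksum, size) are assumptions chosen for illustration, and the content is passed in as bytes so the example does not touch the network.

```python
import hashlib
from datetime import datetime, timezone


def download_event(source_url, content, fetched_at=None):
    """Describe one download as a provenance record (illustrative fields)."""
    fetched_at = fetched_at or datetime.now(timezone.utc)
    return {
        "source": source_url,
        "fetched_at": fetched_at.isoformat(),
        # a checksum lets you tell later whether the source has changed
        "sha256": hashlib.sha256(content).hexdigest(),
        "bytes": len(content),
    }


event = download_event(
    "http://example.org/data.csv",
    b"a,b\n1,2\n",
    fetched_at=datetime(2011, 6, 23, tzinfo=timezone.utc),
)
```

Storing a record like this next to the converted data means each set of triples can be traced back to the exact file it was generated from.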

One Catalog to Rule them All

The final part of the discussion was about a catalog of all the world’s data catalogues. This is a tool aimed at a smaller group than even data catalogues, but it could be key in decision making. I suggested the people working on it have a look at ROAR, the Registry of Open Access Repositories, which is a catalog of 2200+ repositories. It has been redesigned from the first attempt and captures useful information for understanding the community, like software used, activity of each repository (update frequency), country, purpose etc. Much the same will be useful to the data-cat-cat.

Something like http://data-ac-uk.ecs.soton.ac.uk/ (maybe becoming http://data.ac.uk at some point) would be one of the things which would feed this monster.

Conclusion

All in all a great trip, except for the flight back, where the pilot wasn’t sure if the landing flaps were working, so we circled for about an hour and at one point he came out with a torch to have a look at the wings! All was fine and the poor ambulance drivers and firemen had a wasted trip to the airport. Still, better to have them there and not need them!

Jonathan Gray has transferred the notes from the meeting to a wiki.

Points of Service

June 1, 2011

by Christopher Gutteridge

I realised that there wasn’t a big list of all the points of service in our database, so now there is.

http://data.southampton.ac.uk/points-of-service.html

This kind of information may be very useful to freshers!

[April 1st Gag] PDF selected as Interchange Format

April 1, 2011

by Christopher Gutteridge

The following article is our prank for April 1st.

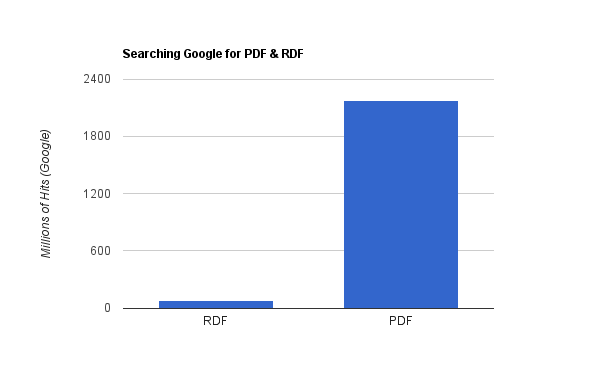

Just to be clear, PDF is a dreadful format to exchange data in. The prank was inspired, in part, by The Register website running the following picture and quote. Yes, I did say that, but I was talking about research and data communication.

It was fun working out how to make our site output PDF versions of the data, and we’ll leave those as available, but no longer the default. Also, I’ve now linked in the “.svg” format which is basically the same as the PDF.

Hopefully this gave a few people a chuckle.

*** *** ***

We have had many complaints that RDF is complicated, unsupported and makes it difficult to control how people will reuse your data.

With this in mind, we have taken a big decision: PDF (Portable Document Format) has been selected as our preferred format for exchanging data on the data.southampton.ac.uk site.

Many of the data.southampton team felt we should listen to the pro-PDF comments on the forum for the recent Register Article about Open Data in Southampton.

Henceforth, the preferred method for both importing and exporting data from the site will be PDF. We will continue to provide other formats such as CSV & XML for the time being, but with a clear goal of removing these options as soon as is practical.

From May 1st onward we will only accept and export data in PDF and HTML formats. This allows us much more control and flexibility over how our data is presented. Data providers will be able to supply the Southampton OpenData team with data via PDF documents, or as printouts that we can scan and convert to PDF, and we will know exactly how to deal with it. To make things even easier, people will even be able to use the networked scanners anywhere on campus to directly upload data. Data providers at remote sites will be able to fax their data in.

Extending 4store

For now, we will be continuing to use 4store as our database server, but we have significantly improved on the default interface by adding a “PDF” output mode which users will find familiar.

Examples:

- PDF query for a list of University Buildings

- PDF query for a list of Programmes taught at the University

Our extension will be made available, on request, under an open source license.

PDF Descriptions of Resources

Many of the resources in the site will now be available to download as PDF in addition to HTML, just by changing “.html” to “.pdf”. Look out for the “Get the data!” box on many pages which will offer a link to the PDF format.

- Module described in PDF

- Where to buy booze (popular with some students!)

Real-time PDF data!

The most valuable data of all is accurate and up to date, and we are now able to do this in a way you’ve never seen before! We’ve already created an HTML page for every bus-stop in the city, but that’s only in HTML format, which is well known to be inferior to PDF.

Imagine you’re at a bus-stop and want to know when the next bus is. Now all you need to do is download the following link into your phone and view it in the mobile PDF viewer of your choice, and hey-presto! – realtime bus data direct to you on your handset!

Positive Reactions

So far all the feedback we have had has been massively positive. One user of data.southampton said

“I’m so glad they have done this, and it’s easy to switch too, all I needed to do was change a “R” to a “P” – simples!”

Professor Nigel Shadbolt and Professor Sir Tim Berners-Lee were unavailable to comment as they are currently at the WWW2011 Conference, but we are confident they will have a very strong reaction when they hear about the decision.

New Formats

March 25, 2011

by Christopher Gutteridge

New ways to enjoy our data.

We’ve added some links to the “Get the Data” box which let you see what formats are available. Some pages let you download RDF, others you can get back as tabular data, suitable for loading into Excel, amongst other things. Roughly speaking, pages about things have RDF versions, pages about lists of things (places, buildings etc) have a tabular download available.

eg.

Improvements to the Embeddable Map Tool

March 13, 2011

by Christopher Gutteridge

I’ve added an option for ‘terrain’ instead of map/satellite. This only works when a bit more zoomed out than the other views.

More importantly, I’ve added numbered placemarkers. This only works for buildings with a simple one or two digit number. If it ever becomes massively popular we’ll build a custom placemark generator.

View an example: Full Screen

More RDF

March 11, 2011

by Christopher Gutteridge

I’ve improved the back-end tools which provide RDF when you request a .rdf or .ttl file. By default the system just gives any facts which have the current resource as the start of the fact. This sort of sucks, as when looking at a building it’ll tell you BUILDING-X is within SITE-Y and BUILDING-X is called “The building of advanced science stuff”. What it won’t do is give any facts that go backwards, eg. if I know ROOM-Z is within BUILDING-X, it won’t mention that by default.

So I’ve made a way to make it relatively easy to add this information. I can also tell it to follow several hops to find all the useful information. The art is going to be, for each class of item in our system, working out the balance between utility and brevity. The very simple rule of thumb is to get all the information you need to display an HTML page about that thing.
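The idea can be sketched in a few lines of Python. This is not the site’s actual back-end code: the triples are plain tuples, the `within` and `label` predicates and the “Seminar room” label are made up, and `describe` with its `hops` and `backwards` parameters is a hypothetical helper.

```python
from collections import deque

TRIPLES = [
    ("BUILDING-X", "within", "SITE-Y"),
    ("BUILDING-X", "label", "The building of advanced science stuff"),
    ("ROOM-Z", "within", "BUILDING-X"),
    ("ROOM-Z", "label", "Seminar room"),
]


def describe(resource, triples, hops=1, backwards=True):
    """Collect facts about a resource, optionally following links.

    hops=1 with backwards=False mimics the old default: only facts
    which start at the resource itself.
    """
    found = []
    seen = {resource}
    queue = deque([(resource, 1)])
    while queue:
        node, depth = queue.popleft()
        for triple in triples:
            subject, _predicate, obj = triple
            if subject == node:
                neighbour = obj
            elif backwards and obj == node:
                neighbour = subject
            else:
                continue
            if triple not in found:
                found.append(triple)
            # Literals are queued like any other node; harmless in this toy data.
            if depth < hops and neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, depth + 1))
    return found


forward_only = describe("BUILDING-X", TRIPLES, backwards=False)
with_backlinks = describe("BUILDING-X", TRIPLES)
two_hops = describe("BUILDING-X", TRIPLES, hops=2)
```

With `backwards=False` the room is missing; the default picks up “ROOM-Z is within BUILDING-X”; and `hops=2` also brings in the room’s label, which is the sort of thing an HTML page about the building would want to show.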

And that example leads to another point, I really need to give an example of every type of data item under the hood. This site is all iceberg-like right now. Only I know for sure what lurks in the SPARQL… I’ll get to it, I promise.

Friends, Romans, Countrymen…

…send me your data. But maybe don’t hurry, as I’ve got a back-log already! Yesterday I got an email about some data we have a legal obligation to publish… am I the right person? I guess I am! But it’s not my only responsibility and I had to put all my other work on the back-burner to get this site up, so things will now move slower but always forward. Maybe a little sideways.

What I won’t accept are things which we have no hope in hell of keeping up to date, so we only really want data which is already someone’s job to maintain. I’ve made a couple of exceptions, most notably that the building position and footprint data is created by volunteers; however, this data moves very slowly. We’ll learn what works as we go, this has never been done before!

Research data is a world of complicated and awesome all by itself. We’ll never add it to this site. It will want a very different form of collection and curation. If there’s research data that you want to publish right now, and it’s not crazy big, I recommend you put it in eprints.soton.ac.uk – this will give it metadata, a license and a permanent home on the university web.

RDF Bus Stop Data

March 10, 2011

by Christopher Gutteridge

The individual Bus Stop data is now available as RDF…

http://data.southampton.ac.uk/bus-stop/SNA19777.rdf

That took way longer than I expected to set up!

A quick celebration and back to work

March 8, 2011

by Christopher Gutteridge

The site has been open to the public for 12 hours and in that time has logged 125411 hits. Due to the nature of the design, asking for an HTML page can generate several secondary requests to the site for data from the server itself, so that number should probably be divided by 5 or 10. Yes, we use our own public data sources to build the HTML pages, not a ‘back channel’ to the data. Keeps us honest, and able to catch issues quickly.

We’ve had hits from about 1300 distinct IP addresses since noon.

At 5:30 we had a meet up of some of those who’ve been involved in some way. We had some rather nice champagne, kindly given to us to congratulate us by Chris Phillips at IBM. In true engineering style we drank it from coffee mugs, which is almost exactly the same way we celebrated the release of EPrints 2.0 almost a decade ago.