There are a few phases in the life cycle of a website. Some we handle better than others.

A website can go offline or be erased for good at any point. In my lifecycle, websites often skip forward to later phases but very rarely step back to earlier ones.

Phases 3 & 5 rarely happen, but I think they are things we need to encourage in the future. Sorry about the rather morbid titles; I’m open to more cheerful alternatives.

1. Go live

2. Actively maintained

3. Embalmed for preservation

4. Fossilisation

5. Placed in a repository

6. Interred

7. Cremated

Phase 1: Go live

Before it’s visible to the public, most websites spend a few days, weeks or months in an inchoate state. Some websites never actually go live as the need for them goes away, or they turn out to require more work than anticipated.


Phase 2: Actively maintained

This is the time when the owners of the site care about it and keep it updated. The end of this phase can be a clear-cut date, or interest can simply wane.


For research project websites, the funding ends on a set date, and few people want to put much effort in after that. For conferences and other events, the actively maintained phase ends at the end of the event or soon after.

Many sites should be formally retired at the end of this phase, but people keep them around, just because.

Phase 3: Embalming

Why would a website need to exist past the period in which it’s actively maintained? Often it shouldn’t, and the lifecycle should proceed rapidly to the “interred” and “cremated” phases, but there are plenty of good reasons to keep some sites around:

- Many research funders require that a project’s website stick around for 3, 5 or 10 years after the end of the project.

- Some sites provide a public record that an event or project happened, what the outcomes were and who was involved. For major events and projects, this may be the source-of-truth for Wikipedia, historians, and future researchers.

- Extending the previous point, discovering past work can lead researchers to new research contacts, collaborations and other opportunities.

- Finally, and the most important one: As a university, our job is to increase and spread knowledge. The cream of our websites do this in large and small ways. A simple example is the “Music in the Second Empire Theatre” website I just worked on. You can read my blog post about it. The information on this website could be valuable to music researchers centuries from now.

Appropriate steps for the preservation of a website can prevent it going rotten later on.

Something I’ve given a lot of thought to lately is how to reduce the long-term support costs of interactive research outputs. Right now we’re having an incident every two weeks or so where some ancient VM goes to 100% CPU. These are hard to resolve because the VM is the research output of someone who has left or retired: it’s still of value, but maybe not worth the cost if it takes lots of work to keep up and running.

Old systems like that are also a bit of a horror story we tell young information security specialists. They can also be a, er, challenge to audit for GDPR and to secure against hackers.

Some sites and services should be preserved indefinitely, but to do that they need to have no back-end dynamic code and be easy to shift to a new URL, as really long-term preservation will probably mean moving into some kind of repository.

When a site is at the end of its “active” life we need the site owner to decide if it’s going to be shut off or preserved. If it’s to be preserved then we should expect the site owner to do some work to prepare it. This is where we really drop the ball: we need to make this chore understood and accepted as the price of having your website preserved beyond its “actively maintained” phase.

For conferences, this means removing irrelevant information and removing the future tense where it looks silly.

For research projects, this means removing any private areas (not an issue for more recent projects, which tend to use services for sharing files and conversation rather than .htaccess-protected directories and wikis). Research projects also need to remove any placeholder pages for planned information which will never be created, and link to outcomes and publications.

This step is a big challenge as it’s boring and unrewarding for the people who need to do it. Often the end of the project is either a race to hit deliverables or a time when it’s hard to care as you’re about to lose your job. After a big event like a conference, it can be hard to find the energy to tidy up the website for posterity.

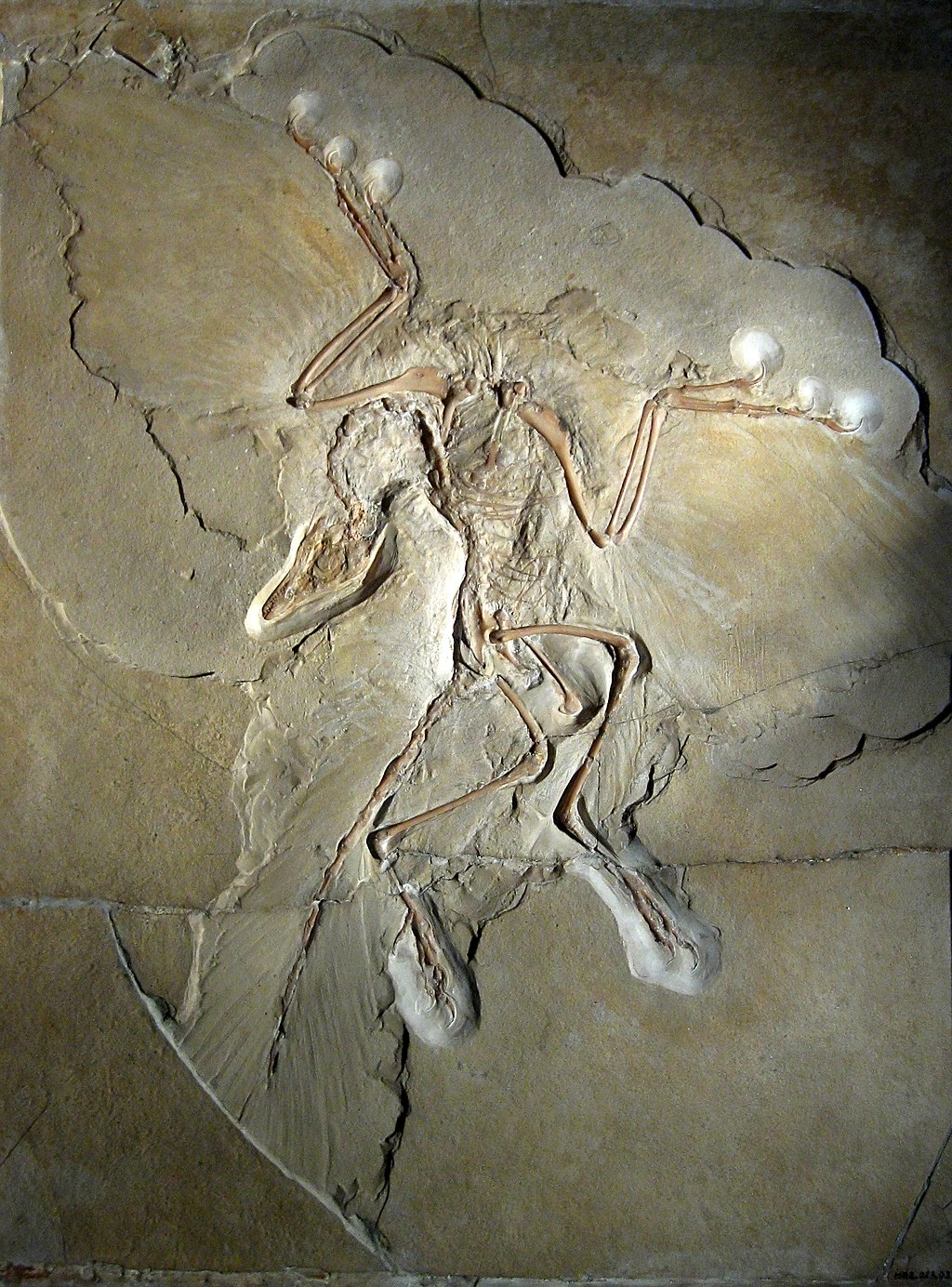

Phase 4: Fossilisation

For dynamic websites and blogs built with things like Node.js, .NET or PHP, this means turning the site into static files. Static .html files are at virtually no risk of being exploited by hackers, and at much less risk of breaking when a server has to be upgraded to a new version of PHP (or whatever back end you use), which makes long-term support much cheaper.

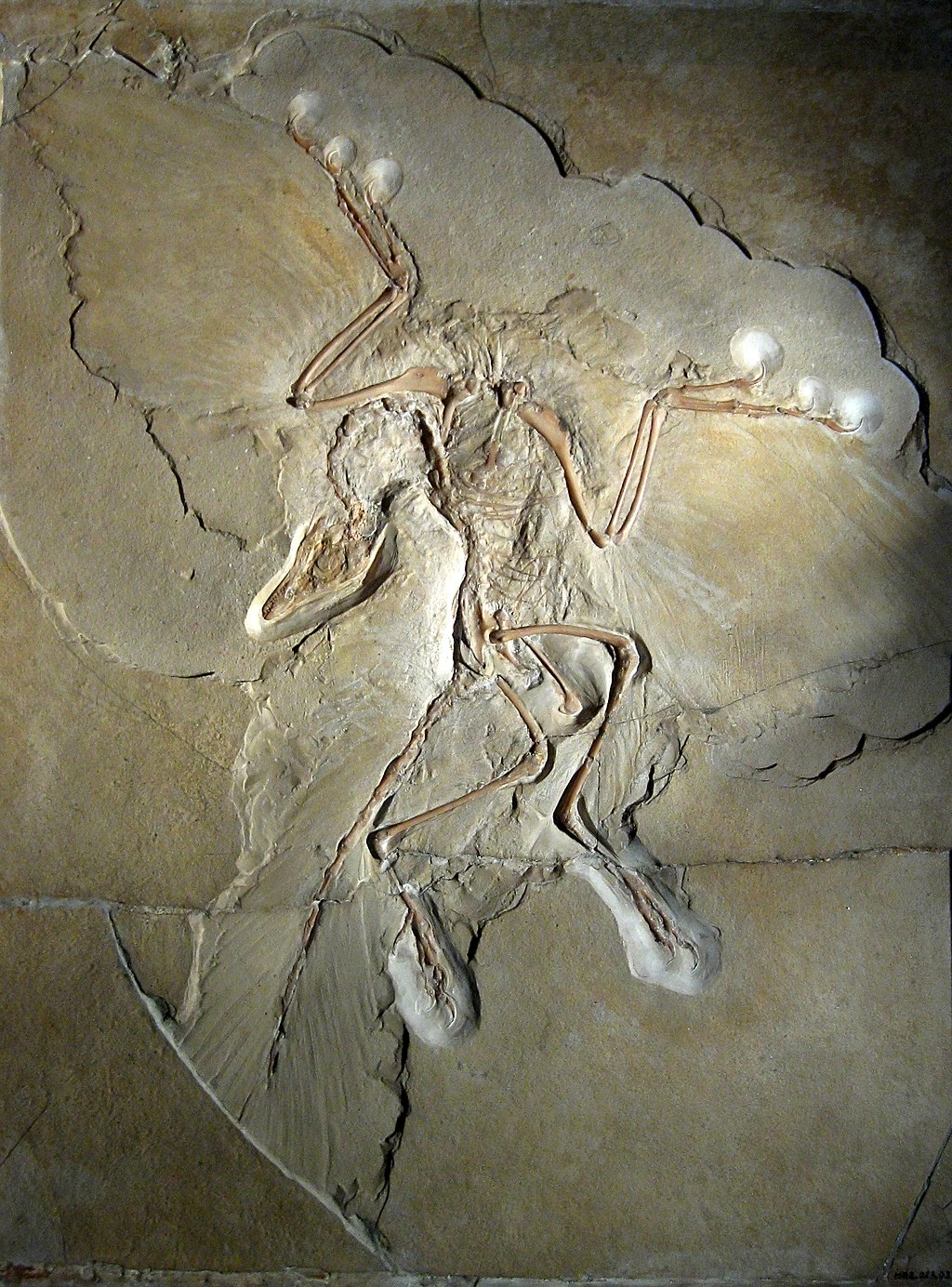

I like to think of this process as fossilisation. The new site is the same “shape” as the site you copied, but it’s lifeless and rock solid and should last a very long time.


The tool “wget” (as seen in The Social Network) is great for turning websites into fossilised versions. It can even rewrite the links to pages, images, JavaScript etc. to be relative links so they work at a new web location, or even on a local filesystem. The one thing it can’t do is edit the filenames inside JavaScript code, so really fancy stuff may require some manual intervention after the site is crawled.
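As a rough illustration of the kind of crawl described above, here is a minimal Python sketch that assembles a wget command line. The helper name `build_wget_command`, the example URL and the particular selection of flags are my assumptions for illustration, not a record of the command actually used; the flags themselves are standard GNU wget options.

```python
from typing import List


def build_wget_command(url: str) -> List[str]:
    """Assemble a wget command that mirrors `url` into static files.

    Hypothetical helper: the flag selection is one reasonable choice,
    not the only way to fossilise a site.
    """
    return [
        "wget",
        "--mirror",            # recurse through the whole site, with timestamping
        "--convert-links",     # rewrite links so they work at a new location
        "--adjust-extension",  # add .html to HTML pages saved without that suffix
        "--page-requisites",   # also fetch images, CSS and JavaScript
        "--no-parent",         # never climb above the starting directory
        url,
    ]


if __name__ == "__main__":
    # example.org is a placeholder, not a real project site.
    command = build_wget_command("https://example.org/conference2012/")
    print(" ".join(command))
    # To actually run the crawl (needs wget installed), pass the list to
    # subprocess.run(command, check=True).
```

Building the command as a list rather than a single string avoids shell-quoting problems if a URL contains odd characters.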

A fossilised copy does not record the MIME type of each file, so the new web server will guess the type from the filename. This can be a bother if you had something odd going on, like PDF files that didn’t end in “.pdf”. A more long-term solution is to save the website as a single huge WARC file. I’ll explain more about that in the repository phase, but the important thing to know here is that it stores the retrieved MIME type with each file, and wget can generate such a file.
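To make the MIME-type problem concrete, here is a small Python sketch that looks through a fossilised copy for files that contain PDF data but whose names would not lead a server to guess `application/pdf`. The function name `find_disguised_pdfs` is hypothetical, and Python’s `mimetypes` module only stands in for whatever filename-based guessing your web server does, so treat the result as a list of things to check by hand.

```python
import mimetypes
from pathlib import Path
from typing import List

PDF_MAGIC = b"%PDF-"  # the marker a PDF file normally starts with


def find_disguised_pdfs(root: Path) -> List[Path]:
    """Return files under `root` that start like a PDF but whose
    filename would not be guessed as application/pdf."""
    suspects = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        # Guess the type from the name alone, as a static web server would.
        guessed_type, _encoding = mimetypes.guess_type(path.name)
        with path.open("rb") as handle:
            starts_like_pdf = handle.read(len(PDF_MAGIC)) == PDF_MAGIC
        if starts_like_pdf and guessed_type != "application/pdf":
            suspects.append(path)
    return suspects
```

Any file this reports could be renamed, or given an explicit content type in the server configuration. If you go the WARC route instead, wget’s `--warc-file` option writes the archive during the crawl.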

One tip, from embarrassing experience: before creating such a “fossilised” site, make sure that hackers haven’t already done anything to the site! I was responsible for a website for a major conference, which had a very naive “upload your slides” PHP function. Many years after I turned it all to static files, we discovered some cheeky person had uploaded some spam documents amidst the valid content! So a check for stuff like that is recommended, especially for old wikis.
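One cheap first pass for that kind of check, sketched in Python, is to list every file that was modified after the date the site stopped being actively maintained. The function name `files_modified_after` and the cutoff date are assumptions for illustration. Modification times can be reset by copying files around, or forged by an attacker, so this only narrows down what to review by hand.

```python
from datetime import datetime
from pathlib import Path
from typing import List


def files_modified_after(root: Path, cutoff: datetime) -> List[Path]:
    """List files under `root` whose modification time is later than `cutoff`."""
    cutoff_timestamp = cutoff.timestamp()
    late_files = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.stat().st_mtime > cutoff_timestamp:
            late_files.append(path)
    return late_files
```

For a conference site, calling it with the path to the uploads directory and a cutoff a few weeks after the event would show anything that arrived once nobody was watching.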

For sites at this stage I have started using apache to inject a <script> tag which

Phase 5: Placed in a repository

The very long-term preservation of things isn’t really a job for the IT or Comms department. It is a job for librarians and archivists. While this is currently rare, in future more websites may be preserved in institutional repositories, like EPrints, DSpace, PURE etc.

These repositories may or may not make the deposited website visible to the general public. The long term value of preserving the information then becomes a decision for people with appropriate training. Some sites may be important to preserve for future generations, and which ones may be hard to decide, but the IT department can help by making such preservation cheaper and lower risk.


Such preservation could be of any combination of the original site, the fossilised site files, or a WARC file. I’m hoping that, in future, repositories like EPrints might start accepting a .warc file and serve it a bit like the Wayback Machine does. This seems a good idea for when websites can no longer stay on their old URLs because domains expire without budget to renew them, or hosting servers reach end of life.

Phase 6: Interred

People can be very nervous about destroying data, so it may be useful to offer to take a site offline but keep the data, and place a useful message at its previous location for a period of time, say 6 or 12 months. At the end of this time, if nobody has actually needed the content, it gets destroyed.
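If the destruction date is going to be tracked automatically, the arithmetic is simple but has one wrinkle: months have different lengths. A minimal Python sketch, assuming a hypothetical helper `destruction_date` and a default grace period of 6 months, might look like this.

```python
import calendar
from datetime import date


def add_months(start: date, months: int) -> date:
    """Return the date `months` after `start`, clamped to the last day
    of the target month when that month is shorter."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def destruction_date(taken_offline: date, grace_months: int = 6) -> date:
    """Date on which an interred site becomes due for destruction."""
    return add_months(taken_offline, grace_months)
```

A site taken offline on 31 August would come up for destruction on the last day of the following February, rather than on a date that doesn’t exist.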


A word of caution: I’m often asked by academics for a copy of a soon-to-be-erased website, just so they know they still have a copy if they need it. That’s usually fine, but might be inappropriate if the site contains data

Phase 7: Cremated

I like the metaphor, even if it’s a bit morbid. There is a point where a website is gone and we have destroyed all copies of it under our control. This sounds a bit dramatic, and it is. We want people to understand that after this point it’s gone and they can’t get it back. This step is most important on sites with a data protection issue.


Epilogue

I’ve been working on this workflow based on a mix of what we do and what we should do. This is a task from my Lean Six Sigma Yellow Belt project, for which the problem statement was “we don’t turn off websites when we should”.

From having put some thought into it, and from lots of conversations with people, it seems to me that the effort needs to go into identifying when a site is about to end its “actively maintained” phase. At that point the site owner should either elect to have it taken offline on a certain date and destroyed automatically 6 months later, or declare that they want it preserved, in which case both they and we (IT) will need to take steps to ensure it’s in a suitable shape.

For most sites that will be a cleanup and then turning to static files. For a few oddball sites, like ones running research code, we need to think really hard about how to sustain them. Old research websites running unmaintained code to provide cool demos and services seem to be the cause of at least two “Old VM at 100% CPU” incidents this month!

My plan is to turn this lifecycle into a document a bit more formal than this blog post, so that it can become a first version of our official process and be sent out to website owners to explain both what we can do for them and their responsibilities if they want us to support them.

How can it be improved, simplified, extended? How on earth do we get academics to do the step to prepare sites for preservation when they’ve already got 50 other little jobs?
