Data Ideas

Oooo, data

May 27, 2011

by Christopher Gutteridge

On Wednesday I gave a well-received talk to the university ‘Digital Economy’ research group (a virtual group containing people from all over the university).

Yesterday I had the fun problem of lots of people getting in touch with ideas! For the next couple of months I still can’t put my full focus on the Open Data, but here are some of the interesting things going on behind the scenes:

- Facilities / Equipment dataset to describe our cool toys. I’ve got people from all over the university interested in contributing to this. You can see a preview here. The idea is to help the left hand know what resources the right hand has, and who’s allowed to use them. I’ve had provisional interest in this from medical imaging, the high voltage lab, the nano cleanrooms, archaeology, civil engineering and chemistry.

- Disabled Go reports – someone pointed me at this site, which has detailed reports on disabled access for 98 of our buildings. Most of the data is too detailed to map into RDF, but what I was hoping to do is (1) just provide a link to the report for each building from our data and /building/ pages, which alone gets far more value out of it, and maybe (2) pull out the headline data, e.g. “has disabled loo”, “allows guide dogs”. We’ve been in touch with them and it sounds like they are pretty positive about the idea. I still need their permission to provide that information under the OGL or another open license.

- Catering have updated all the menus to include coffee & other hot drinks (they were missing before), after noticing that the opendatamap didn’t have any results when searching for ‘coffee’ (the horror). Problem is, the menu now says “Filter (Large)”, so there’s still no match for coffee! We’ll either rename it to “Filter Coffee (Large)” or consider adding a “Hidden Labels” field to help searches.
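To illustrate the “Hidden Labels” option, here is a minimal Python sketch, assuming each menu item has a visible label plus an optional list of extra search-only terms. The `MenuItem` class and `search` function are hypothetical and not how the opendatamap actually searches; the point is just that a query matches if it appears in either the visible label or any hidden label.

```python
from dataclasses import dataclass, field


@dataclass
class MenuItem:
    """Hypothetical menu entry: the label users see, plus search-only terms."""
    label: str
    hidden_labels: list[str] = field(default_factory=list)


def search(items: list[MenuItem], query: str) -> list[MenuItem]:
    """Return items whose visible label or any hidden label contains the query.

    Matching is a case-insensitive substring test, so "coffee" finds an item
    labelled "Filter (Large)" as long as one of its hidden labels mentions coffee.
    """
    q = query.casefold()
    return [
        item
        for item in items
        if q in item.label.casefold()
        or any(q in hidden.casefold() for hidden in item.hidden_labels)
    ]


menu = [
    MenuItem("Filter (Large)", hidden_labels=["Filter Coffee", "Coffee"]),
    MenuItem("Tea"),
]

print([item.label for item in search(menu, "coffee")])  # ['Filter (Large)']
```

The appeal of hidden labels over renaming is that the menu can keep whatever wording Catering prefer, while the search still finds it under the words people actually type.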

I got asked what the success criteria for the Open Data project were. This is very difficult to define, but for me it will be when the open-data-service is so much a part of business-as-usual that people no longer want an enthusiastic hacker running it! I’m looking forward to talking about the good ol’ days when open data was a new frontier and nobody even had an ontology for coffee types or bus timetables yet.

The Open Data is starting to be put to use:

- People are using the bus times pages (I need to make the interface better, I know!)

- Our upcoming campus mobile phone app will use some of the location data

- I’ve been asked how the service could help with student induction, e.g. helping people find what’s available, and where it is.

The other thing ticking along is getting live hookups to databases. Right now it’s all done with one-off dumps, but we want to be showing the live data. The dump-and-email approach is fine for getting started, but now it’s time to do the far less glamorous job of making the back-end more automated. I’m still working on getting energy use data per building, and I’ve a lead on recycling data!

Good times.

One final thing: you may notice that the Open Data Map is now not quite as pretty, and there’s a good reason for this. We noticed that we may not own data traced from Google Maps, so Colin has re-created all the data from the Ordnance Survey instead. There is slightly less detail, but the functionality is all still there.

The slides from my talk are available on EdShare. I’ve never uploaded to EdShare before — they’ve done a really great job at making a streamlined submit process. It’s far better than anything I’ve used in EPrints before, and I say this as the person who designed the EPrints 3.0 submit workflow!

Back to Reality (almost)

March 9, 2011

by Christopher Gutteridge

We’re spending this week catching up on little jobs we put on hold to get data.southampton ready on time, but there are people who keep offering me data!

Unilink Happy

The Unilink office have offered to take over looking after the bike shed location data, as they look after that service. That’s great! Our goal is for the ColinSourced data to be slowly handed over to parts of the university administration, with his dataset left to be the odds and ends which nobody is specifically responsible for. We’ve also been discussing how to advertise the bus-times related features to students and Unilink users. I don’t want to dive in with both feet for a week or two, in case there are issues that haven’t come to light yet. I’m dead proud of the fact that their receptionist told her boss “yes, I can look after that data, it’s just a google spreadsheet, it’s easy!”. That’s our goal!

Muster Points

I got a great suggestion from Mike, our facilities manager, that we could add public safety information about buildings. We don’t need every detail of every fire escape (there are signs in buildings for that), but we could usefully add a list of first aiders and a map polygon of the muster point (which we can render on the building page). We’ve created a mini dataset for the ECS buildings’ muster points, but I’ve not yet had time to import it into the site.

Google data a bit Shonky

My contact for university buildings data pointed out (rightly) that we had the incorrect location on the Highfield Site page for a few things. That’s not my data!! It’s the labels added by Google based on whatever they’ve found on the web. In this case the data would be accurate enough if you were planning how to drive to the Gallery, but useless if you are plotting a site map.

I fixed it by just using “t=k” (satellite imagery only) instead of “t=h” (hybrid), which turns off the labels from Google.

Of course the best way to get better data into Google is to publish it on the web! Anybody got any advice on how to get Google to read the locations of our stuff?

How does it all work, then?

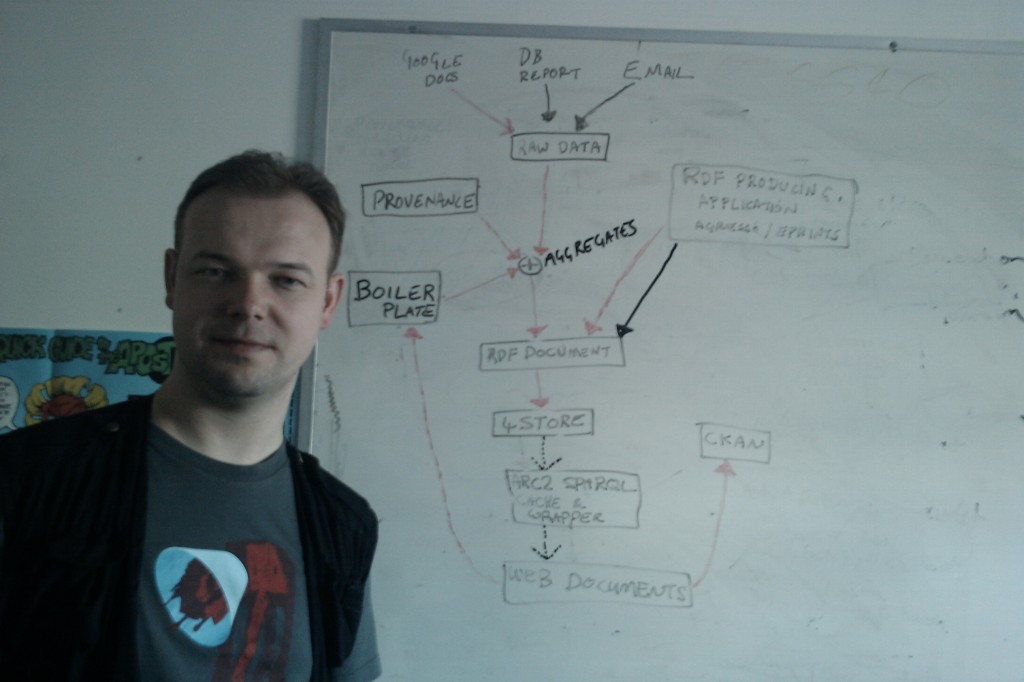

Sometime soon I’ll write an explanation on the Web Team Blog of how the systems on the data site work, but for now here’s the diagram.

The first thing you’ll notice about this diagram is that I’ve had a haircut. I really hated how I looked in the last picture I posted here and was sick of trying to look after it. Fresh new look for Spring!

OK, the diagram has 3 colours of arrows, but I couldn’t find 3 pens, so the dotted arrows at the bottom are the 3rd colour. Doing more with less!

The black arrows represent manual processes like people emailing me data and me copying into the correct directory.

The red arrows are all the stuff which happens when I run “publish dataset” (warning: Perl!).

The dotted lines are triggered when a request is made to a URL. It’s not very complicated and the script does most of the heavy lifting.

The long term goal is for something to download copies of spreadsheets (etc.) once an hour and MD5 them. If the checksum has changed since the last publication date, then it’ll republish automatically. That way I can leave it polling the spreadsheet describing the location of our cycle sheds, and if it ever changes it’ll republish it without bothering me. I work hard to get to be lazy later!
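The polling idea might look something like this minimal Python sketch. It is not the actual “publish dataset” script (that one is Perl); it assumes each spreadsheet can be downloaded as plain bytes from a URL, that the checksum of the last successful publication is stored in a small state file, and that something like cron runs the check once an hour. `fetch_url`, `republish_if_changed` and the state-file layout are hypothetical names for illustration.

```python
import hashlib
import urllib.request
from pathlib import Path
from typing import Callable, Optional


def md5_hex(data: bytes) -> str:
    """Hex MD5 digest of a downloaded copy; used only for change detection."""
    return hashlib.md5(data).hexdigest()


def fetch_url(url: str) -> bytes:
    """Download the current copy of a spreadsheet export (the URL is an assumption)."""
    with urllib.request.urlopen(url, timeout=60) as response:
        return response.read()


def republish_if_changed(
    fetch: Callable[[], bytes],
    publish: Callable[[bytes], None],
    state_file: Path,
) -> bool:
    """Fetch a fresh copy and republish only if its MD5 differs from the last published one.

    The checksum of the last successful publication lives in state_file, so the
    comparison survives restarts. Returns True if a republish happened.
    """
    data = fetch()
    checksum = md5_hex(data)
    previous: Optional[str] = (
        state_file.read_text().strip() if state_file.exists() else None
    )
    if checksum == previous:
        return False  # nothing has changed since the last publication
    publish(data)
    # Record the checksum only after publishing succeeded, so a failed
    # publish is retried on the next poll.
    state_file.write_text(checksum)
    return True
```

An hourly job would then call something like `republish_if_changed(lambda: fetch_url(sheds_csv_url), publish_sheds, Path("cycle-sheds.md5"))`, where `sheds_csv_url` and `publish_sheds` are placeholders. MD5 is fine here because it only detects accidental change, not tampering.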

Dave Challis would like to point out that I’ve skipped all his clever stuff around the 4store and ARC section so he’ll write a post later to explain that in more detail.

Recognition Back Home

There have been lots of tweets, but few blog posts, about this site so far, so it’s nice to be mentioned on the hyper-local blog from my home town!