A few weeks ago I went to an unconference called “redecentralize the web”. It was a two-day event but I decided to go for just the Saturday as I needed at least some weekend downtime.

The venue was excellent. It was hosted at the ThoughtWorks office in Soho, London. Working wifi, nearly enough coffee, very good food (in 2015 geeks eat kale).

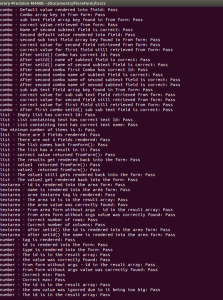

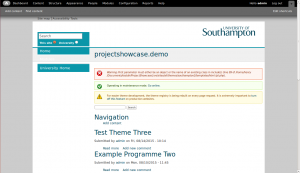

My main goal for the day was to talk about the work we’ve done around the autodiscoverable Organisation Profile Documents. It’s a good thing to be talking about as we can actually show it in action, rather than talk about it in theory.

That leads me to my main frustration with much of the discussion. Much of the work seemed to be building infrastructure designs which had no value beyond being decentralised. There were at least three distinct people who had a solution for a low-cost home server to run email and other services. The problem is that it’s not a product a normal email user would consider using: why give up the value Gmail gives you? There are some really good arguments for decentralisation of systems other than the privacy one, and those are what the community will need to focus on if they want the cause to be taken up by normal users.

Inter-Net Curtains

I had a challenging conversation over a coffee with a chap who pointed out that it’s very hard to argue for privacy, as people don’t really care and, worse, the people who need privacy in our culture are generally not people you want to be sticking up for: terrorists, paedophiles and drug dealers. However, there are many places in the world where people are likely to be persecuted for political activities, homosexuality, religion and even race.

This got me thinking about how the “normal” people I know feel about privacy. They care far more about their net curtains stopping their neighbours peeking in than they do about the police monitoring their email metadata without enough safeguards against inappropriate actions (judicial review seems appropriate before spying on people).

There’s no way such an issue can really capture the public interest without tabloid backing, and that needs a bad pun to get the idea over. So I give you: “Allow the British the right to (inter) net curtains!” (it probably still needs work).

Redecentralize the News

I went to a discussion session hosted by the now legendary Bill Thompson, who got people talking about the changes to news that the web has triggered. It was depressing listening to the experts (people who knew more than me, anyhow) talk about the fact that newspapers’ supporting services are now done by things like Gumtree and eHarmony, so the news is increasingly expected to pay for itself, and that has led to clickbait. Someone said that young reporters are less likely to do the ‘court circuit’ and other early-career learning experiences, and more likely to just rely on press releases, so the value of the local press holding local councils to account is disappearing.

The only solution I can think of for this is a Flattr-style approach, so that stories get income based on people valuing them rather than clicking them and triggering advertising revenue.

Some disheartening quotes from the session:

“Journalists have to learn to make money first and can learn about doing it right later”

“There are more government funded press officers in Northern Ireland than there are journalists”

“Now is a very good time to be a corrupt local official.”

Side note: SotonTab selling out

As a side note, the Southampton student independent news service called “SotonTab”, which started as a mixed bag of useful articles and tosh, has recently joined (sold out to) the national TheTab.com. I wish that they had not.

Apparently student authors logging into the site are now presented with the guidance “news is what people click on”.

That’s the next generation of journalists not even starting out with any lofty ideals.

SotonTab did some good work last year presenting the pro and con cases around the controversial conference about Israel that the university was going to host, and have written some satisfyingly rude articles about iSolutions (sometimes we do suck, and we deserve to be called on it by the students). But I have no hopes for their future now they are part of a national organisation which worships at the altar of the click. Sigh.

If you build it, will they come?

Sadly, it’s a belief of many technical people that if you build something “better” then people will start using it, but that’s not what makes people shift to a new service. Humans are tricky things.

This event was full of lovely people, ranging from the young and idealistic to the not-so-young and a bit more cynical. What techies who care about redecentralisation need to focus on is the social factors that will bring this about, not inventing distributed solutions for services which people are perfectly satisfied with. People need a reason to switch away from centralised services that is more than an abstract fear of government snooping.

I’m not sure what that would be, but that’s the big question for this community:

What can decentralisation offer individuals that provides them with a better experience than today’s centralised services?