A couple of days before setting off for dev8D, I set a script running to log the changes in friends/followers of twitter accounts related to dev8D.
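To log changes rather than whole networks, you compare successive snapshots. The sketch below is not the actual script, whose internals aren't described here. It assumes each snapshot is a set of hypothetical (follower, followed) pairs, so the changes are plain set differences. A connection that already exists in the first snapshot never shows up as new.

```python
# Each follow relationship is a (follower, followed) pair of screen names.
Edge = tuple[str, str]


def new_edges(before: set[Edge], after: set[Edge]) -> set[Edge]:
    """Follow relationships that appeared between two snapshots."""
    return after - before


def lost_edges(before: set[Edge], after: set[Edge]) -> set[Edge]:
    """Follow relationships that disappeared (unfollows) between two snapshots."""
    return before - after


# Hypothetical data: alice -> bob already existed when logging started,
# so it is never recorded as a change.
monday = {("alice", "bob")}
tuesday = {("alice", "bob"), ("bob", "alice"), ("alice", "carol")}

print(sorted(new_edges(monday, tuesday)))
# [('alice', 'carol'), ('bob', 'alice')]
```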

In looking at the data, I’ll talk about three sets of twitter users:

- Wiki users – this is anyone who has registered on the dev8D wiki with a twitter account. Anyone in this category is assumed to have been an attendee at dev8D.

- Dev8D community users – this is anyone who has mentioned ‘dev8D’ in a twitter post or is a wiki user

- Total users – anyone who follows, or is followed by, someone in either of the two categories above
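The three categories are nested: every wiki user is a community user, and every community user is counted in the total. Here is a minimal sketch of building them as sets. It assumes the wiki accounts, the accounts that mentioned ‘dev8D’, and the accounts connected to them are already available as sets of screen names. The parameter names and sample users are hypothetical.

```python
def build_categories(
    wiki_users: set[str],
    dev8d_mentioners: set[str],
    connected_users: set[str],
) -> dict[str, set[str]]:
    """Build the three nested user sets.

    wiki_users       -- registered on the dev8D wiki with a twitter account
    dev8d_mentioners -- mentioned 'dev8D' in a twitter post
    connected_users  -- follow, or are followed by, a community user
    """
    community = wiki_users | dev8d_mentioners  # community includes wiki users
    total = community | connected_users        # total includes wiki/community
    return {"wiki": wiki_users, "community": community, "total": total}


# Hypothetical example users.
cats = build_categories({"alice", "bob"}, {"carol"}, {"dave", "alice"})
print({name: sorted(users) for name, users in cats.items()})
# {'wiki': ['alice', 'bob'], 'community': ['alice', 'bob', 'carol'], 'total': ['alice', 'bob', 'carol', 'dave']}
```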

So far I’ve just looked at the smallest possible category: those who registered on the dev8D wiki with their twitter accounts, numbering 113 in total.

I captured 9 days of useful data, from 22nd Feb 2010 to 3rd March 2010.

The Numbers

In summary:

Those 113 attendees followed:

- 158 other attendees (i.e. wiki users)

- 250 dev8D community users (including wiki users)

- 565 total users (including wiki/community)

and were followed by:

- 73 other attendees (i.e. wiki users)

- 149 dev8D community users (including wiki users)

- 644 total users (including wiki/community)

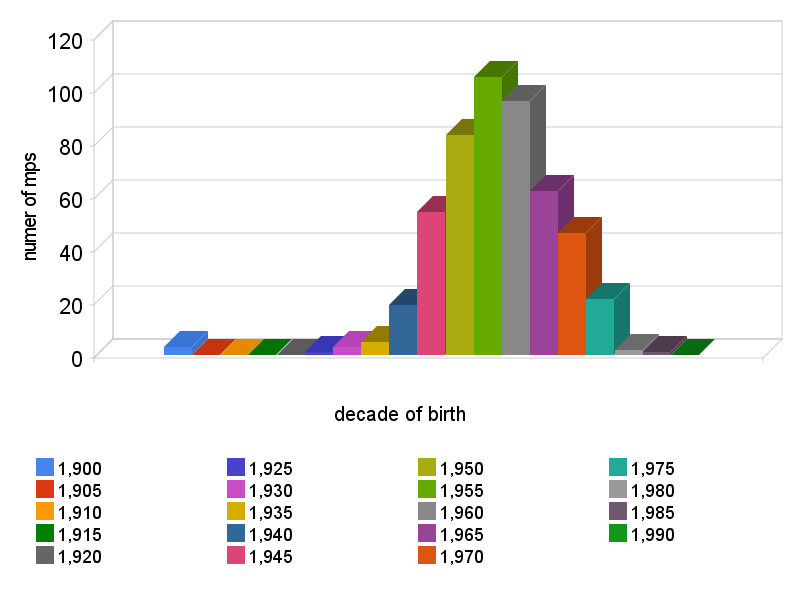

Putting those figures into bar charts:

Source Data

Raw data is available in a sqlite3 database at:

dev8d_twitter_network_2010-03-03.db (alternatively, view the source of one of the images above and extract the data passed in the Google Charts query string)
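The layout of the database isn't described in this post, so the table and column names below (`new_follows`, `wiki_users`) are hypothetical. The sketch assumes one row per newly observed follow. It shows how Python's built-in `sqlite3` module could pull out figures like "followed other attendees" and "followed anyone". With the real file you would open `sqlite3.connect("dev8d_twitter_network_2010-03-03.db")` and adapt the query to its actual tables. The demo here uses an in-memory database.

```python
import sqlite3

# Hypothetical schema: one row per new follow observed in a daily diff,
# plus a table of twitter accounts registered on the wiki.
SCHEMA = """
CREATE TABLE new_follows (day TEXT, follower TEXT, followed TEXT);
CREATE TABLE wiki_users (screen_name TEXT PRIMARY KEY);
"""

# New follows made by wiki users: how many targeted other wiki users,
# and how many there were in total. In SQLite, `x IN (...)` yields 0 or 1.
QUERY = """
SELECT
    SUM(f.followed IN (SELECT screen_name FROM wiki_users)) AS to_attendees,
    COUNT(*) AS to_anyone
FROM new_follows AS f
WHERE f.follower IN (SELECT screen_name FROM wiki_users)
"""


def count_follows(conn: sqlite3.Connection) -> tuple[int, int]:
    to_attendees, to_anyone = conn.execute(QUERY).fetchone()
    return to_attendees or 0, to_anyone  # SUM() is NULL when no rows match


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
conn.executemany("INSERT INTO wiki_users VALUES (?)", [("alice",), ("bob",)])
conn.executemany(
    "INSERT INTO new_follows VALUES (?, ?, ?)",
    [
        ("2010-02-25", "alice", "bob"),    # attendee -> attendee
        ("2010-02-25", "alice", "carol"),  # attendee -> non-attendee
        ("2010-02-26", "carol", "alice"),  # follower not on the wiki: ignored
    ],
)
print(count_follows(conn))  # (1, 2)
```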

Observations and Comments

Data oddities:

- There’s some discrepancy between the wiki ‘follows’ and ‘followed by’ numbers; the higher of the two is actually the true value. This is because I’m only looking at diffs in each person’s network, so connections between dev8D attendees that were made before the event, or before they signed up to the wiki, are ignored.

- The numbers for 2010-02-22 are higher than expected because data collection failed for the two preceding days, so the changes from all three days were lumped together (dividing that day’s numbers by 3 gives a more realistic estimate of the changes for that day). I’m not sure why data collection failed: network problems? A PC crash? A temporary twitter rate-limit ban? A script bug?
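The suggested correction spreads the lumped figure evenly over the days it covers. Below is a small sketch that assumes the missed days sit immediately before the day the figure was recorded. The count of 30 is an illustrative number, not one taken from the data.

```python
from datetime import date, timedelta


def spread_lumped(day: date, total: int, days_covered: int) -> dict[date, float]:
    """Split a count that covers several days evenly across those days.

    `day` is the date the lumped figure was recorded on; the missed
    days are assumed to be the ones immediately before it.
    """
    share = total / days_covered
    return {day - timedelta(days=i): share for i in range(days_covered)}


estimate = spread_lumped(date(2010, 2, 22), 30, 3)
for d in sorted(estimate):
    print(d.isoformat(), estimate[d])
# 2010-02-20 10.0
# 2010-02-21 10.0
# 2010-02-22 10.0
```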

On average, each dev8D attendee on twitter gained about 6 followers (644 new followers across 113 attendees) over dev8D and the couple of days surrounding it. I’d be interested in seeing how this compares to the average over the rest of the year.
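The average is the total number of new followers divided by the number of attendees. Here it is worked through with the figures from The Numbers, alongside the equivalent figure for accounts followed:

```python
attendees = 113
new_followers = 644  # "were followed by ... 644 total users"
new_following = 565  # "followed ... 565 total users"

print(f"followers gained per attendee: {new_followers / attendees:.1f}")   # 5.7
print(f"accounts followed per attendee: {new_following / attendees:.1f}")  # 5.0
```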

Also of interest is the fact that dev8D was attended by 200 or so people per day (450 on the first day, which included a linked data meetup), but was mentioned by around 500 different people on twitter. Hopefully this is some evidence that news, outcomes and interest from the event reach far beyond its immediate participants.

The next step is to look at using GraphViz to produce diagrams of how the network changes over time. Suggestions on how to visualise this are more than welcome – my network diagrams so far look like squashed spiders…
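One possible starting point, offered as a sketch rather than a finished approach, is to write each day's new follows out as a GraphViz DOT file. Keeping only edges between attendees keeps the graph small. The input format is an assumption: a list of (follower, followed) pairs plus a set of attendee screen names. It also assumes screen names never contain double quotes.

```python
def to_dot(edges: list[tuple[str, str]], attendees: set[str], title: str) -> str:
    """Render follow edges as a GraphViz digraph in DOT syntax.

    Only edges where both ends are attendees are kept, and duplicate
    edges are dropped; output is sorted so it is stable between runs.
    """
    lines = [f'digraph "{title}" {{', "  rankdir=LR;", "  node [shape=ellipse];"]
    for follower, followed in sorted(set(edges)):
        if follower in attendees and followed in attendees:
            lines.append(f'  "{follower}" -> "{followed}";')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical data for a single day.
dot = to_dot(
    [("alice", "bob"), ("bob", "carol"), ("alice", "dave")],
    attendees={"alice", "bob", "carol"},
    title="2010-02-25",
)
print(dot)
```

Each day's output can then be rendered with the GraphViz command line, e.g. `dot -Tpng 2010-02-25.dot -o 2010-02-25.png`. The `neato` or `sfdp` layout engines may cope better than `dot` once the network gets larger.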

(continued in my next post)