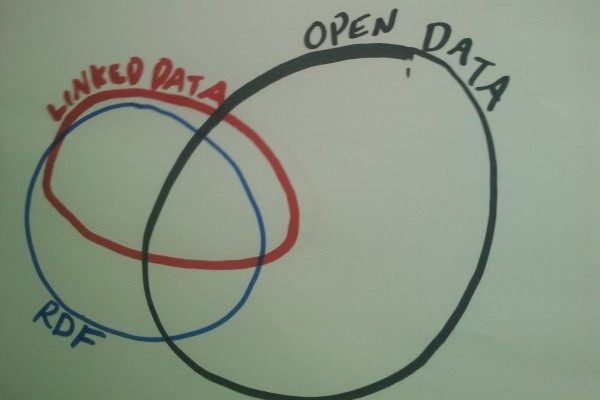

I’ve had a request to write a post on “What is the Semantic Web”… so here goes. This is a personal perspective, but if people point out glaring or dangerous errors, I may update the article. I’m not going to allow comments which will inflame the usual debates; I’m trying to write a friendly summary for people who are interested.

Executive Summary

The Semantic Web allows software to find out facts about the structure of data. A data file on the web says “Chris Gutteridge” is an employee of the University of Southampton. A computer uses Linked Data to discover facts about the identifier for the relationship “is employee of” and uses these facts to reason that Chris Gutteridge must be a person, and the University of Southampton is an organisation, even though these facts were not in the initial data document. Now imagine that scaled up to the whole Web!

URIs are Awesome

URIs are globally unique identifiers which identify, er, something. A subset of URIs are URLs which locate something on the web. There are also things called IRIs which allow non-ASCII characters, but don’t worry about them for the purposes of this explanation.

Why are URIs awesome?

Well, the instant value is that you can confidently generate unique identifiers, by generating them as web-like addresses in a domain that you own. This is just a convention, but a damn elegant one.

However if that’s all that URIs were then UUIDs would do very nearly as well, or better in some cases.

So URIs can do something UUIDs can’t?

In my previous post I explained that Linked Data is when you have links from one dataset to another. There’s a sort of degenerate version of this, which makes linked data people sad, but is still dead useful. That’s when you just use URIs as globally-unique identifiers, but don’t make them resolvable on the web to more data. If you define them in a website you own, then you always have the option of making them resolvable at some later date.

The idea of discovering more useful data by resolving a URI which identifies something is really neat, but where it gets confusing is that you can’t have an identifier which identifies both “Building 59 at the University of Southampton” and some “30K HTML document on the web”. One of these has a size measured in cubic meters, the other in bytes. So what we do is we make the identifier for the thing different from the one for the document. The really simple way to do it is like this:

http://users.ecs.soton.ac.uk/foaf.rdf#me — the URI for me

http://users.ecs.soton.ac.uk/foaf.rdf — the document describing me

When you resolve a URI with a # in (or a fragment identifier if you want to sound clever), you get the content from the web address without the ‘#’ bit. This is good as far as it goes, but can only return a single file format for the concept and is kind of ugly. There’s a much more neato way to do this but it requires a bit more webmastering…
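As a rough illustration of the "#" trick, here is a minimal Python sketch using the standard library's `urldefrag`. It only shows how a client splits the thing-URI into the document address it actually requests and the fragment it keeps to itself; the variable names are mine, not part of any standard.

```python
from urllib.parse import urldefrag

# The URI that identifies the person (taken from the example above).
thing_uri = "http://users.ecs.soton.ac.uk/foaf.rdf#me"

# A client drops the fragment before making the HTTP request, so the
# server only ever sees the document address.
document_url, fragment = urldefrag(thing_uri)

print(document_url)  # the document describing the thing
print(fragment)      # the local name of the thing inside that document
```

The server never sees the "#me" part, which is why this approach can only ever hand back one document for the concept.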

HTTP 303 See Other Redirects

(This is sometimes called “httpRange-14”, generally when people are arguing about it, which seems to be an ancient and holy tradition of semantic web mailing lists)

The clever way to get from the real-world-thing-URI to the data-document-URI is to use the HTTP return code 303. Now, you might have already run into 301 and 302, which mean a resource has moved permanently or temporarily, respectively. You can see them in action when you type in a web address and your browser changes it to the ‘official’ location. For example, if you visit http://data.soton.ac.uk you’ll be redirected to http://data.southampton.ac.uk/. You can look under the hood on a Linux or OS X machine by going to the command line and typing:

curl -I http://data.soton.ac.uk

The -I means show me only the HTTP headers. You should get something like:

HTTP/1.1 302 Found

Date: Fri, 22 Jul 2011 08:39:49 GMT

Server: Apache/2.2.14 (Ubuntu)

Location: http://data.southampton.ac.uk/

Vary: Accept-Encoding

Content-Type: text/html; charset=iso-8859-1

This tells the web browser to redirect to the URL in the Location: line to find the thing it’s looking for.

But when you resolve the URI for a thing which can’t be expressed as a document, we can’t just say the document has moved because it hasn’t. 303 is the code for “See Other”, or in other words, “I can’t or won’t give you what you asked for, but hey, this URL may be of interest…”. To see it in action, try:

curl -H"Accept: application/rdf+xml" -I http://id.southampton.ac.uk/building/59

You should get something like:

HTTP/1.0 303 See Other

Date: Fri, 22 Jul 2011 08:43:14 GMT

Server: Apache/2.2.14 (Ubuntu)

X-Powered-By: PHP/5.3.2-1ubuntu4.9

Location: http://data.southampton.ac.uk/building/59.rdf

Vary: Accept-Encoding

Connection: close

Content-Type: text/html

Wait a minute; why the -H bit?

Thanks for asking. The -H tells the web server what our preferences for document formats are. If you type http://id.southampton.ac.uk/building/59 into a web browser, or type:

curl -H"Accept: text/html" -I http://id.southampton.ac.uk/building/59

then you’ll get sent to http://data.southampton.ac.uk/building/59.html — which is a useful page for human beings. This is a bit fiddly to set up but really neat as the one identifier is now of use to both humans and machines. Following the identifier to find out more facts is sometimes called “follow your nose”. Humans can do it by hand, but so can software.
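To make the server's side of this a bit more concrete, here is a minimal Python sketch of the decision it has to make. It is an assumption-laden toy, not how id.southampton.ac.uk is actually implemented: the function name `see_other`, the suffix table and the default of HTML are all mine, and it ignores q-values in the Accept header, which a real server should honour.

```python
# Hypothetical mapping from Accept media types to file suffixes; the two
# suffixes are the ones seen in the curl examples above.
SUFFIX_FOR_TYPE = {
    "application/rdf+xml": ".rdf",
    "text/html": ".html",
}


def see_other(path, accept_header):
    """Return (status, location) for a request to an identifier URI.

    Simplification: takes the first listed media type we know about and
    ignores q-values.
    """
    for item in accept_header.split(","):
        media_type = item.split(";")[0].strip()
        if media_type in SUFFIX_FOR_TYPE:
            suffix = SUFFIX_FOR_TYPE[media_type]
            break
    else:
        suffix = ".html"  # assumed default for browsers and unknown clients
    return 303, "http://data.southampton.ac.uk" + path + suffix


print(see_other("/building/59", "application/rdf+xml"))
print(see_other("/building/59", "text/html,application/xhtml+xml;q=0.9"))
```

The point is that the identifier itself never returns a document; it only ever points at one, and which one depends on who is asking.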

But Chris, Why would a URI be useful to machines?

Aha, well that’s getting round to the main point of this article. You’ll notice that in RDF data you have two or three URIs per fact (we call them triples). Here are some representative examples. The three values are named subject, predicate, object. The predicate identifies the relationship between the subject and object.

<http://id.southampton.ac.uk/building/59>

<http://data.ordnancesurvey.co.uk/ontology/spatialrelations/within>

<http://id.southampton.ac.uk/site/1> .

In this one the object is a “literal”, or a value.

<http://id.southampton.ac.uk/building/59>

<http://www.w3.org/2000/01/rdf-schema#label>

"New Zepler" .

In this triple, we actually have a 4th value, which is a data type. You can have any data type you want but the most useful ones are the ones defined in the xsd namespace.

<http://id.southampton.ac.uk/building/59>

<http://www.w3.org/2003/01/geo/wgs84_pos#lat>

"50.937412"^^<http://www.w3.org/2001/XMLSchema#float> .

This final example uses “rdf:type” which indicates that the subject is in the set of things (or class) represented by the object identifier.

<http://id.southampton.ac.uk/building/59>

<http://www.w3.org/1999/02/22-rdf-syntax-ns#type>

<http://vocab.deri.ie/rooms#Building> .

I just used an abbreviation, back there, for rdf:type. Generally, in examples (and in real documents) the URIs for predicates and classes are abbreviated using a namespace prefix. The most common namespaces have well established prefixes so we use them as a short cut, but never forget that the class and predicate identifiers are full web addresses in their own right. Tip: To find the common prefix for a namespace, or namespace for a prefix, use the dead useful http://prefix.cc/
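If it helps, prefix abbreviation is nothing more than string substitution. Here is a minimal Python sketch; the function names `expand` and `abbreviate` are my own, and the prefix table only covers the namespaces used in this post.

```python
# Well-known prefixes; these namespace URIs all appear in the triples above.
PREFIXES = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
    "xsd": "http://www.w3.org/2001/XMLSchema#",
    "geo": "http://www.w3.org/2003/01/geo/wgs84_pos#",
}


def expand(abbreviated):
    """Turn 'prefix:local' into the full URI it stands for."""
    prefix, local = abbreviated.split(":", 1)
    return PREFIXES[prefix] + local


def abbreviate(uri):
    """Turn a full URI back into 'prefix:local' if we know its namespace."""
    for prefix, namespace in PREFIXES.items():
        if uri.startswith(namespace):
            return prefix + ":" + uri[len(namespace):]
    return uri  # no known prefix, leave it alone


print(expand("rdf:type"))
print(abbreviate("http://www.w3.org/2003/01/geo/wgs84_pos#lat"))
```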

Yes, But why would a machine want to resolve a URI?

There are a few good examples, but today I’m sticking to what it gets if it resolves a URI for a class or predicate. Often it will get back some triples describing that class or relationship.

This allows a computer to synthesise new triples, using simple logical steps.

Here’s an example…

Your software resolves the URI for the relationship

<http://data.ordnancesurvey.co.uk/ontology/spatialrelations/within>

defined by the UK Ordnance Survey. If you put it into a web browser you just get HTML back, but you can get the RDF by using curl (the -L means follow any redirections, including see-other ones, and leaving out the -I means you get the document itself rather than just the headers):

curl -L -H"Accept: application/rdf+xml" http://data.ordnancesurvey.co.uk/ontology/spatialrelations/within

…or you can use a web based tool to view it as RDF.

It states that this relationship is transitive and the inverse of

<http://data.ordnancesurvey.co.uk/ontology/spatialrelations/contains>

From that our software can deduce that if room http://id.southampton.ac.uk/room/59-1257 (our seminar room) is “within” http://id.southampton.ac.uk/building/59 and that building 59 is within the Highfield Campus (http://id.southampton.ac.uk/site/1) then… drum roll please… Seminar Room 1 is “within” Highfield Campus and therefore Highfield Campus “contains” our Seminar Room 1. One small loop of code for a machine, one giant leap for machine kind.

This might not seem like much, but it’s a huge step. It does not require custom code for each dataset.
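Here is that "small loop of code" as a minimal Python sketch. The facts about the predicate (that it is transitive, and what its inverse is) are hard-coded so it runs without a network connection; in real life your software would learn them by resolving the predicate URI. The function name `infer` and the two lookup tables are my own invention for illustration.

```python
WITHIN = "http://data.ordnancesurvey.co.uk/ontology/spatialrelations/within"
CONTAINS = "http://data.ordnancesurvey.co.uk/ontology/spatialrelations/contains"

ROOM = "http://id.southampton.ac.uk/room/59-1257"
BUILDING = "http://id.southampton.ac.uk/building/59"
SITE = "http://id.southampton.ac.uk/site/1"

# Facts we would normally learn by resolving the predicate URI; they are
# hard-coded here so the sketch runs without network access.
TRANSITIVE = {WITHIN}
INVERSE_OF = {WITHIN: CONTAINS, CONTAINS: WITHIN}


def infer(triples):
    """Apply the transitive and inverse rules until nothing new appears."""
    known = set(triples)
    while True:
        new = set()
        for s, p, o in known:
            if p in INVERSE_OF:
                # (s p o) implies (o inverse-of-p s)
                new.add((o, INVERSE_OF[p], s))
            if p in TRANSITIVE:
                # (s p o) and (o p o2) imply (s p o2)
                for s2, p2, o2 in known:
                    if p2 == p and s2 == o:
                        new.add((s, p, o2))
        if new <= known:
            return known
        known |= new


data = {(ROOM, WITHIN, BUILDING), (BUILDING, WITHIN, SITE)}
result = infer(data)
print((ROOM, WITHIN, SITE) in result)
print((SITE, CONTAINS, ROOM) in result)
```

Notice that nothing in `infer` knows about rooms, buildings or campuses. Swap in a different dataset that uses the same predicates and the same loop still works.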

Some other bits of semantics you can get back are that a class is a subclass of another class. For example, see the bit about buildings in the document you get back if you resolve the Building class described in the triples above. So, as a different example to keep it interesting, your data says that David Beckham is a Professional Footballer, and your software can then resolve the URI which represents “Professional Footballer” and learn that it is a subclass of “Professional Athlete”, which it can resolve to discover that all professional athletes are of class Person. It can then deduce that David Beckham is also of type Person. Who knew?
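The footballer example can be sketched in a few lines of Python too. The rdf:type and rdfs:subClassOf URIs are the real ones, but every example.org identifier below is made up for this illustration, and the subclass facts are hard-coded rather than fetched by resolving the class URIs.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
SUBCLASS_OF = "http://www.w3.org/2000/01/rdf-schema#subClassOf"

# Hypothetical identifiers, invented for this illustration.
EX = "http://example.org/"
BECKHAM = EX + "DavidBeckham"
FOOTBALLER = EX + "ProfessionalFootballer"
ATHLETE = EX + "ProfessionalAthlete"
PERSON = EX + "Person"


def all_types(thing, triples):
    """Return every class the thing belongs to, following subClassOf links."""
    found = set()
    to_visit = [o for s, p, o in triples if s == thing and p == RDF_TYPE]
    while to_visit:
        cls = to_visit.pop()
        if cls in found:
            continue  # already handled, also guards against loops
        found.add(cls)
        to_visit.extend(
            o for s, p, o in triples if s == cls and p == SUBCLASS_OF
        )
    return found


data = {
    (BECKHAM, RDF_TYPE, FOOTBALLER),    # from your own data
    (FOOTBALLER, SUBCLASS_OF, ATHLETE), # learned by resolving the class URI
    (ATHLETE, SUBCLASS_OF, PERSON),     # learned by resolving the next one
}
print(PERSON in all_types(BECKHAM, data))
```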

A more complex case is to restrict the domain and range of a predicate. That is the class, or set of classes, which are “legal” before and after the predicate. For example, the