I’m not very good at taking holidays. Every year my line manager has to remind me to take some leave. The problem is that I actually enjoy my job. My friends all have jobs or families now, so there aren’t many people I can just casually hang out with if I take some days off. I don’t have kids either, so I don’t have that need for structured, planned holidays.

I’ve found some ways of actually taking and enjoying leave, and they may be of interest to others.

The key issue is my martyr-like belief that everything will go horribly wrong if I don’t pay attention to what’s going on at work.

That is, sadly, quite true. I’ve been away from the office for about two weeks (counting the Repository Fringe unconference). In that time, I’ve had to deal with a few urgent work matters:

- Reviewing the final version of a job description we need to get out urgently.

- The place my immediate team were relocating to got changed to somewhere less desirable. If I hadn’t intercepted that, we might have had 33% less floor space than initially agreed for the next year or two.

- An enquiry about requirements for another possible position, which would directly relate to my area of responsibility (though as sorting out anything to do with jobs takes ages, that’s less worrying).

- Being asked to sign off on a description of a training event I’m running in October (asked at 5pm to sign off on it for next day).

- Uploading data into a new system so people can start working with it ASAP.

Most of these needed only half an hour’s attention, and I feel much more relaxed knowing they are in hand rather than fretting about them. Some really did require my urgent attention, and if I hadn’t answered the emails, I would have been the one who ended up in the worse situation.

These new positions are a result of our work being seen as a success by various powers, which is great, but some care and attention is needed to ensure they achieve the actual intent behind creating them.

The trade-off is that I’m not often in the office at 9am on work days.

Email Triage

I’ve been getting more and more organised about how I handle emails. On average I get maybe 80 a day now, which is much lower than the 150 of a few years back.

My first strategy has been to unsubscribe from everything I don’t really care about. I actually *do* want emails for “you have a direct message on Twitter”, and if you message me on Facebook it emails me, so I don’t have to monitor my Facebook inbox. However, I have lots of random Twitter accounts, and I’ve told those to stop emailing me updates. Anything with an “unsubscribe” link gets clicked before I delete it. God damn you and your call-for-papers.

The key change has been to move to a two-tier inbox system. I now use my INBOX only for actually incoming messages, and they are either deleted, moved-to-archive or moved-to-TODO. Even if it’s just a case of “I need to read that but it’ll require my full attention” it goes into TODO. About 95% of messages get deleted or read and moved to archive.

The problem with this is that it’s actually still quite a pain to move a message to a folder. When Thunderbird introduced a much better search tool, I gave up using lots of folders for my email archive and now just use the “a” key, which moves the email to archive/2013 (the current year). That still left me with the faff of dragging stuff into the TODO folder, so I looked into how to speed this up.

Dial “T” for “TODO”

I spent a bit of time looking into custom keys for Thunderbird and found “keyconfig”, which does exactly what I want. It runs some JavaScript when you press a key (or key combination). In this case I’ve mapped “T” to:

    // keyconfig action bound to "T": move the selected message to the _TODO_ folder
    MsgMoveMessage(GetMsgFolderFromUri("imap://cjg@imap.ecs.soton.ac.uk/_TODO_"));

(My TODO folder is called _TODO_ so it sorts first, alphabetically).

This means I can now triage my inbox 3 or 4 times a day in a very short amount of time, even when on leave. It means I know I’ve got a bunch of stuff in my todo list, but none of it urgent. Sometimes I leave genuinely urgent stuff in the INBOX, but it’s only one or two items so I don’t have that feeling of amorphous dread that I used to get from knowing some of those 300+ messages were important.

Vacation Message

I’ve noticed that after a couple of weeks away from the office, my vacation auto-replies are beginning to have an impact: people know I’m away and are emailing me much less. Next time I take a long leave, I’m seriously considering putting an “I’m going on leave” note into my .sig file a week beforehand.

Delegation

I don’t like the idea of leaving people hanging. Some of the emails I get are about serious problems and if these are only addressed to me (not cc’d to someone else), I’ll reply to say I’m on leave and cc someone else on the team who might be able to deal with it. This way I don’t have that nagging sense of guilt that my taking leave is causing problems. (yeah, martyr complex yadayada)

Ventnor Fringe

This is the key part of how I’m keeping from worrying too much about what’s happening back in the office. I’ve volunteered to help at the Fringe festival in Ventnor, the Isle of Wight town where I grew up. Much of this involves work similar to what I used to do for Dev8D, and you’ll notice the two programmes (Dev8D’s and VFringe’s) look quite similar. The VFringe one is a much more developed version of the tools, but it’s also been heavily hacked.

At my suggestion, Joe (the VFringe Webmaster) used Drupal for this year’s site. He’s done some great work configuring it and is using it as a database of events and a way to manage memberships & volunteers. Also, because it’s a full CMS, most of the core team can log in and update details.

Being an alleged “web expert”, I’ve done my best to be helpful without treading on the toes of the young people organising the event. I’m here to help, not take over. This year I came a whole week before the festival starts (staying with friends to keep the costs down), and that has worked much better than trying to do things remotely. My concerns about whether they really even wanted my help were allayed when the first thing they did when I showed up was give me a full administrator account!

In previous years, we’ve used a Google Docs spreadsheet to collect the data for themes, locations and events. This year we’re pulling the list of events directly from the Drupal site (I’ve made a custom view containing all the data I need, then munge that into usable data). There are a few issues. The key one is that while an event can have multiple start times, the node type allows only one location. Also, Joe used a free-text tagging system for the start times, which has produced a variety of horrors: a mix of “4pm”, “4:00pm” and “4.00pm”. Most of them could be read with a well-tuned regular expression, and I fixed the last handful by hand. If we were doing it again, what we’d really like is a list of performances per activity, where each one has a distinct start date and time, end date and time, venue and prices. Chances are someone has thought of this before; if not, it would be a handy plugin to make.
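
For anyone facing the same mess, here is roughly what “a well-tuned regular expression” for those start times might look like. This is a minimal sketch in JavaScript, not the code actually used for the site; `normaliseStartTime` is a hypothetical name, and it assumes the tags only come in the three shapes mentioned above (hour, optional “:” or “.” minutes, optional am/pm). A bare number with no am/pm is assumed to be 24-hour, and anything it can’t read comes back as `null` so it can be fixed by hand.

```javascript
// Hypothetical helper: normalise free-text start-time tags such as
// "4pm", "4:00pm" and "4.00pm" into a 24-hour "HH:MM" string.
// Returns null for anything it can't read, so those can be fixed by hand.
function normaliseStartTime(tag) {
  // hours, optional ":" or "." followed by minutes, optional am/pm (any case)
  const match = /^\s*(\d{1,2})(?:[:.](\d{2}))?\s*(am|pm)?\s*$/i.exec(tag);
  if (!match) return null;

  let hours = parseInt(match[1], 10);
  const minutes = match[2] ? parseInt(match[2], 10) : 0;
  const suffix = match[3] ? match[3].toLowerCase() : null;

  if (minutes > 59) return null;
  if (suffix) {
    // 12-hour clock: 12pm is noon, 12am is midnight
    if (hours < 1 || hours > 12) return null;
    if (suffix === "pm" && hours !== 12) hours += 12;
    if (suffix === "am" && hours === 12) hours = 0;
  } else if (hours > 23) {
    // no suffix: assume the tag is already on a 24-hour clock
    return null;
  }
  return String(hours).padStart(2, "0") + ":" + String(minutes).padStart(2, "0");
}

// Example: the leftovers (null) are the handful to fix by hand.
const tags = ["4pm", "4:00pm", "4.00pm", "11.30am", "teatime"];
console.log(tags.map(normaliseStartTime)); // [ '16:00', '16:00', '16:00', '11:30', null ]
```

Returning `null` rather than guessing keeps the regex honest: it handles the bulk automatically and leaves a short list of oddities for a human.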

I spent a couple of days refining my fancy views of the programme: the day planner, an all-in-one-page programme designed to be saved on your mobile device in case of connectivity issues, and a “what’s on now/next” animation which appears on the homepage. All my bits and bobs use RDF and Graphite as a backend, but that’s just my usual toolbox; nobody here will be consuming this open data except me.
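
The core of a “what’s on now/next” view is just filtering performances by time. The sketch below is hypothetical JavaScript, not the RDF/Graphite code behind the real site. It assumes each performance is a plain object with its own `start`, `end` and `venue` (the per-performance shape wished for earlier), that “now” means started but not yet finished, and that “next” means everything sharing the earliest start time still to come. The titles, venues and times in the example are made up.

```javascript
// Hypothetical sketch of "what's on now / next". Each performance is
// assumed to carry its own start and end (Date objects) and venue.
function whatsOnNowAndNext(performances, now) {
  // Now: anything that has started and not yet finished.
  const onNow = performances.filter((p) => p.start <= now && now < p.end);

  // Next: the earliest start time still in the future, plus everything
  // else starting at that same moment.
  const upcoming = performances
    .filter((p) => p.start > now)
    .sort((a, b) => a.start - b.start);
  const nextStart = upcoming.length > 0 ? upcoming[0].start.getTime() : null;
  const onNext = upcoming.filter((p) => p.start.getTime() === nextStart);

  return { onNow, onNext };
}

// Example with invented performances (all names and times are made up).
const performances = [
  { title: "Poetry slam", venue: "Venue A", start: new Date("2013-08-15T19:00"), end: new Date("2013-08-15T20:30") },
  { title: "Folk set", venue: "Venue B", start: new Date("2013-08-15T20:00"), end: new Date("2013-08-15T21:00") },
  { title: "Comedy", venue: "Venue C", start: new Date("2013-08-15T21:00"), end: new Date("2013-08-15T22:00") },
];
const { onNow, onNext } = whatsOnNowAndNext(performances, new Date("2013-08-15T20:15"));
console.log(onNow.map((p) => p.title)); // [ 'Poetry slam', 'Folk set' ]
console.log(onNext.map((p) => p.title)); // [ 'Comedy' ]
```

Having one record per performance, rather than one event with several start times and a single location, is what makes this a simple filter instead of a string-munging exercise.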

I spent the best part of a day on a pub’s wifi, keying all the little events, bar opening times and so on into the site, so that they all appear in the programme when people are planning their day. This was done while drinking some nice, but not-too-strong, beer, with a view over the beach to the Channel. It doesn’t sound like everyone’s ideal holiday, but it gives me something tangibly useful to do outside of work, and that helps me actually relax. It’s not very difficult, I’m good at it, and every hour I spend doing the grunt web-work is an hour I’m freeing up for one of the organisers to, well, organise.

I also spent half a day helping decorate one of the festival bars. A bit of honest, useful exercise right on the seafront is a refreshing and satisfying way to spend my time.

I also ended up handling a video-encoding issue for them. They made a two-minute video to be screened on the Red Funnel ferries, so that people on their way from Southampton to the Island will be made aware of the Fringe Festival. It was a bit of a nightmare, and I’m glad it was me dealing with it. The correct format, should you ever need to make an advert for the ferries, is an MPEG-encoded .avi file.

This is not a technology-focused festival. It’s about poetry, art, music and drama, so most of the organisers are very different from the people I’m used to at library, data and programming events. But it also means my specialisms go much further: I can save them work, and do things they just plain couldn’t do otherwise.

If I could change one thing, it would be to buy them all little policeman-style notebooks, with general notes and a page for what each of them needs to do morning, afternoon and evening on each day leading up to and during the festival. They rely too much on their memories, and that makes them stressed: their relationship with everything they have to do is a bit like mine with my INBOX a couple of years ago. There’s tons to remember, including a few really important things, but it all turns into a stress-inducing blob in memory. They are also not great at keeping people informed of what’s going on, which is a hard skill to master. A key part is getting back to people when you said you would, even if there’s no news. That way people know they are in the loop; otherwise they fret, assuming they’ve been forgotten. It’s a tricky relationship, as there are over 300 people involved in various capacities, and for each group their performance is the single most important thing, but it is only one of many for the festival organisers.

Did I mention that the oldest organiser of this 4-day, 21-venue festival is only about 22 years old? The work they are doing is fantastic and I’m proud to be able to help.

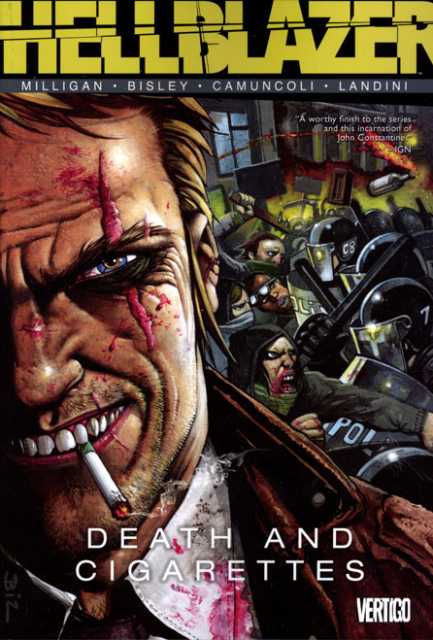

Today I’ve done most of my helping, so I’m off to the Woodland Bar, which they’ve built in the street where I used to live, to finish off my comic. It’s the last story in a comic which has run for 25 years, and I’m not anticipating a happy ending for John Constantine. This evening I’ve got tickets for a magic and burlesque show, and after that there’s a lock-in at Ventnor library with spoken-word performances compered by Dizraeli of Dizraeli and The Small Gods fame. I worked my way through that library’s science fiction and computer programming books as a teenager. You kids with Wikipedia don’t know how damn lucky you are!

Volunteer

To get back on topic, I think finding something useful to do with your leave is a really effective way to stop worrying about work. I know at least three techies who have worked on shows at the Edinburgh Fringe. I think there’s real value and satisfaction in us devs using our skills in volunteering and charity work, rather than just donating a monthly amount or doing unskilled labour for them.

So I’ve actually had some genuinely refreshing leave. I’ve learned a bit about Drupal, and haven’t just spent my whole time playing Minecraft or in the pub.

Now I’ve just got to find a way to use up another 14 days’ leave before they expire on October 1st…