We’ve been thinking a lot about research data and how to manage it, how to open it, how to share it, and how to get more value from it without making too much extra work. For some time I’ve been considering two different ways to think about research data.

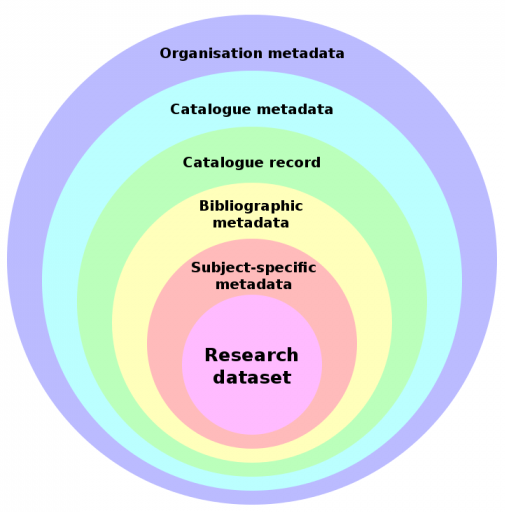

The Onion Diagram

The first is this diagram, which shows the various layers of metadata which I see as surrounding a research dataset.

Some of these layers are less obvious than others. The important thing is that each layer is created at a different time and by a different process, serves a different purpose, and is the responsibility of different people.

Often these layers are merged into a single database record, but it’s useful to think about them as distinct layers when looking at how to manage them.

As I’m most familiar with EPrints, I’ve included examples of where this information would be handled in that software if it was being used as a repository + catalogue for research datasets.

1. Research Dataset

This is the actual dataset produced as part of a research activity. It may be tiny or huge. It may or may not be available from a URL. In rare cases it may not be digital (a handwritten log book of results). It might be the weird file format that the lasercryomagnoscopeatron produces, an Excel spreadsheet or a hundred XML files. It may make sense to only a few people or tools.

If you are using EPrints as a dataset store+catalogue, this would be a document attached to an EPrints record.

2. Subject-specific Metadata

This will be provided by the researcher, and research communities will need to decide what goes in this metadata. Librarians can certainly advise and assist but the buck stops with the researchers. This layer provides the research context for the dataset. It may include information about the processes used and the type and configuration of equipment. Long term, I expect equipment manufacturers to be able to create much of this and output it with the raw dataset, similar to how modern digital cameras embed EXIF data in the JPEG images they create.

This might be as simple as a text description of anything you might need to know before working with the data, such as assumptions made, or the sample size etc, but I suspect we’ll see some fields start to standardise what metadata should be provided for certain types of experiment.

In a subject-specific archive this metadata may be merged with the other layers of metadata, but an institutional repository will hold all kinds of weird and wacky datasets, so it’s important that the people running the data catalogue are not prescriptive about this metadata, although a subject-specific harvester may make some rules about what it should contain.

If you are using EPrints, this data would be stored in a supplementary document attached to the record. A few years back we added a “metadata” document format for exactly this purpose.

I would expect that, in time, subject-specific tools would harvest this data from multiple sources and offer subject-specific search and analysis. Such tools would be beyond the scope of the university repository, but easy to implement on a big pile of similar scientific metadata records from many institutions. For example, a chemical research metadata aggregator could add a search by element (gold, lead, ...), which would be beyond the scope of the front end of the archive where the dataset is held.
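To make the aggregator idea a little more concrete, here is a minimal sketch in Python of a search by element over records harvested from several institutions. Everything in it is an assumption for illustration: the record fields (`source`, `title`, `formula`), the example hostnames and the sample data are invented, and a real aggregator would need a proper formula parser rather than a one-line regular expression.

```python
import re

# Hypothetical harvested records. The field names and values are invented
# for this sketch and do not come from any real metadata schema.
HARVESTED = [
    {"source": "repository-a.example.org", "title": "Gold chloride spectra", "formula": "AuCl3"},
    {"source": "repository-b.example.org", "title": "Lead oxide diffraction", "formula": "PbO"},
    {"source": "repository-c.example.org", "title": "Sodium tetrachloroaurate assay", "formula": "NaAuCl4"},
]


def elements_in(formula):
    """Return the set of element symbols in a simple chemical formula.

    A symbol is taken to be one capital letter optionally followed by one
    lowercase letter, so "Cl" is chlorine and is not confused with "C".
    """
    return set(re.findall(r"[A-Z][a-z]?", formula))


def search_by_element(records, symbol):
    """Return the records whose formula contains the given element symbol."""
    return [r for r in records if symbol in elements_in(r["formula"])]


gold = search_by_element(HARVESTED, "Au")
print([r["title"] for r in gold])
```

The point of the sketch is that the search only works because every harvested record carries the same subject-specific field, which is exactly what a general-purpose institutional repository cannot assume.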

3. Bibliographic Metadata

Here we get to most people’s comfort zone. This is the realm of good ol’ Dublin Core. This describes the non-scientific context of the dataset: who created it, what parts of what organisations were involved, when, where and who owns it and what the license is.

With my “equipment data” hat on, this seems like the layer which associates the dataset with the physical bit of equipment (e.g. http://id.southampton.ac.uk/equipment/E0007), the facility, the research group and the funder. Stuff like that. These are things which the library and management care about, but which don’t really matter to Science, unless you are evaluating how much confidence you have in the researchers.

In EPrints this is the metadata which is configurable by the site administrator and entered by the depositor or editor.
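As an illustration of what this layer might look like on the wire, here is a minimal Python sketch that builds a flat Dublin Core record. The element names come from the Dublin Core element set, but the values are invented, the `metadata` wrapper element is just a convenience for the example, and using `dc:relation` to point at the equipment URI is my assumption rather than an agreed convention.

```python
import xml.etree.ElementTree as ET

# Namespace of the 15-element Dublin Core element set.
DC_NS = "http://purl.org/dc/elements/1.1/"


def dublin_core_record(fields):
    """Build a flat XML record from (element name, value) pairs."""
    ET.register_namespace("dc", DC_NS)
    root = ET.Element("metadata")  # wrapper element chosen for this sketch
    for element, value in fields:
        child = ET.SubElement(root, f"{{{DC_NS}}}{element}")
        child.text = value
    return ET.tostring(root, encoding="unicode")


# All values below are placeholders, apart from the equipment URI
# which is the example used in the text above.
example = dublin_core_record([
    ("title", "Example dataset title"),
    ("creator", "Example, Researcher"),
    ("publisher", "Example University"),
    ("rights", "Example licence statement"),
    ("relation", "http://id.southampton.ac.uk/equipment/E0007"),
])
print(example)
```

Note that nothing in this record says anything about the science; that stays in the subject-specific layer.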

4. Data Catalogue Record Metadata

This is any data about the database record. Most of this will be collected automatically. It’s often in the same database table as the bibliographic data but it’s not quite the same thing.

This layer of the onion is stuff like who created the database record, when, what versions there have been. This can generally be created automatically by the repository/catalogue software.

In EPrints these are the fields which the system creates automatically.

This is generally merged with the bibliographic data layer unless you are doing some serious version control, but it is a distinct layer of metadata.

5. Catalogue Metadata

These last two layers are not really considered most of the time, but if we want things to be discoverable and verifiable it’s helpful for them to be quantifiable.

This is the layer of metadata about the data catalogue itself. Not all data catalogues actually contain the dataset; they may have got the record from another catalogue.

Anyhow, this layer tells you about what the catalogue contains, broadly, and the policies and ownership of the catalogue itself.

In EPrints this would be the repository configuration such as contact email and repository name, plus the fields which describe policy and licenses, which many people don’t ever bother to fill in. You can see this data via the OAI-PMH Identify request.
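For anyone who hasn’t looked at an Identify response, here is a small Python sketch that builds the request URL and pulls the catalogue-level fields out of a response. The element names and namespace are those of OAI-PMH 2.0; the base URL and the sample response are made up, and I parse a canned string rather than fetching anything so that the example stands on its own.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}

# Hypothetical, trimmed-down Identify response for illustration only.
SAMPLE_RESPONSE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <request verb="Identify">https://repository.example.org/cgi/oai2</request>
  <Identify>
    <repositoryName>Example Research Data Repository</repositoryName>
    <baseURL>https://repository.example.org/cgi/oai2</baseURL>
    <protocolVersion>2.0</protocolVersion>
    <adminEmail>repository@example.org</adminEmail>
  </Identify>
</OAI-PMH>"""


def identify_url(base_url):
    """Return the URL of an Identify request against an OAI-PMH base URL."""
    return f"{base_url}?{urlencode({'verb': 'Identify'})}"


def parse_identify(xml_text):
    """Extract the catalogue-level fields from an Identify response."""
    root = ET.fromstring(xml_text)
    identify = root.find("oai:Identify", OAI_NS)
    if identify is None:
        raise ValueError("response does not contain an Identify element")
    return {
        "repositoryName": identify.findtext("oai:repositoryName", namespaces=OAI_NS),
        "baseURL": identify.findtext("oai:baseURL", namespaces=OAI_NS),
        # adminEmail may be repeated, so collect every occurrence.
        "adminEmail": [e.text for e in identify.findall("oai:adminEmail", OAI_NS)],
    }


print(identify_url("https://repository.example.org/cgi/oai2"))
print(parse_identify(SAMPLE_RESPONSE))
```

Policy and licence information, where a repository provides it at all, normally lives in optional description blocks of the response, which this sketch does not try to read.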

6. Organisation Metadata

This is something which nobody has given that much thought to yet, but for data.ac.uk we’ve proposed that UK universities should create a simple RDF document describing their organisation, with links to key datasets, such as a research dataset catalogue, and other datasets which may be useful to automatically discover. This allows the repository to be marked as having an official relationship to the organisation. Some more information is available from the equipment data FAQ.

Peeling the Onion

The last step is to make the Organisation Profile Document (layer 6) auto-discoverable, given the organisation homepage. This means you can verify that an individual dataset is actually in a record, in a repository, which is formally recognised by the organisation (as opposed to one set up by a stray 3rd year doing a project, or a service with test data etc). Creating and curating these layers provides auto-discovery and probity in a very straightforward manner.
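The verification chain can be sketched in a few lines of Python. This is only a toy model under stated assumptions: the class names, fields and URLs are all hypothetical, the organisation profile is reduced to a list of catalogue URLs it officially recognises, and how the profile document is actually discovered from the homepage is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Layers 3 and 4: a catalogue record pointing at one or more datasets."""
    record_id: str
    dataset_urls: list


@dataclass
class Catalogue:
    """Layer 5: a catalogue, identified here by its base URL."""
    base_url: str
    records: list


@dataclass
class OrganisationProfile:
    """Layer 6: what the organisation says about its own catalogues."""
    homepage: str
    recognised_catalogues: list  # base URLs the organisation vouches for


def is_officially_recognised(dataset_url, catalogue, profile):
    """True if the dataset is in a record of a catalogue the organisation recognises."""
    if catalogue.base_url not in profile.recognised_catalogues:
        return False
    return any(dataset_url in record.dataset_urls for record in catalogue.records)


# Hypothetical example data.
dataset = "https://data.example.ac.uk/files/42/results.csv"
official = Catalogue("https://data.example.ac.uk", [Record("42", [dataset])])
stray = Catalogue("https://student-project.example.ac.uk", [Record("1", [dataset])])
profile = OrganisationProfile("https://www.example.ac.uk", ["https://data.example.ac.uk"])

print(is_officially_recognised(dataset, official, profile))
print(is_officially_recognised(dataset, stray, profile))
```

The same dataset URL passes for the official catalogue and fails for the stray one, because the check runs from the organisation inwards rather than trusting whatever the catalogue says about itself.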

You make a number of very good points, especially in your emphasis on describing the catalogue and the organisations. In fact, the whole landscape around datasets, including also vocabularies for people, places, periods of time, subjects, etc., needs to be better thought through. In the environment of Linked Data, persistent references to a lot of those ‘things’ are needed to be able to link stuff together.

On your ‘onion’ model, I would argue that it is not a layered but rather a modular model. It’s not really that one view is more ‘central’ than the other. Each of your layers is a different perspective on the same thing. You’ll have descriptive metadata (what it is), all kinds of relationships (what it is connected with), provenance metadata (where did it come from, how did it change over time), administrative metadata (how it is managed), preservation metadata (how it is kept over time), structural metadata (how it is organised internally), permissions and obligations, and probably more. These different modules are of interest to different people but together they make up the environment that the dataset operates in.