There are two open data communities: the quick and dirty JSON & REST crowd, who do most of the actual consuming of data, and the holier-than-thou RDF crowd (of which I myself am a card-carrying member).

What’s good about RDF? It’s easy to extend indefinitely, you can trivially combine data into one big pile, it has the locations of the data sources built into it (sometimes!?) as the identifiers themselves, it’s self-describing without the risk of ambiguity you get in CSV or JSON etc., and there are simple ways to synthesise new data based on rules (semantics), like all things in set “Members of Parliament” are also in set “Person” (allegedly).
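To make the “one big pile” and the rules idea concrete, here is a minimal sketch in plain Python. It is not how a real triple store or reasoner works; the `ex:` identifiers and the helper names `merge` and `infer_types` are made up for illustration, and only the single subclass rule mentioned above is applied.

```python
# A triple is a (subject, predicate, object) tuple; a graph is just a set of them.
RDF_TYPE = "rdf:type"
SUBCLASS_OF = "rdfs:subClassOf"


def merge(*graphs):
    """Combining graphs is a plain set union; shared identifiers line up for free."""
    merged = set()
    for graph in graphs:
        merged |= graph
    return merged


def infer_types(graph):
    """Apply the rule 'members of a subclass are members of the superclass'
    repeatedly until no new triples appear."""
    inferred = set(graph)
    changed = True
    while changed:
        changed = False
        subclass_pairs = [(s, o) for s, p, o in inferred if p == SUBCLASS_OF]
        for s, p, o in list(inferred):
            if p != RDF_TYPE:
                continue
            for sub, sup in subclass_pairs:
                if o == sub and (s, RDF_TYPE, sup) not in inferred:
                    inferred.add((s, RDF_TYPE, sup))
                    changed = True
    return inferred


# Hypothetical data from two different sources.
people = {("ex:alice", RDF_TYPE, "ex:MemberOfParliament")}
schema = {("ex:MemberOfParliament", SUBCLASS_OF, "ex:Person")}

graph = infer_types(merge(people, schema))
```

After this runs, `graph` also contains the synthesised triple saying `ex:alice` is an `ex:Person`, even though neither source stated it.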

So why do people keep asking for JSON? Because it’s so much less intimidating. It is basically just a serialised data structure that any programmer is already familiar with. It’s like XML with all the unnecessary bits cut away. Any first-year programmer can do a quick web request and get data they can get their head around.

For hard core data nerds (like me) the power of RDF is awesome, and fun to play with, but there’s a learning curve. This is why I was inspired to write Graphite, which is a PHP library for doing quick stuff with small (<10000 triples) graphs. I then used it to create the Graphite Quick & Dirty RDF browser, because I needed it to debug the stuff I was producing and consuming and the existing viewers didn’t do what I wanted.

I think one of the reasons data.southampton has been very successful is that for almost every dataset we’ve made at least one HTML viewer: for buildings, points-of-sale (or service), rooms, products (and services), organisation elements, bus-stops etc. These mean the data in the site is instantly valuable to people who can’t read raw data files. And sorry guys, but I don’t believe that just converting the results of a SPARQL DESCRIBE query on the URI to HTML is good enough for anyone who’s not already an RDF nerd, e.g. http://ns.nature.com/records/pmid-20671719 (admittedly, this is the fall-back mode for things on data.southampton we’ve not yet made pretty viewers for…)

So here, in brief, is my recommendation for what you should plan for a good organisational open data service (and I confess, we’re not there yet, but you can learn from our mistakes). I’m not going to comment much on licenses or dataset update policy etc.; this post is about formats and URIs.

data.* best practice

RDF should be the native format. Although some data may be stored in relational databases rather than a triple store, it should still have an RDF presentation available. Licenses should be specified. If you don’t have a license (due to vagueness from decision makers), use http://purl.org/openorg/NoLicenseDefined to state this explicitly. Give information on who to contact about each dataset and how to send corrections to incorrect data, possibly using the oo:contact and oo:corrections predicates we defined for this purpose.
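As a rough illustration of that metadata, the sketch below writes three N-Triples lines for a dataset. It assumes the `oo:` prefix expands to `http://purl.org/openorg/` (consistent with the NoLicenseDefined URI above) and uses Dublin Core’s `license` predicate, which is my choice rather than something this post prescribes. The dataset, contact and mailto values are hypothetical.

```python
OO = "http://purl.org/openorg/"    # assumed expansion of the oo: prefix
DCT = "http://purl.org/dc/terms/"  # Dublin Core terms, used here for the license


def dataset_metadata(dataset_uri, contact_uri, corrections_uri, license_uri=None):
    """Return N-Triples describing license, contact and corrections for a dataset.

    If no license is given, state that explicitly with NoLicenseDefined.
    """
    license_uri = license_uri or OO + "NoLicenseDefined"
    triples = [
        (dataset_uri, DCT + "license", license_uri),
        (dataset_uri, OO + "contact", contact_uri),
        (dataset_uri, OO + "corrections", corrections_uri),
    ]
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in triples)


# Hypothetical dataset on the made-up myorg.org domain.
print(dataset_metadata(
    "http://id.myorg.org/dataset/buildings",
    "http://id.myorg.org/person/data-manager",
    "mailto:opendata@myorg.org",
))
```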

URIs

Everything should be given a well-specified URI, and if you’re minting the URI you should do it on a website reserved for nothing but identifiers. Ideally, if your organisation homepage is www.myorg.org then you define official URIs on id.myorg.org. I know the standard is data.myorg.org/resource/foo, but I think it narrows the cognitive gap to use one domain for URIs and one for documents.

RDF for individual resources

Every URI should have an RDF format available (I like Turtle, RDF/XML is the most common, and N-Triples is easiest to show to people who are new to RDF). I suggest supporting all three, as it costs nothing given a good library.
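One common way to serve several formats from a single identifier is to look at the HTTP Accept header and redirect to a document URL. The sketch below is only an illustration under assumptions: the id.myorg.org to data.myorg.org mapping and the file extensions are invented, and the Accept parsing is simplified (it ignores wildcards such as `*/*`, which just fall through to the default).

```python
# Hypothetical mapping from media type to the file extension we serve.
FORMATS = {
    "text/turtle": "ttl",
    "application/rdf+xml": "rdf",
    "application/n-triples": "nt",
    "text/html": "html",
}


def choose_format(accept_header, default="html"):
    """Return the extension for the best supported media type in an Accept header."""
    candidates = []
    for position, part in enumerate(accept_header.split(",")):
        fields = [field.strip() for field in part.split(";")]
        media_type = fields[0].lower()
        q = 1.0
        for param in fields[1:]:
            if param.startswith("q="):
                try:
                    q = float(param[2:])
                except ValueError:
                    q = 0.0
        if media_type in FORMATS and q > 0:
            # Highest q wins; ties go to whichever the client listed first.
            candidates.append((-q, position, FORMATS[media_type]))
    return min(candidates)[2] if candidates else default


def document_url(resource_uri, extension):
    """Map an identifier on id.myorg.org to a document on data.myorg.org (assumed layout)."""
    path = resource_uri.split("://id.myorg.org/", 1)[1]
    return f"http://data.myorg.org/{path}.{extension}"


extension = choose_format("text/turtle;q=0.8, application/rdf+xml")
print(document_url("http://id.myorg.org/building/32", extension))
```

A web server would typically answer a request for the identifier with a 303 redirect to the URL this produces.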

HTML for individual resources

Every URI should have an HTML page which presents the information in a way useful to normal people interested in the resource, not data nerds. The HTML page should advertise other formats available. In some cases the HTML page may be on the main website, because it’s already got a home in the normal website structure; in this case it should still mention that there is data available. The HTML may not always show all the data available in other formats, but it should show as much as makes sense. It should use graphs & maps to communicate information, if that’s more appropriate.

JSON for individual resources

In addition to the RDF you should aim to make each resource available as a simple JSON file to make it easy for people to consume your data. Data.southampton.ac.uk does not do this yet, and I feel it’s a mistake on my part.
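What “simple JSON” means is a judgment call. One possible shape, sketched here under my own assumptions, is to flatten the triples about a resource into a dictionary keyed by the short local name of each predicate. The function name `resource_to_json` and the sample building are hypothetical, and a real service would need to handle name clashes between vocabularies.

```python
import json


def resource_to_json(uri, triples):
    """Flatten the triples about one resource into a plain dictionary."""
    values = {}
    for s, p, o in triples:
        if s != uri:
            continue
        # Use the part after the last '#' or '/' as a short, friendly key.
        key = p.rsplit("#", 1)[-1].rsplit("/", 1)[-1]
        values.setdefault(key, []).append(o)
    doc = {"uri": uri}
    for key, items in values.items():
        # Collapse single-valued lists so the simple case stays simple.
        doc[key] = items[0] if len(items) == 1 else items
    return doc


# Hypothetical triples describing a building.
building = "http://id.myorg.org/building/32"
triples = [
    (building, "http://www.w3.org/2000/01/rdf-schema#label", "Building 32"),
    (building, "http://www.w3.org/2003/01/geo/wgs84_pos#lat", "50.9363"),
    (building, "http://www.w3.org/2003/01/geo/wgs84_pos#long", "-1.3977"),
]

result = resource_to_json(building, triples)
print(json.dumps(result, indent=2))
```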

KML for individual resources

If the resource contains the spatial locations or shapes of things, please also make a KML file available. I’ve got a utility that converts RDF to KML (please ask for a copy to host on your site) and I’m working to make it do two-way conversions between KML and WKT in RDF.
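This is not the utility mentioned above, just a minimal sketch of the idea for point data: take names with latitude and longitude (however you got them out of your RDF) and write KML placemarks using Python’s standard library. The function name and the sample coordinates are assumptions.

```python
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"


def points_to_kml(points):
    """Build a KML document from (name, latitude, longitude) tuples."""
    ET.register_namespace("", KML_NS)  # serialise KML as the default namespace
    kml = ET.Element(f"{{{KML_NS}}}kml")
    document = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    for name, lat, lon in points:
        placemark = ET.SubElement(document, f"{{{KML_NS}}}Placemark")
        ET.SubElement(placemark, f"{{{KML_NS}}}name").text = name
        point = ET.SubElement(placemark, f"{{{KML_NS}}}Point")
        # KML writes coordinates as longitude,latitude (not the other way round).
        ET.SubElement(point, f"{{{KML_NS}}}coordinates").text = f"{lon},{lat}"
    return ET.tostring(kml, encoding="unicode")


# Hypothetical building location.
kml_text = points_to_kml([("Building 32", 50.9363, -1.3977)])
print(kml_text)
```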

Lists of Things

You’re certain to have pages which present lists of resources of a given type (buildings, people etc.). These pages should be laid out to be useful for normal people, not nerds. At the time of writing, our Products and Services page has got a bit out of hand and needs some kind of interface or design work. All HTML pages containing lists should link to a few things. First of all, if the list was created with a SPARQL query, link to your SPARQL endpoint so people can see and edit the query. This really promotes learning. The list is in effect a report from your system. I recommend putting in any additional columns which are easy, even if they’re not needed for the HTML page, as this makes life much easier for people trying to consume your data: it’s easier for a beginner to remove stuff from a SPARQL query than to add it.
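Linking a list page back to its query can be as simple as URL-encoding the query into the endpoint address. The sketch below assumes an endpoint that accepts the query in a `query` parameter on a GET request (as the SPARQL protocol describes); the endpoint URL, class URI and query are made up, with an extra optional column left in for people to play with.

```python
from urllib.parse import urlencode

# Hypothetical query behind a "list of buildings" page. The ?lat and ?long
# columns are not shown on the HTML page but are cheap to include.
BUILDINGS_QUERY = """SELECT ?building ?label ?lat ?long WHERE {
  ?building a <http://example.org/ns/Building> ;
            <http://www.w3.org/2000/01/rdf-schema#label> ?label .
  OPTIONAL {
    ?building <http://www.w3.org/2003/01/geo/wgs84_pos#lat> ?lat ;
              <http://www.w3.org/2003/01/geo/wgs84_pos#long> ?long .
  }
}"""


def sparql_link(endpoint, query):
    """Return a URL that runs the given query on the endpoint."""
    return endpoint + "?" + urlencode({"query": query})


link = sparql_link("http://sparql.myorg.org/", BUILDINGS_QUERY)
print(link)
```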

I also recommend making the results available as CSV (we had to build a gateway to the 4store endpoint as it doesn’t do it natively). Adding CSV means the list can load directly into Microsoft Excel, which will make admin staff very happy. Our hacked PHP ARC2 SPARQL endpoint sits between the public web and the real 4store endpoint. It can do some funky extra outputs including CSV, SQLite and PDF (put in for April 1st). Code is available on request, but we can’t offer much free support time as we’ve got lots to do ourselves.
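Our gateway is PHP, so the following is not that code. It is a small Python sketch of the core conversion, assuming the endpoint can return the standard SPARQL JSON results format (a `head.vars` list plus `results.bindings`). The sample result is invented.

```python
import csv
import io


def sparql_json_to_csv(results):
    """Convert a SPARQL JSON results document (already parsed) into CSV text."""
    variables = results["head"]["vars"]
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(variables)  # header row: one column per query variable
    for binding in results["results"]["bindings"]:
        # Unbound variables (e.g. from OPTIONAL) become empty cells.
        writer.writerow([binding.get(v, {}).get("value", "") for v in variables])
    return out.getvalue()


# Hypothetical result for a two-column query.
sample = {
    "head": {"vars": ["building", "label"]},
    "results": {"bindings": [
        {"building": {"type": "uri", "value": "http://id.myorg.org/building/32"},
         "label": {"type": "literal", "value": "Building 32"}},
        {"building": {"type": "uri", "value": "http://id.myorg.org/building/59"}},
    ]},
}

csv_text = sparql_json_to_csv(sample)
print(csv_text)
```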

You might also want to add RSS, Atom or other formats which make sense.

APIs

Once you’ve got all that you’ve got a very powerful & flexible system, but there’s no reason not to create APIs as well. My concern with an organisation creating an API for very standard things (like buildings or parts of the org chart) is that you lose the amazing power of Linked Data. An API tends to lock people into consuming from one site, so an app built for Lincoln data won’t work at Southampton.

Making APIs takes time, of course, as does providing multiple formats. That said, once you’ve got a SPARQL backend, it should be bloody easy to make APIs. In fact, if it’s an open endpoint, you can make APIs on other people’s data!
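To show how thin such an API can be, here is a sketch in which an API call is just a canned SPARQL query plus some reshaping of the results. Everything named here is an assumption: the class URI, the `TYPES` mapping and the `list_things` function are invented, and `run_query` stands in for whatever function sends a query to your endpoint and returns parsed SPARQL JSON results.

```python
QUERY_TEMPLATE = """SELECT ?item ?label WHERE {{
  ?item a <{type_uri}> ;
        <http://www.w3.org/2000/01/rdf-schema#label> ?label .
}}"""

# Hypothetical mapping from friendly API names to class URIs. Only names
# listed here are accepted, so user input never reaches the query text.
TYPES = {"buildings": "http://example.org/ns/Building"}


def list_things(kind, run_query):
    """Return [{'uri': ..., 'label': ...}, ...] for a known kind of thing."""
    if kind not in TYPES:
        raise KeyError(f"unknown kind: {kind}")
    query = QUERY_TEMPLATE.format(type_uri=TYPES[kind])
    results = run_query(query)
    return [
        {"uri": row["item"]["value"], "label": row["label"]["value"]}
        for row in results["results"]["bindings"]
    ]


def fake_endpoint(query):
    """Stand-in for a real SPARQL endpoint, so the sketch runs offline."""
    return {
        "head": {"vars": ["item", "label"]},
        "results": {"bindings": [
            {"item": {"type": "uri", "value": "http://id.myorg.org/building/32"},
             "label": {"type": "literal", "value": "Building 32"}},
        ]},
    }


print(list_things("buildings", fake_endpoint))
```

Because `run_query` is passed in, pointing the same function at somebody else’s open endpoint is just a matter of swapping that one argument.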

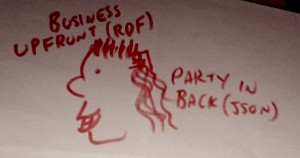

RDF Business Up-Front, JSON Party in-the-Back

So that’s my Open Data Service Mullet: provide RDF, SPARQL and Cool URIs for the awesome things they can do that other formats can’t, but still provide JSON, CSV and APIs everywhere you can, because that’s easier for keen people to do quick cool stuff with.
