Archive for the ‘Uncategorized’ Category

Ethnography 3 – Methodologies & Analysis

Researcher: Jo Munson

Title: Can there ever be a “Cohesive Global Web”?

Disciplines: Economics, Ethnography (Cultural Anthropology)

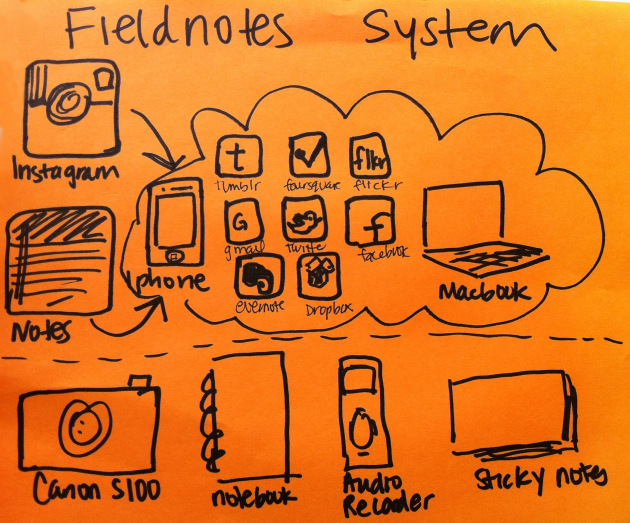

How one modern Ethnographer uses technology to perform fieldwork.

Methodologies in Ethnography

The primary method of collecting data and information about human cultures in Ethnography is fieldwork, although comparing different cultures and reflecting on historical data are also important in Ethnographic methodology. The majority of Ethnographic research is qualitative in nature, reflecting its position as a social science. Ethnographers do, however, attempt to collect quantitative data, particularly when taking a census of a community and in comparative studies.

The methods used to collect information can be broadly categorised as follows:

Fieldwork methods:

- Observation, Participant Observation & Participation – a feature of nearly all fieldwork, Observation can vary from a high-level recording of events without interacting with the community to becoming wholly immersed in the community. The latter can take months or even years and will usually require the Ethnographer to learn the language of, and build relationships with, the locals.

- Survey & Interview – surveys can be structured with fixed questions (often used at the start of a fieldwork placement), or unstructured, giving the interviewee an opportunity to guide the direction of his or her answers.

Comparative methods:

- Ethnohistory – Ethnohistory involves studying historical Ethnographic writings and ethnographic or archaeological data to draw conclusions about an historic culture. The field is distinct from History in that the Ethnohistorian seeks to recreate the cultural situation from the perspective of those members of the community (takes an Emic approach).

Unlike Observation / Participation and Survey, Ethnohistory need not be done “in the field”. Ethnohistory has become increasingly important as it can give valuable insight into the speed and form of the “evolution” of societies over time.

- Cross-cultural Comparison – Cross-cultural Comparison involves the application of statistics to data collected about more than one culture or cultural variable. The major limitations of Cross-cultural Comparison are that it is ahistoric (assumes that a culture does not change over time) and that it relies on some subjective classifications of the data to be analysed by the Ethnographer.

Sources of bias

The sources of bias in Ethnographic data collection can be substantial and are often unavoidable. Some of the most common are:

- Skewed (non-representative) sampling – samples can be skewed for many reasons. Sample sizes are often small, so the selection of any one interviewee may not be representative of the population. The Ethnographer can also only be in one place at a time and will often make generalisations about the whole community based on the small section he or she interacts with. The Ethnographer is also limited to the snapshot in time in which he or she observes the community.

- Theoretical biases – the method of stating a hypothesis prior to investigation may cause the Ethnographer to only collect data consistent with their viewpoint relative to the initial hypothesis.

- Personal biases – whilst Ethnographers are acutely aware of the effect their own upbringing may have on their objectivity (think Relativism), this awareness does not stop prior beliefs having an effect on data collection.

- Ethical considerations – Ethnographers may uncover information that could compromise the cultural integrity of the community being observed and may choose to play this down to protect their informants.

Interpreting Ethnographic research findings

Whilst there is no consensus on evaluation standards in Ethnography, Laurel Richardson has proposed five criteria that could be used to evaluate the contribution of Ethnographic findings:

- Substantive Contribution: “Does the piece contribute to our understanding of social-life?”

- Aesthetic Merit: “Does this piece succeed aesthetically?”

- Reflexivity: “How did the author come to write this text…Is there adequate self-awareness and self-exposure for the reader to make judgments about the point of view?”

- Impact: “Does this affect me? Emotionally? Intellectually?” Does it move me?

- Expresses a Reality: “Does it seem ‘true’—a credible account of a cultural, social, individual, or communal sense of the ‘real’?”

These reflections, alongside the statistical output of quantitative or Cross-cultural Comparative study, can be used to reform Ethnographic theories and gain insight into human culture.

Next time (and beyond)…

The order and form of these may alter, but broadly, I will be covering the following in the coming weeks:

- Can there ever be a “Cohesive Global Web”?

- Ethnography 1 – Introduction & Definition

- Ethnography 2 – Disciplinary Approach

- Economics 1 – Introduction & Definition

- Economics 2 – Disciplinary Approach, the Big Theories

- Ethnography 3 – Methodologies & Analysis

- Economics 3 – Modelling & Methodologies

- Ethnographic Approach to the “Cohesive Global Web”

- Economic Approach to the “Cohesive Global Web”

- Ethno-Economic Approach to the “Cohesive Global Web”

Sources

The American Society for Ethnohistory. 2013. Frequently Asked Questions. [online] Available at: http://www.ethnohistory.org/frequently-asked-questions/ [Accessed: 31 Oct 2013].

Umanitoba.ca. 2013. Objectivity in Ethnography. [online] Available at: http://www.umanitoba.ca/faculties/arts/anthropology/courses/122/module1/objectivity.html [Accessed: 31 Oct 2013].

Peoples, J. and Bailey, G. 1997. Humanity. Belmont, CA: West/Wadsworth.

Richardson, L. 2000. Evaluating Ethnography. Qualitative Inquiry, 6 (2), pp. 253-255. Available from: doi: 10.1177/107780040000600207 [Accessed: 31 Oct 2013].

Image retrieved from: http://ethnographymatters.net/tag/instagram/

Economy and Open Source

We could define an Economy as an infrastructure of connections among producers, distributors, and consumers of goods and services in one community. On the other hand, we could state that the Internet is a global network that connects public and private sectors in different ecosystems: industry, academia and government.

There is no doubt about the potential of the Internet in the economy of a country, because it can minimize the transaction costs of goods and services, create efficient and productive distribution channels, develop clusters in specific areas, increase supply and demand and provide more consumer choice.

For this reason, technology plays a crucial role in the decision-making process, because it helps us to create strategies and develop solutions. Over the last two decades, proprietary or closed source software has dominated the industry, causing high costs from licenses and patent use and restricting users from modifying, redistributing or sharing the products. Furthermore, reverse engineering proprietary software can result in a penalty or even jail, which inhibits innovation and software development.

Nowadays, public and private sectors in any domain have the opportunity to use open source software as an alternative in order to increase security, reduce costs, improve quality, and develop interoperability and customization, but mainly to uphold independence and freedom.

Some people think that open source software is not a real choice because there is no technical or financial support; however, in these essays we will study the open source phenomenon and its implications for the global economy.

First, let us start by defining the term open source.

According to the Open Source Initiative (1):

“Open source doesn’t just mean access to the source code. The distribution terms of open-source software must comply with the following criteria:

1.- Free redistribution

2.- Source code

3.- Derived works

4.- Integrity of the author’s source code

5.- No discrimination against persons or groups

6.- No discrimination against fields of endeavor

7.- Distribution of license

8.- License must not be specific to a product

9.- License must not restrict other software

10.- License must be technology neutral”

In the same way the Open Source Paradigm (2) explains that:

The paradigm arises in an ecosystem composed of academic institutions, companies and individuals who set out to solve a specific need through software development. Usually, the initial project is carried out by a single entity and subsequently released to the community, so that others can share it and continue the development or the knowledge. If the software is useful, other members make use of it. The circle of the Open Source paradigm is completed only when the software solves the problem and proves useful to others.

A good example of the Open Source Paradigm in use is the Apache Web Server, which was built by a group of people who needed to solve their own web server problems. The group kept in touch by email and worked separately, but they were determined to work together in a coordinated way, and their first release finally came in April 1995.

Furthermore, the Open Source paradigm promotes important economic benefits. For instance, Open Source allows better allocation of resources, it can spread the risk and cost related to projects, and it gives control to users, allowing them to customize the software according to their needs.

In summary, we can say that Open Source encourages people to work together toward a specific goal and fosters the desire to create solutions, promoting innovation and software development.

References:

1.-Open Source Initiative [n.d.] Open Source Initiative [online]

Available from: http://opensource.org/osd [Accessed 05 November 2013]

2.-Bruce Perens (2005) The Emerging Economic Paradigm of Open Source [online] George Washington University. Available from: http://perens.com/works/articles/Economic.html [Accessed 12 November 2013]

What to study in Computer Science?

After looking last week for a reasonable definition of Computer Science and whether it can be considered a discipline, this week I wanted to focus on the different approaches to studying the field. Initially I tried to do this with the help of an introductory book: Computer Science: An Overview by Brookshear. I found that Brookshear mostly explains the different aspects of computers that can be studied, so I also looked at two other texts. Still, approaches in Computer Science do not seem to be as clearly separated as in Psychology. However, at the end of this post I will look at some approaches.

Brookshear starts his book by explaining that algorithms are the most fundamental aspect of Computer Science. He defines an algorithm as “a set of steps that defines how a task is performed.” Algorithms are represented in a program, and creating these programs is called programming (Brookshear, 2007: p. 18). Brookshear explains later in his book that “programs for modern computers consist of sequences of instructions that are encoded as numeric digits” (Brookshear, 2007: p. 268).

Fundamental to understanding problems in Computer Science is seeing how data is stored in computers. Computers store data as 0s and 1s, which are called bits. A 0 represents false, while a 1 represents true. According to Brookshear, operations that manipulate these true/false values are called Boolean operations (Brookshear, 2007: p. 36). Computers do not just store data, they also manipulate it. The circuitry in the computer that does this is called the central processing unit (Brookshear, 2007: p. 96). Besides being manipulated, data can also be transferred from machine to machine. This is possible when computers are connected in networks (Brookshear, 2007: p. 164). The Internet is a famous example of an enormous global network of interconnected computers.
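To make the idea of bits and Boolean operations a little more concrete, here is a minimal Python sketch. It is my own illustration rather than anything taken from Brookshear: the helper name `bits_of` and the two example bit patterns are assumptions chosen purely for demonstration.

```python
# Illustrative only: the helper name and the example values are assumptions.

def bits_of(number: int, width: int = 8) -> str:
    """Return the bit pattern of a non-negative integer, padded to `width` bits."""
    return format(number, f"0{width}b")

# Two small bit patterns; each 0 stands for false and each 1 for true.
a = 0b1100
b = 0b1010

and_result = a & b   # a bit is 1 only where both inputs are 1
or_result = a | b    # a bit is 1 where at least one input is 1
xor_result = a ^ b   # a bit is 1 where exactly one input is 1

print(bits_of(a, 4), "AND", bits_of(b, 4), "=", bits_of(and_result, 4))
print(bits_of(a, 4), "OR ", bits_of(b, 4), "=", bits_of(or_result, 4))
print(bits_of(a, 4), "XOR", bits_of(b, 4), "=", bits_of(xor_result, 4))

# The same storage scheme holds ordinary data, e.g. the number 65 in one byte.
print(bits_of(65))
```

Each operation is applied position by position, so the results can be read as four separate true/false decisions made at once.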

Computer Science has several subfields that are seen as separate studies. An example of this is software engineering. Brookshear claims that it “is the branch of computer science that seeks principles to guide development of large, complex software systems” (Brookshear, 2007: p. 328). Another example is Artificial Intelligence (AI). Brookshear explains that the field of AI tries to build autonomous machines that can execute complex tasks, without the intervention of a human being. These machines therefore have to “perceive and reason” (Brookshear, 2007: p. 452).

Tedre gives a couple of examples of other subfields in Computer Science, but does not say that those subfields are the only or even the most important ones. He lists complexity theory, usability, the psychology of programming, management information systems, virtual reality and architectural design. Tedre argues that there should be an overarching set of rules for research in these fields. However, later he also argues that computer scientists often need to use approaches and methods from different fields, because this is the only way in which they can deal with the number of topics in Computer Science (Tedre, 2007: p. 107, 108). Computer scientists thus seem to face the difficult task of incorporating many different fields, while at the same time needing to learn how to use a similar set of rules to study these fields.

Denning et al. define the clearest set of subfields in Computer Science: algorithms and data structures, programming languages, architecture, numerical and symbolic computation, operating systems, software methodology and engineering, databases and information retrieval, artificial intelligence and robotics, and finally human-computer communication. They justify their selection on the grounds that every one of those subfields has “an underlying unity of subject matter, a substantial theoretical component, significant abstractions, and substantial design and implementation issues” (Denning et al., 1989: p. 16, 17).

Tedre argues, based upon the research of Denning et al., that there have been three ‘lucid’ traditions in Computer Science: the theoretical tradition, empirical tradition and engineering tradition. He argues that because of the adoption of these different traditions, the discipline of Computer Science might have ontological, epistemological and methodological confusion (Tedre, 2007: p. 107).

In conclusion, it is not easy to present a concise image of Computer Science. After having difficulties last week in finding one proper definition of Computer Science, this week I had problems finding comprehensive accounts of how Computer Science is studied. Next time, I will look at how Psychology and Computer Science can be used to study online surveillance. Later, I will also look at how the two disciplines overlap.

Sources

Brookshear, J. Glenn. Computer Science. An Overview. Ninth Edition. Harlow: Pearson Education Limited, 2007.

Denning, P. et al. “Computing as a discipline”. Communications of the ACM 32(1), 1989: p. 9-23.

Tedre, Matti. “Know Your Discipline: Teaching the Philosophy of Computer Science”. Journal of Information Technology Education 6(1), 2007: p. 105-122.

Web Doomsday: How Realistic Is It?

Despite our modern-day dependency on services offered through the web, few of us have given thought to what the consequences would be if the web were to disappear. It might simply seem unlikely, and not worth planning for. However, there are many potential causes, intentional or not, of a widespread loss of access to the web. I will outline some of these in this post and will argue that such a loss is realistic and that we as a society should be prepared.

The technical infrastructure required to deliver the web to our fingertips is huge, and the larger a system, the more points of failure it has. Because the system is stretched over a large geographic area that includes climate extremes, natural disaster is a large risk. Storms, earthquakes and erosion can easily break vital equipment. Such a large system also leaves scope for technical failures. The Northeast United States blackout of 2003 left 55 million people without power, for many as long as two days. This was caused in part by a software bug.

Much of the internet infrastructure was designed before there was significant demand for the web. Technical limitations have already affected the performance of the web. The central pool of unallocated IPv4 addresses (which every device needs in order to connect to the internet) ran out in 2011, and ISPs have been slow to adopt IPv6 to solve the problem. The technical infrastructure may also be prone to attack, whether through cyber warfare (e.g., military assault) or malicious intent (e.g., hacking commercial infrastructure).
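To give a sense of scale for the address shortage, the short Python sketch below compares the total number of addresses that IPv4 and IPv6 can express, using the standard library's `ipaddress` module. It only counts the theoretical address space; it assumes nothing about how many addresses are reserved or actually usable, and the variable names are my own.

```python
import ipaddress

# The "whole internet" network in each protocol version.
ipv4_total = ipaddress.ip_network("0.0.0.0/0").num_addresses   # 32-bit addresses
ipv6_total = ipaddress.ip_network("::/0").num_addresses        # 128-bit addresses

print(f"IPv4 addresses: {ipv4_total:,}")
print(f"IPv6 addresses: {ipv6_total:.3e}")

# How many complete IPv4 address spaces would fit inside IPv6.
ipv4_spaces_in_ipv6 = ipv6_total // ipv4_total
print(f"IPv4-sized spaces inside IPv6: {ipv4_spaces_in_ipv6:.3e}")
```

With only around four billion IPv4 addresses available in total, it is easy to see why a world of phones, laptops and servers would exhaust the pool.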

There may be political and commercial motivations behind changing the way we can access the web. The Great Firewall of China prevents people in China from accessing a huge portion of the web. Commercial motivations include ISPs prioritising particular services (e.g., Comcast’s proposals to degrade high-bandwidth services such as Netflix), or companies choosing to change or discontinue services (e.g., Google withdrawing Reader).

Whether it be storing our photos with a cloud service or becoming reliant on a social network for communicating with friends, depending solely on these presents a risk to ourselves, in that loss of the web or these services will potentially be detrimental to our lives. The next post will be a case study into the Northeast blackout of 2003, in which a huge power cut led to widespread panic, and will consider the societal and economic impact of the event.

Basics of economics

Fundamentals of economics

Looking at some early work on the subject, Adam Smith (the man with the invisible hand fetish … ‘it wasn’t me, it was my invisible hand…’) famously defined economics as “an inquiry into the nature and causes of the wealth of nations” (1776), whilst Alfred Marshall painted an attractively casual picture of the “study of mankind in the ordinary business of life” (1890). Robbins (1932) is credited with a classic definition:

“Economics is the science which studies human behavior as a relationship between given ends and scarce means which have alternative uses.”

This does seem to capture the fundamental economic preoccupations with wants, choice, and scarcity quite well, but leaves me feeling a bit depressed about everything. Never mind … on to the fundamentals bit:

Economists place a fundamental assumption about human nature at the heart of their discipline: that human wants are unlimited, and that people are driven by the satisfaction of these wants. The fact that the world in which we live is characterised by a limited amount of resources on which humans can draw means that humanity must compete for resources in conditions of scarcity. Scarcity is defined as “the excess of human wants over what can actually be produced to fulfil these wants” (Sloman, 2009:5). The resources for which we can compete are termed the factors of production, and are divided into three forms: labour; land and raw materials; and capital.

Economics, then, is concerned with the distribution of goods and services in these circumstances. The level of wealth among individuals or groups inevitably varies, so economics may examine how or why wealth is distributed in certain ways, or even look at methods in which different distributions of wealth might be achieved.

The interplay of the forces of supply and demand plays a major role in economic analyses, to the extent that they “lie at the very centre of economics” (Sloman, 2009:5). The constant tension between these forces is expressed (in free or market economies) via the price mechanism, which responds to changes in the relationship of supply and demand (as a result of choices made by individuals and groups in an economy). If shortages occur, prices tend to rise, whereas surpluses allow prices to fall. In an idealised model of a market, an ‘equilibrium price’ can be reached in which the forces are balanced. Various signals and incentives help the operation of the price mechanism within and between markets.
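The price mechanism can be sketched with a toy model. The Python example below assumes straight-line demand and supply curves with made-up numbers (none of these figures come from Sloman); it is only meant to show that a price below equilibrium produces a shortage, a price above it produces a surplus, and one price balances the two.

```python
# A toy linear market. All intercepts and slopes are illustrative assumptions.

def demand(price: float, intercept: float = 100.0, slope: float = 2.0) -> float:
    """Quantity demanded falls as the price rises."""
    return intercept - slope * price

def supply(price: float, intercept: float = 10.0, slope: float = 1.0) -> float:
    """Quantity supplied rises as the price rises."""
    return intercept + slope * price

def equilibrium_price(d_intercept: float = 100.0, d_slope: float = 2.0,
                      s_intercept: float = 10.0, s_slope: float = 1.0) -> float:
    """Solve d_intercept - d_slope * p = s_intercept + s_slope * p for p."""
    return (d_intercept - s_intercept) / (d_slope + s_slope)

p_star = equilibrium_price()
print("Equilibrium price:", p_star)
print("Quantity traded at equilibrium:", demand(p_star))

# Below the equilibrium price demand exceeds supply (a shortage),
# above it supply exceeds demand (a surplus).
for price in (20.0, 40.0):
    gap = demand(price) - supply(price)
    label = "shortage" if gap > 0 else "surplus"
    print(f"At price {price}: {label} of {abs(gap)} units")
```

In this sketch a shortage is the signal that pushes the price up and a surplus is the signal that pulls it down, which is the adjustment the price mechanism describes.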

The influence of shortages and surpluses on price affects consumers and producers in various ways, as the economic model of the circular flow of goods and incomes demonstrates. In this model, households (consumers of goods and services) buy goods and services from firms (producers), whilst firms buy labour, land or capital (the factors of production) from households, who might be compensated with wages, rent, or interest, for example. This ensures that incomes and goods continue to flow in the economy in the different markets which operate within it.

An important distinction in economics is between analysis of the overall processes and levels of activity of an entire economy on the one hand, and analysis of particular aspects of the economy on the other. The former is known as macroeconomics, whilst the latter is called microeconomics. Macroeconomics focuses on overall or aggregate levels of supply (output), demand (spending), and levels of growth (whether positive or negative) in an economy.

Macroeconomics examines overall supply and demand levels in order to explain or predict changes in levels of inflation, balance of trade (relationships of imports/exports), or recessions (periods of negative growth). In contrast, microeconomics might focus on specific areas of economic activity (the production of particular goods or services), production methods, and the characteristics of particular markets.

Microeconomics also examines the relationships between particular choices and the costs associated with them. Important concepts in this respect include opportunity cost, and marginal costs and benefits. When a decision is made, the opportunity cost is viewed as the value of the best alternative to the course of action actually taken – “the opportunity cost of any activity is the sacrifice made to do it” (Sloman, 2009:8). This is an important consideration as it helps to determine the implications of economic decisions which need to be made. Economists assume that individuals make choices according to rational self-interest – making a decision based on an evaluation of the costs and benefits. This involves attention to the marginal costs or benefits of a decision – the advantages or disadvantages of increasing or decreasing levels of certain activities (rather than just deciding to do or not do something – the total costs/benefits).
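The difference between total and marginal costs and benefits is easier to see with numbers. The Python sketch below uses an invented table of total benefits and total costs for doing 0 to 5 units of some activity (the figures are purely hypothetical) and keeps adding units only while the extra benefit of the next unit exceeds its extra cost.

```python
# Hypothetical totals for doing 0, 1, 2, 3, 4 or 5 units of an activity.
total_benefit = [0, 10, 18, 24, 28, 30]
total_cost = [0, 3, 7, 12, 18, 25]

def marginal(totals: list) -> list:
    """Change in the total caused by each additional unit."""
    return [later - earlier for earlier, later in zip(totals, totals[1:])]

def best_level(marginal_benefit: list, marginal_cost: list) -> int:
    """Add units while the next unit's benefit is greater than its cost."""
    level = 0
    for extra_benefit, extra_cost in zip(marginal_benefit, marginal_cost):
        if extra_benefit <= extra_cost:
            break
        level += 1
    return level

marginal_benefit = marginal(total_benefit)
marginal_cost = marginal(total_cost)
chosen_level = best_level(marginal_benefit, marginal_cost)

print("Marginal benefits:", marginal_benefit)
print("Marginal costs:   ", marginal_cost)
print("Units chosen:", chosen_level)
print("Net benefit at that level:",
      total_benefit[chosen_level] - total_cost[chosen_level])
```

With these particular figures, stopping where the marginal benefit no longer exceeds the marginal cost is also the level with the largest total net benefit, which is why the marginal comparison is treated as the rational decision rule.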

As human understanding of the complex nature of the world and our activities within it has increased, economists have been forced to broaden the scope of their analysis to include certain social and environmental consequences of economic decision-making. Decisions which satisfy rational self-interest for individuals, groups or firms may nevertheless lead to outcomes such as pollution or extreme inequality which themselves have wider consequences for society and the economy as a whole (whether nationally or globally). Indeed, Sloman (2009:24) states that “[u]nbridled market forces can result in severe problems for individuals, society and the environment”. Growing recognition of these potential problems may help explain contemporary concern with sustainability, the role of government regulation of industry, and the creation of the concept of ‘corporate social responsibility’. As such, economists are asked to take account of the economic and social goals of a society (as expressed through its government), and therefore to contribute their knowledge to help policy makers work toward these goals.

References

Library of Economics and Liberty (2012) What is economics? Available from: http://www.econlib.org/library/Topics/College/whatiseconomics.html [Accessed 10th November, 2013]

Sloman, J. (2009) Economics. 7th ed. Harlow: Pearson

Sloman, J. (2007) Essentials of Economics. 4th ed. Harlow: Pearson

Whitehead, G. (1992) Economics. Oxford: Heinemann

An Introduction & Initial Overview of Philosophy


Philosophy is centrally a consideration of logical argument and reasoning, set against wider questions about every aspect of the universe. Philosophy is often misinterpreted as a loose collection of outlooks on life. Actually, it is about testing arguments to exhaustion in order to validate stances or viewpoints. An example of this is to consider the statement that ‘murder is wrong’. Philosophy helps us to explore the notion of wrongness, considering why we define murder as wrong and whether, by critical counter-argument, it could be right. It also asks us to consider alternative structures to the universe where, for example, murder may be considered right. Furthermore, it asks us to consider the idea of justification: who is to say what is and is not right or wrong? Likewise, can we apply the idea of universal principles to any situation and therefore form absolutes? As such, you could say that Philosophy is the search for absolutes in unending, open-ended questions: a search for specifics.

Socrates, Plato, Nietzsche, Hume, Descartes and Sartre are all noted Philosophers; each contributed stances that have shaped schools of thought within the subject. Sartre is perhaps one of the lesser-known key philosophers in terms of his contributions to the field. A notable example is his bridging of Philosophy and Literature, a good illustration of the intrinsically inter-disciplinary nature of the subject; Philosophy is not so much a single discipline as a collection of different ways of thinking that consider a variety of options and inter-relate with one another.

Sartre’s novel ‘Nausea’ is a key example of an inter-disciplinary text. It explores the philosophical stance of existentialism, with its questions about the nature of existence and our purpose within it, and it is also a work of psychological literature that explores the barriers faced by individuals in a city novel, allowing readers to relate the story to their own lives. Through the novel Sartre offers an insight into how such influences create angst in the individual, the weight of the world gradually bearing down on them due to a variety of contextual factors unique to the city and the individual. Through this he develops the idea of existential angst, opening up a range of existentially themed emotions that relate to common fears and feelings about being fundamentally alone in the universe.

Such angst arises from the negative feelings and setbacks that are part of the experience of human freedom and responsibility. As a result, the novel holds up a mirror to ourselves as we read it: the protagonist’s slip into depression, self-centred obsession and eventual near-insanity lets us reflect on the barriers in our own everyday lives that lead us to think negatively about our existence. It also acts as a cautionary tale, shaping our consideration of how we should act and why. This is not so different from my initial example about how we define the notion of wrongness and murder: the justifications for our own decisions in life affect what we consider to be acceptable and influence our motivations, actions and responses as a result.

There are several key themes in Philosophy that are explored as questions of focus: God, Right and Wrong, The External World, Science, Mind, Art and Knowledge. These are commonly integrated into specific schools of thought and summarised in a structured order that shapes the discipline itself; many are, in fact, interconnected rather than singular subjects in their own right, and each can be loosely grouped under commonly related topics such as Reality, Value and Knowledge. In my next blog, I will consider more about the structure of Philosophy and the arguments relevant to the topic explored for this report.

References:

1. Sartre, J.P, (1964). Nausea. New York: New Directions.

2. Warburton, N. (2012). Philosophy: The Basics. London: Routledge.

Are Physical Geographers concerned with the Digital Divide?

For this week’s reading, I have focused on Physical Geography. I have attempted to learn about the key concerns and research angles Physical Geographers focus on, and to identify what these might mean for how they would consider, and possibly approach, the Digital Divide. Initially this may sound bizarre: why would Physical Geography be relevant to an essay on the Digital Divide? Hopefully by the end of this blog post you will have a better understanding of why I have selected this subject, and a clearer picture of my focus for this essay in terms of Physical Geography and the Digital Divide.

Physical Geographers are concerned with:

1) Understanding the world better; how processes have become how they are; testing and refining theories related to these processes (Example processes include tectonic activity, climate change and the biosphere).

2) Understanding the effect we (humans) have on the environment from living in it and drawing the natural resources from it.

3) Predicting future environmental change, as well as measuring and monitoring these changes.

4) Understanding how to manage and cope with the Earth’s systems and their changes.

Geographers study the Earth in two periods of time: the Pleistocene and the Holocene. The Holocene, from 11,700 years ago to the present, is a significant period in which humans have colonised the globe, forming and building upon new relationships with the environment, for example through agriculture and deforestation. Humans have taken control of plants and animals (genetic engineering and domestication), and over the years farming communities have grown rapidly. Agriculture, particularly farming, has enabled technological innovation to take place: the more farming communities developed, the more food they could produce and provide for other societies that have little or no food production and instead focus on developing technology. However, this has enabled humans to shape and transform the planet further and has brought consequences including soil erosion and impoverishment.

Deforestation affects the ecosystem; it releases more carbon dioxide (CO2), a greenhouse gas, into the atmosphere. CO2 is a significant factor in global warming, affecting the temperature of the Earth, and concentrations are currently higher than in the past. This climate change is a great concern and research focus for many Physical Geographers. Studies demonstrate that humans are contributing greatly to global warming; for example, burning fossil fuels and biomass interferes with the global carbon cycle. This is evidenced by global climate models, which show signs of global warming when the anthropogenic production of greenhouse gases is included.

Developing countries make up five-sixths of the human population, and their populations will keep increasing. This will result in more fossil fuels being burned. Even if the richer countries stabilise and decrease their dependency on fossil fuels, it will not necessarily be enough to protect the environment. However, if renewable resources are adopted and promoted by the developing countries, development may have a less damaging impact on the environment.

Physical Geographers believe the future depends on social, political and economic development, but predicting these impacts is difficult.

This research has left me pondering on the following points:

1) Will improving the digital divide only encourage humans to continue current processes that affect the environment, such as further deforestation to create more urban areas and further fossil fuel burning to power the technologies and functions that are deemed to better living standards?

2) Do physical geographers actually want the digital divide to vanish, or at least not until it is known that it can be bridged without severely affecting the environment? The newly industrialised countries (NICs) India and China show the risk: they are currently globalising at a great rate and without consideration for their emissions, which are greatly impacting the environment.

3) Must the impact on the environment get worse before the digital divide can get better? But then will it be too late to save our planet?!

4) Will closing the Digital Divide increase poverty instead of reducing it? Will it become a vicious circle in which developing countries try to develop, urbanise and become more technology-based, so that food, a necessity for living, becomes a struggle because fewer countries produce it? And as the environment is also affected, will the farming communities still in existence find it harder to farm, so that everyone who relies on food produced by others is unable to improve or maintain their quality of life, and poverty increases?

Economics 2 – Disciplinary Approach, the Big Theories

Researcher: Jo Munson

Title: Can there ever be a “Cohesive Global Web”?

Disciplines: Economics, Ethnography (Cultural Anthropology)

John Maynard Keynes, revolutionary Economist and inventor of “Keynesian Economics”

Major Economic theories

Recall our second definition of Economics, which highlighted the concept and importance of choice – where our desires may be infinite, but the availability of resources is finite:

[Economics is] the study of how people choose to use (scarce) resources.

This concept leads to one of the fundamental ideas of Economics, known as the “Economic Problem”. The Economic Problem arises precisely because there are finite resources in any economy. Choices therefore have to be made.

The problem with choosing any one course of action is that the benefits you could have received by taking an alternative action are forgone. This is known as the “opportunity cost” of an action. If you knew what the outcome of each possible action would be, it would be easy to minimise the “opportunity cost”, but this is rare in practice.
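
As a minimal sketch of this idea, assume every option can be reduced to a single known payoff (which, as noted above, is rare in practice). The opportunity cost of a choice can then be taken as the value of the best alternative given up. The function names, option names and payoff figures below are hypothetical and purely illustrative.

```python
def opportunity_cost(payoffs, chosen):
    """Return the payoff of the best alternative forgone by picking `chosen`."""
    if chosen not in payoffs:
        raise KeyError(f"unknown option: {chosen}")
    # Every option other than the chosen one is a forgone alternative.
    alternatives = [value for option, value in payoffs.items() if option != chosen]
    if not alternatives:
        raise ValueError("at least one alternative is needed")
    return max(alternatives)


def best_choice(payoffs):
    """Return the option with the highest payoff, which minimises opportunity cost."""
    return max(payoffs, key=payoffs.get)


# Hypothetical payoffs for three uses of the same scarce plot of land.
payoffs = {"wheat": 100.0, "maize": 120.0, "barley": 90.0}

print(opportunity_cost(payoffs, "wheat"))  # best forgone alternative is maize: 120.0
print(best_choice(payoffs))                # maize
```

With perfect knowledge of the payoffs the best choice is trivial to find; the difficulty in real economies is that the payoffs are uncertain.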

The challenge of any economy is to minimise the opportunity cost and make the best use of the scarce resources available to it. Nobel Prize-winning American Economist Paul Samuelson suggested that an economy should seek the optimum answers to the following questions:

- What to produce?

- How to produce?

- For whom to produce?

How economies approach these questions and how firms and individuals behave has been debated by Economists since the inception of the Discipline. Some of the key theories / theorists are outlined below:

- Adam Smith’s Invisible Hand – In the 1770s, Adam Smith proposed the idea that economies function best when markets are left to make their own choices about how to allocate resources. This has come to be known as the ‘Free Market’. Smith argued that markets will naturally correct any imbalances (as if guided by an Invisible Hand) and supply will necessarily cater to demand. The Free Market Economy is in direct contrast with the concept of a ‘Command Economy’, where governments choose how resources are allocated within the marketplace.

- Marxian Economics – Karl Marx was less optimistic about the market’s ability to self-govern, believing that workers in a Free Market were not compensated for the full value of the goods they produced, but only for their labour. The surplus value would then be creamed off by the employer whilst the labourer was left with just enough to survive. Marx indicated that if a worker was forever trapped in this cycle it “would make him at once the lifelong slave of his employer”.

- Keynesian Economics – John Maynard Keynes formulated his theories against the backdrop of the ‘Great Depression’ in the 1930s. He advocated the need for governments to intervene to lessen the duration and negative effects of the economic cycles that are inevitable in a Free Market. Keynes believed governments should control their spending so that during periods of economic growth, taxes are increased, welfare spending is decreased and the cost of borrowing money (interest rates) increases. When the economy then enters recession, the government has the ability to lower taxes and interest rates and increase welfare spending in order to stimulate a faster economic recovery. Keynesian ideas formed the basis of Macroeconomics.

There are numerous other schools of thought in Economics, but these three form a good basis from which to work. Next I will look at how these theories are applied in Economic research.

Next time (and beyond)…

I’ve had a quick reshuffle of the order, but broadly, I will be covering the following in the coming weeks:

- Can there ever be a “cohesive global web”?

- Ethnography 1 – Introduction & Definition

- Ethnography 2 – Disciplinary Approach

- Economics 1 – Introduction & Definition

- Economics 2 – Disciplinary Approach, the Big Theories

- Ethnography 3 – Methodologies & Analysis

- Economics 3 – Models & Methodologies

- Ethnographic Approach to the “Cohesive Global Web”

- Economic Approach to the “Cohesive Global Web”

- Ethno-Economic Approach to the “Cohesive Global Web”

Sources

Gillespie, A. 2007. Foundations of economics. Oxford: Oxford University Press.

Wikipedia. 2013. Economics. [online] Available at: http://en.wikipedia.org/wiki/Economics [Accessed: 31 Oct 2013].

Image retrieved from: http://www.pbs.org/wnet/need-to-know/tag/john-maynard-keynes/

Intertwingularity: Schools of Literary Theory

In short: “We all disagree”

Following on from my last post, here I outline some of the better-known schools of thought in Literary Theory – or more precisely Western Literary Theory. This is a diverse eco-system of different schools of thought, often created by rejection of contemporary views, or by dividing off within existing schools. As little in common exists outside the individual schools of thought, these discrete loci of theory provide the real ontology and epistemology of this discipline. It also shows why authors struggle to provide an overview of the discipline, given the fragmentation. In looking at schools of thought, notice that Literary Theory and Literary Criticism are deeply intertwined.

Romanticism (Late 18C to c.1850) and Aestheticism (19C – Romantic Period)

This movement represents both a revolt against the social and political norms of an aristocratically directed society and the emerging rationalist scientific approach towards Nature. The aesthetic experience was linked to strong emotion and valued spontaneity, looking to engage the power of imagination. Clearly liberalist in approach, unsurprisingly it was linked to political radicalism and nationalism, feeding into the unification movements of Europe at the time. Jumping forward to the present era there are some contemporary voices, such as Harold Bloom, echoing this movement by protesting against the modern mode of political and social ideologies being projected back onto literature and thus obscuring its aesthetics.

(American) Pragmatism (Late 19C)

Pragmatism emerged as a school of thought in the United States around 1870. For pragmatists, thought is a functional tool for action, problem solving and prediction. Thus the meaning of an idea or a proposition lies in its observable practical consequences rather than in its representative accuracy.

Formalism, New Criticism, Russian Formalism (1930s-present)

Formalism looks at the structural purpose of a text, setting aside outside influences. It rejects, or sets aside during analysis, the notion of cultural and societal influences. It looks at the ‘literariness’ of the text, the verbal/linguistic strategies used to make it literature. It is in part a reaction to the preceding Romanticism, seeking to take a deliberately different viewpoint.

Russian Formalism started in St Petersburg in 1916 but almost immediately fell foul of the ideals of new Soviet Communism, although its ideas seeded later schools of thought.

Meanwhile in the United States, Anglo-American ‘New Criticism’ emphasised ‘close reading’ (sustained interpretation of brief texts) to see how literary items function as aesthetic objects. This process of close reading has since been inherited by many later schools of Literary Theory and Criticism.

Phenomenological Criticism (early 20C)

Whilst more closely linked to Literary Criticism than pure Theory, the Phenomenological approach has influenced later schools of thought. It avoids ontology and epistemology, concentrating on a meaning residing in the consciousness within which the work resides. This is to understand its experience rather than to explain its structure – an existential interpretation. Notable in this area are Martin Heidegger, Jean-Paul Sartre and the study of existentialism.

Structuralism/Semiotics (1920s-present)

Structuralism’s roots lie in the early 20C with the writings of Ferdinand de Saussure (who laid the foundations of semiotics) and the linguistic circles of Prague and Moscow at that time. However, it later gained momentum in the 1950s-60s as an intellectual movement in France. It argues that literature can be understood by means of a structure distinct from the ideas within the literature. It uses the language of semiotics (‘signs’) for this structured analysis of texts. Indeed, although Structuralism and Semiotics are different studies they are, to the general observer, close enough to consider as facets of the same thing. Both sit in counterpoint to the Existential/Phenomenological approach.

Post-Structuralism/Deconstruction (1966-present)

As structuralism became more formalised, people found reason to distance themselves from it. One reason was that Structuralist works didn’t conform to the idea of structuralism. Because theories interact with the things they describe, post-structuralists maintain that it is impossible to describe a semiotic system completely, as the described subject is ever-changing. Theorists such as Lacan, Barthes and Foucault are commonly identified as post-structuralists.

Deconstruction (1980s-present)

Deconstruction takes the latter further by decrying the Western social constructs surrounding much of extant theory. Even in description, it makes the concepts above look easy and approachable. The best, accessible, short summary I’ve seen is in the Merriam-Webster Dictionary: “a theory used in the study of literature or philosophy which says that a piece of writing does not have just one meaning and that the meaning depends on the reader” [1]. Most descriptions are recursively obscure, though seemingly part of Deconstruction is to be inaccessible to the casual reader.

Reader-Response Criticism (1960s-present)

This school takes the reader of the work as the focus of analysis. Within this context, some focus on the individual reader’s experience (‘individualists’), others assume a generality of response across all readers (‘uniformists’) and a third group prefer to abstract and look at defined sets of readers (‘experimenters’).

Psychoanalytic Criticism, Jungian Criticism (1930s-present)

A completely different angle of approach is to come at Literature from the perspective of Psychology, and the role of consciousness and the unconscious. In turn, this has led to derived schools like Archetypal theory and Jungian studies. In each case the school of thought clusters around a narrower focus of analysis and criticism within a Psychological viewpoint.

Politico/Social Schools of Thought

A different strand of schools of thought arose from situating the theory within a particular political or sociological context. The trajectory, as with other schools above, is to keep re-partitioning. Feminist studies led to consideration of gender and in turn to the Gay community. Then come race and colour, the role of colonialism, and further minority cultures. Following a consistent theme of rejection of the status quo, Darwinian study tries to distance itself from post-structural and post-modernist viewpoints and take into account the imperatives of evolutionary theory. Eco-criticism reflects a new awareness of the interrelationship of humans with their habitat: what ‘Nature’ is, and an examination of ‘place’. Below are just some of these schools:

- Marxist Criticism (1930s-present)

- Feminist Criticism (1960s-present)

- Gender/Queer Studies (1970s-present)

- New Historicism/Cultural Studies (1980s-present)

- Post-Colonial Criticism (1990s-present)

- Minority Discourse (1980s-present)

- Darwinian study (1990s-present)

- Eco-criticism (1990s-present)

…and so it goes on. No field is so small that the discipline doesn’t offer scope for even more tightly focused study.

Is there consensus?

Whilst the list above shows a dizzying range of approaches to Literary Theory, and some derive from broader predecessors, it is plain that there is no consistent viewpoint between the differing schools of thought. In my next post I will attempt to summarise this in the context of the overall topic – does Literary Theory have anything obvious to offer in improving the structure of hypertext?

Footnotes:

Primary Sources:

- Eagleton, Terry. Literary Theory: An Introduction. Oxford: Blackwell, 1996.

- Culler, Jonathan. Literary Theory: A Very Short Introduction. Oxford: Oxford University Press, 1997.

- Hawthorn, Jeremy. A Concise Glossary of Contemporary Literary Theory. London: Arnold, 1997.

- Webster, Roger. Studying Literary Theory: An introduction. London: Arnold, 1990. 2nd Edition 1996.

Further web references:

- A Hypertext Structuration of Literary Criticism (accessed 8 Oct 2013)

- Wikipedia – Literary Theory (accessed 4-8 Oct 2013)

- Web resource at Princeton University, with no explicit title or structured index (accessed 8 Oct 2013)

A look into politics

Author’s note: the topic for this project has undergone a slight change. After looking at several journal articles, wikis and online articles I would like to broaden the topic area from the Web being used for social change, to power relations on the Web. This will still look at social change on the Web but will also include how the government tries to control the Web.

Over the past couple of weeks I have started looking into the area of politics. How to study politics seems to be contested between different authors, resulting in many different definitions. However, politics can largely be defined as the power relationships that occur daily in social life. More strictly, the study of politics can be applied specifically to the effects that arise from governmental actors’ power over society.

The study of politics can be split into three broad approaches, each of which contains its own specific theories and methods of analysis.

Traditional scholarship

Traditional scholars generally study a specific, traditional area of politics, such as how a particular country is run or a particular type of governmental system (democracy, totalitarianism, socialism etc). These scholars study these areas by “borrowing” methods from other disciplines, namely history and philosophy.

Social science

Social scientists look at politics from a sociological point of view, focusing on how political institutions affect society as a whole, and looking for ways to improve the future of society. The methods they use to do this stem from social science and therefore can be quantitative or qualitative. In a way, social science is a practical approach, as it exists to alter the system in some way instead of just studying it, though this is perhaps to a lesser extent than the third approach.

Radical Criticism

Radical critics are very interested in the power relations that evolve within politics and largely view politics in very negative ways. Two key theories of radical criticism are:

– Marxism

This looks at politics largely in class-based terms, viewing the higher social classes as opposing the lower social classes, which Marxists view as a grave injustice.

– Feminism

This sees politics as being based on patriarchal, hierarchical assumptions, which result in women being excluded and pushed into the domestic sphere.

Politics can be useful for looking at how governmental institutions control the Web, for instance looking into issues such as online privacy (just think of the NSA), issues of copyright (recent news stories over the crackdown on download sites), and control over freedom of speech and what can be viewed online (areas such as Cameron’s pornwall and, more worryingly perhaps, China’s system). Approaches such as Marxist politics might be more interested in changing the system, and this might be specifically useful for studying social change online such as Twitter mobs, darknets etc. These are areas that I will look into in more depth.

In the next blog entry I am going to look at how politics and philosophy can complement each other in the area of political philosophy.

References

Kelly, P. ‘The Politics Book’

Tansey, S. ‘Politics the Basics’

Heywood, A. ‘Politics’

Duverger, M. ‘The Study of Politics’

Marx, K. and Engels, F. ‘Manifesto of the Communist Party’

Millett, K. ‘Sexual Politics’