First link.

My father is a mechanical engineer and a professional inventor: he offers a design to a company, and the company gives him a workshop, collaborators and a salary for the project (in exchange for the patent). He once worked at a chemical plant on a project that involved an autoclave. The autoclave had to hold a fixed temperature for a few months, so one of my father's colleagues connected its sensors to a Raspberry Pi running some SMS gateway software. Time and again my father got an SMS with the sensor readings and the phrase: “I’m getting cold! Help me!”. It usually happened in the middle of the night, so we came to think of the autoclave as a helpless and capricious child.

Second link.

A week ago I read an article about a medical experiment: scientists connected the brain of a paralyzed patient to a basic Nexus tablet, and the patient learned to control it with her brain waves and even to google. So how would we perceive this patient if we met her on a social network? Presumably we would never know about her disease, or about her half-cyber body. [http://singularityhub.com/2015/10/25/scientists-connect-brain-to-a-basic-tablet-paralyzed-patient-googles-with-ease/]. You can find more about the project here: [http://braingate2.org/index.asp]

Third link.

As people said 20 years ago: “the Internet is for porn”. Today we could say: “The Web is for bots”. They communicate with us via social media and instant messengers, and they can even run a Twitter account. When I worked at a social media monitoring agency, bots were our “big pain”. Our computational linguist wasn’t able to create a reliable algorithm to identify them in the data, because they used the words of real users. As a result, our measurements were less representative. This huge problem was mentioned in the DEMOS report “The road to representivity”, and you can find a pretty interesting paper about it here: [http://www.pnas.org/content/110/5/1600.abstract] (Caution: math!)

Fourth link.

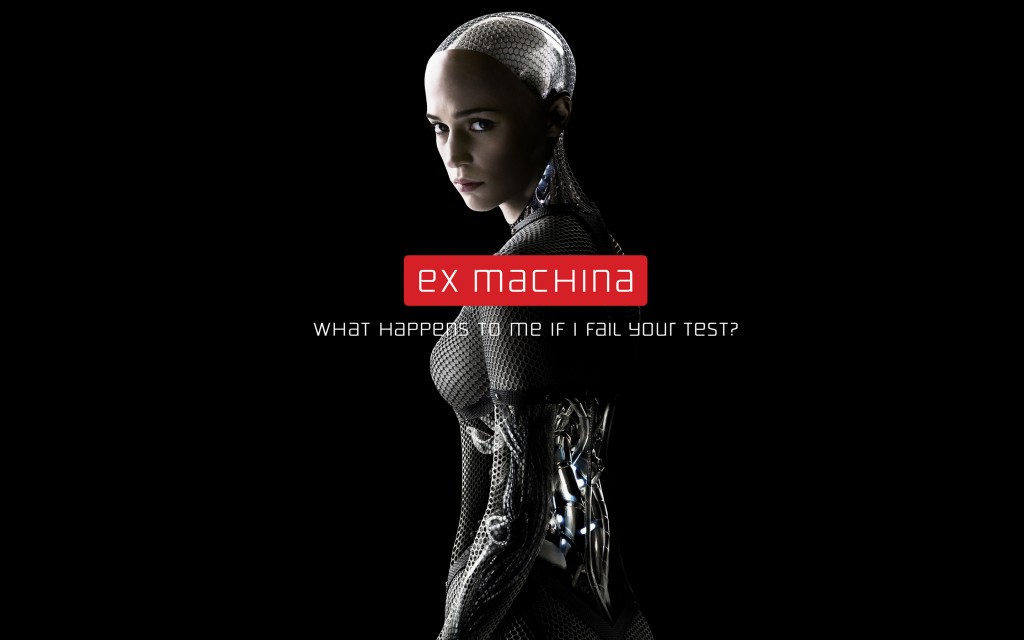

In June 2014 the chatterbot “Eugene Goostman” passed the Turing test, for the first time in human history. [https://en.wikipedia.org/wiki/Eugene_Goostman]

I introduced these examples as proof that the future from science-fiction books is already here: today non-humans and cyborgs can communicate with us, and they are part of our daily life. And I haven’t even mentioned online gaming, Wikipedia bots and many other examples.

So: how do we perceive them? Do we humanize them? How does this change our life? I think it is a question for social anthropology/psychology (and gender studies as a part of social anthropology) and computer science. Or for linguistics, computational or not? Human-computer interaction?

(Sorry for any language mistakes).

When I read the title of this post, before scrolling down to read the detail, my spontaneous reaction was, “well, it depends on whether we *know* we’re interacting with a non-human”…

And then I wondered if you thought your autoclave ‘child’ was male or female…

The examples you give are about interacting in ways other than by voice – mainly through written language. We are not at the point yet where we can make machines communicate in a human-like way by voice as convincingly as through text…

As well as computational linguistics, you could consider sociolinguistic angles to this.

There are loads of different ways you could take this and I’m afraid I haven’t managed to say anything very useful, e.g. to help you narrow down your choices of disciplines!

“well, it depends on whether we *know* we’re interacting with a non-human…”

This question was raised by Alan Turing in 1950: “Are there imaginable digital computers which would do well in the imitation game?”, and it is the fundamental question of the Turing Test: can we know with whom we are speaking, a machine or a person? And, as you can see, last year a bot passed the Turing Test: the jury decided that they were speaking with a human. It is

“I wondered if you thought your autoclave ‘child’ was male or female”

That is an important point here: neither babies nor non-humans have a gender.

“The examples you give are about interacting in ways other than by voice – mainly through written language”

Yes, because I chose examples of communication through the Web, and the Web is still mostly text-oriented. Thank you, I should mention that.

Thank you for your comment!

“well, it depends on whether we *know* we’re interacting with a non-human…”

I suppose I was also thinking of it in terms of whether the situation/service/bot makes it clear that they are not human. In the example of your dad’s autoclave, you all knew it was not really a human and it was not trying to convince you that it was, as in the Goostman example. Do we react differently if we are told we are interacting with a machine? Or is it all in the language? Was it the very human-like way the autoclave cried for help that made you think of it as a child – was it all in the language? If the message had read, “Temp has dropped to …. Please take action,” maybe you wouldn’t have reacted so emotionally!

Yes, of course, it was a good joke by my father’s colleague to make the message more “human-like”. And we never saw the autoclave itself, just as we never see the source of the messages in the other examples. So it is a question of interface.

Yes, those were my questions too. Or, to be clearer: “Do we tend to anthropomorphize non-humans or not, and what are the reasons for it? What matters most for our emotional perception?”.

There are a bunch of papers about this in the field of robotics, but most of them focus on the appearance of the robot, like the “Uncanny valley” studies.