The University of Leeds is building a new digital library based on EPrints, and began its investigation by using the Watch Folder produced by the DepositMO project, reporting progress on the use of this tool as a repository partner of DepositMOre. This was an exciting development, and although the Watch Folder was subsequently displaced by a commercial application for workflow reasons at Leeds, it demonstrates how the deposit and workflow issues addressed by this project tool have risen up the agenda.

John Salter, software developer for the Digital Library at Leeds, answers our questions about the impact of the Watch Folder on this work, and about how the approach to content deposit that it exemplifies has influenced the Digital Library project at Leeds.

Which tool did you use and why?

At Leeds we were setting up a new Digitisation Studio and Digitisation Service, and alongside them a new Digital Library based on the EPrints platform. The Studio is tasked with digitising content from our Special Collections (books, slides, manuscripts and other unique resources, such as our theses) and the University Gallery, as well as paid-for requests from both inside and outside the University. It will produce a large number of digital objects, mostly held in folders that each represent the object being digitised; e.g. the TIFFs of the individual pages of a manuscript would be held in one directory.
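Since each object arrives as a directory of page images, a bulk deposit tool can treat each directory as one repository item. The Perl sketch below (Perl being the language of the Watch Folder script) shows that grouping step under some assumptions: one directory per object, page files ending in `.tif` or `.tiff`, and page order given by filename. `group_tiffs_by_object` is a hypothetical helper, not part of the Watch Folder script.

```perl
use strict;
use warnings;
use File::Find;
use File::Basename qw(dirname);

# Hypothetical helper: walk a Studio work area and group TIFF files by the
# directory that holds them, assuming each directory is one digital object.
sub group_tiffs_by_object {
    my ($root) = @_;
    my %objects;    # directory path => [ TIFF paths in that directory ]
    find(
        {
            no_chdir => 1,    # keep $File::Find::name as a full path
            wanted   => sub {
                my $path = $File::Find::name;
                return unless -f $path;                # skip directories
                return unless $path =~ /\.tiff?\z/i;   # .tif / .tiff, any case
                push @{ $objects{ dirname($path) } }, $path;
            },
        },
        $root
    );
    # Sort each object's pages by filename so they deposit in a stable order
    @$_ = sort @$_ for values %objects;
    return \%objects;
}
```

Each key of the returned hash is one candidate item, and its value is the ordered list of pages to attach to it.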

For this ‘bulk’ style work, the Watch Folder script seemed like a good place to start, but…

The digitisation landscape at Leeds is an evolving beast. During the period of the DepositMOre project we have purchased another piece of software: KE EMu**. This application will be the canonical source of object metadata: title; creator; copyright; physical preservation (and anything in between), and also manage the incoming digitisation requests and workflow for the Studio. Consequently our process for adding the digital objects to the repository is changing from an almost linear:

Request -> Digitise -> Enhance (e.g. crop) -> Save -> Deposit -> Catalogue

to something more like:

Request -> Digitise -> Enhance -> Save -> Catalogue (including checking copyright) -> Deposit

It is important to note that some of the material we digitise cannot be made Open Access, so we need to be stringent in checking what rights we have to disseminate it. Some content is not for public release at all; for other content, small thumbnails may be made available, with full copies available by request only.
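These rules amount to a small policy table consulted at deposit time. A minimal Perl sketch, assuming three illustrative rights statuses (`open`, `thumbnail_only`, `restricted`) recorded during cataloguing. The status names and `public_files` are hypothetical, not KE EMu or EPrints fields.

```perl
use strict;
use warnings;

# Hypothetical mapping from a rights status (set at cataloguing time) to
# what the Digital Library may expose publicly. Status names are illustrative.
my %DISSEMINATION = (
    open           => { full => 1, thumbnail => 1 },   # Open Access
    thumbnail_only => { full => 0, thumbnail => 1 },   # full copy by request only
    restricted     => { full => 0, thumbnail => 0 },   # not for public release
);

# Return the files that may be made public for an item.
sub public_files {
    my ($rights, $master, $thumb) = @_;
    # Fail closed: a missing or unknown status is treated as restricted
    my $policy = $DISSEMINATION{ $rights // '' } // $DISSEMINATION{restricted};
    my @files;
    push @files, $thumb  if $policy->{thumbnail};
    push @files, $master if $policy->{full};
    return @files;
}
```

Falling back to `restricted` for an unknown status means a skipped rights check withholds content rather than exposing it.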

The Digitisation Studio is still answering many questions:

- what is the *best* format in which to save things for dissemination?
- what should we store?
  - RAW files from the camera
  - software-specific versions (e.g. CaptureOne) created before the TIFF
  - any number of intermediate cropped/colour-corrected versions
  - the ‘master’ TIFF (preservation copy)
  - processed versions (thumbnails)
- how should we manage the process from request to delivery?

and many more. The answers to these questions determine how we design the automated deposit process for items we have created.

Our storage configuration also means that the EPrints server can see the Studio work area directly, which makes the HTTP transport layer less attractive as a means of moving items into the repository. If the deposit-by-reference scenario discussed on the SWORD blog is resolved, it would make our situation more SWORD (and Watch Folder) friendly.

What are the benefits for your repository of using the tool?

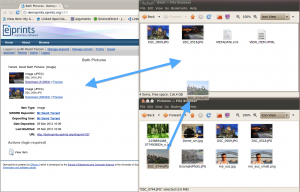

Using the tool would allow new items created by the Studio to be loaded into the EPrints server automatically. The metadata for the items could then be added by staff in a cataloguing role rather than by the digitisation staff.

The Watch Folder script could still come in useful for other ingestions. The Digital Library is not just a platform for items that the Library holds. It is a place for the digital content of the University. As we develop the collections we hold, we may well find pockets of data that lend themselves to the Watch Folder process. We may also find people who would find the Word Add-in useful.

What difficulties were encountered in using the tool, and how were these resolved?

The Watch Folder script doesn’t contain much in the way of comments or debug information. I had to pick it apart to see what it was doing, and where and how it was failing. A few assumptions in the code, combined with the lack of documentation, made it difficult to run out of the box, e.g.

my $file = $dir . "CONFIG";

If $dir is specified without a trailing slash, e.g. C:\folder, the script reads its configuration from the file C:\folderCONFIG instead of C:\folder\CONFIG.
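One way to remove the trailing-slash assumption is to let Perl's core File::Spec module build the path, since `catfile` adds a separator only where one is needed. A minimal sketch. `config_path` is a hypothetical wrapper, not a function in the Watch Folder script.

```perl
use strict;
use warnings;
use File::Spec;

# Hypothetical wrapper: build the path to the CONFIG file inside $dir.
# File::Spec->catfile inserts the platform's separator only when needed,
# so "/data/folder" and "/data/folder/" resolve to the same file.
sub config_path {
    my ($dir) = @_;
    return File::Spec->catfile($dir, "CONFIG");
}
```

The original line would then become `my $file = config_path($dir);`.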

I don’t have much experience of running Perl on Windows. The code does handle Windows platforms in some places, e.g. ‘if ($OSNAME eq "MSWin32")’, but do I need to handle the different path separators (‘\’ vs. ‘/’)?
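Perl's core File::Spec module selects a platform-specific implementation at run time, so code that builds paths through it does not need to handle `\` and `/` by hand. The platform classes can also be called directly, which makes the behaviour easy to check from any machine. A short illustration with made-up paths:

```perl
use strict;
use warnings;
use File::Spec::Win32;
use File::Spec::Unix;

# File::Spec normally picks the module matching the running platform,
# but the platform classes can be called directly to compare them.

# Windows rules: both '/' and '\' are accepted and normalised to '\'
my $win  = File::Spec::Win32->catfile('C:/folder', 'CONFIG');     # C:\folder\CONFIG
my $win2 = File::Spec::Win32->catfile('C:\\folder\\', 'CONFIG');  # C:\folder\CONFIG

# Unix rules: '/' is the separator and a trailing slash is collapsed
my $unix = File::Spec::Unix->catfile('/data/folder/', 'CONFIG');  # /data/folder/CONFIG

print "$win\n$win2\n$unix\n";
```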

The script doesn’t support https, so it sends passwords over the wire in plain text. Our digital library uses our standard usernames and passwords (via LDAP), so we need https. There was also a bug in EPrints: the edit URI sent back for an item used http, not https.
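Two small client-side guards would cover both points. A minimal Perl sketch, assuming a deposit client that knows its repository endpoint URL. `require_https` and `fix_edit_uri` are hypothetical helpers, the hostnames are placeholders, and rewriting the edit URI on the client is only a stopgap until the server returns the correct scheme.

```perl
use strict;
use warnings;

# Hypothetical guard: refuse to send credentials to anything but https.
sub require_https {
    my ($url) = @_;
    die "Refusing to send credentials over plain http: $url\n"
        unless $url =~ m{\Ahttps://}i;
    return $url;
}

# Hypothetical workaround: if the repository endpoint is https but the
# server returns an http edit URI for the same host, upgrade it to https.
sub fix_edit_uri {
    my ($endpoint, $edit_uri) = @_;
    # Only rewrite when the endpoint itself is https
    my ($host) = $endpoint =~ m{\Ahttps://([^/]+)}i
        or return $edit_uri;
    # http://same-host/... -> https://same-host/... (other hosts untouched)
    $edit_uri =~ s{\Ahttp://\Q$host\E(?=/|\z)}{https://$host}i;
    return $edit_uri;
}
```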

Do you have any remaining issues about continuing use of the tool, and if so what are these?

The Watch Folder script is not friendly to non-technical users. Running something from the command line is not something our average user is used to. The Watch Folder is best suited either to someone with some technical ability, or to running centrally as a service if the files to be deposited are on a shared drive. Our standard desktop (Win7) currently has ActiveState Perl installed, including all the modules required to use the Watch Folder with an https host, so most members of staff could use it.

There should be a way to disconnect a directory from the synchronisation process in a controlled way. The use of hidden files may make this difficult.

How is, or should, the process be monitored? Currently the process results in two copies of the content: one local and one in the repository. Ideally we want a process that deposits the files, confirms that the repository copy is identical to the file sent (the script does this by MD5-hashing the file), and then removes the original (possibly leaving a ‘receipt’). However, the script pulls content from the server as well as pushing changes back to it. We are dealing with large volumes of data and wouldn’t want it all replicated locally.
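The verify-then-remove step can be sketched with Perl's core Digest::MD5 module. This sketch assumes the client already knows the MD5 the repository reports for its copy (how that is retrieved is not shown). The `.receipt` file format and both subroutine names are hypothetical.

```perl
use strict;
use warnings;
use Digest::MD5;

# Compute the hex MD5 of a local file.
sub md5_of_file {
    my ($path) = @_;
    open my $fh, '<', $path or die "Cannot open $path: $!\n";
    binmode $fh;    # TIFFs are binary; avoid newline translation on Windows
    my $hex = Digest::MD5->new->addfile($fh)->hexdigest;
    close $fh;
    return $hex;
}

# Hypothetical tidy-up step: remove the local file only if it matches the
# repository's checksum, leaving a small receipt in its place.
sub remove_if_verified {
    my ($path, $repo_md5, $repo_url) = @_;
    my $local_md5 = md5_of_file($path);
    # Keep the local file unless the repository copy is byte-identical
    return 0 unless lc($local_md5) eq lc($repo_md5);
    open my $r, '>', "$path.receipt" or die "Cannot write receipt: $!\n";
    print {$r} "deposited: $repo_url\nmd5: $local_md5\n";
    close $r or die "Cannot close receipt: $!\n";
    unlink $path or die "Cannot remove $path: $!\n";
    return 1;
}
```

Because the local file is removed only after a matching checksum, a failed or partial upload leaves the original in place.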

In EPrints, the thumbnailing process works on the whole image. For some of our content (e.g. illuminated manuscripts – initial letter is beautifully ornate, rest of page is dense text) the automatic thumbnails are not the best (in terms of visual appeal). The pull/push model could be useful for dealing with these thumbnails:

File -> Upload (push) -> Thumbnail -> Download (pull) -> Enhance thumbnails -> Upload Thumbnails

A human could review the automatic thumbnails and replace any of them with a same-sized version showing the most visually striking part of the file. This has its own complications in EPrints, because thumbnail regeneration is brutal: it removes all thumbnails and replaces them, so these special versions would be lost unless they could be identified in advance.
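If hand-made thumbnails could be flagged in advance, a wrapper around regeneration could set them aside and restore them afterwards. A minimal Perl sketch that models an item's thumbnails as a hash from size name to file. The data structure, the `manual` flag and `regenerate_keeping_manual` are hypothetical and do not reflect EPrints' internal thumbnail handling.

```perl
use strict;
use warnings;

# Hypothetical model:
#   $current    = { size => { file => ..., manual => 0|1 } }
#   $regenerate = code ref returning { size => file } for fresh automatic thumbnails
sub regenerate_keeping_manual {
    my ($current, $regenerate) = @_;
    # 1. Set aside thumbnails that a person has replaced by hand
    my %manual = map  { $_ => $current->{$_} }
                 grep { $current->{$_}{manual} } keys %$current;
    # 2. Blanket regeneration replaces every size
    my $fresh  = $regenerate->();
    my %result = map { $_ => { file => $fresh->{$_}, manual => 0 } } keys %$fresh;
    # 3. Put the hand-made versions back over the automatic ones of the same size
    @result{ keys %manual } = values %manual;
    return \%result;
}
```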

How much content has the tool delivered to your repository (pointing to some good examples)?

Currently, none: the use case at Leeds has outgrown the script. In the long term, most content in our Digital Library will be deposited automatically. Without KE EMu, the Watch Folder script would be a useful tool to achieve this.

**This is the official paragraph for KE EMu:

“KE EMu provides a central store for metadata for Special Collections, the University Art Gallery and University Archive. It will streamline our processes for accessioning new acquisitions to Special Collections, the Gallery and Archive, and thereafter cataloguing them, and helping to manage all aspects of their storage and use within the Library. EMu is compliant with several archival and gallery standards, including ISAD(G) and Spectrum. It provides APIs that will be used when depositing digitised versions into our Digital Library.”

John Salter, University of Leeds
