#WebSci20: Post-workshop report on Socio-technical AI Systems for Defence, Cybercrime and Cybersecurity (STAIDCC20) by Stuart Middleton

Posted on behalf of Stuart Middleton

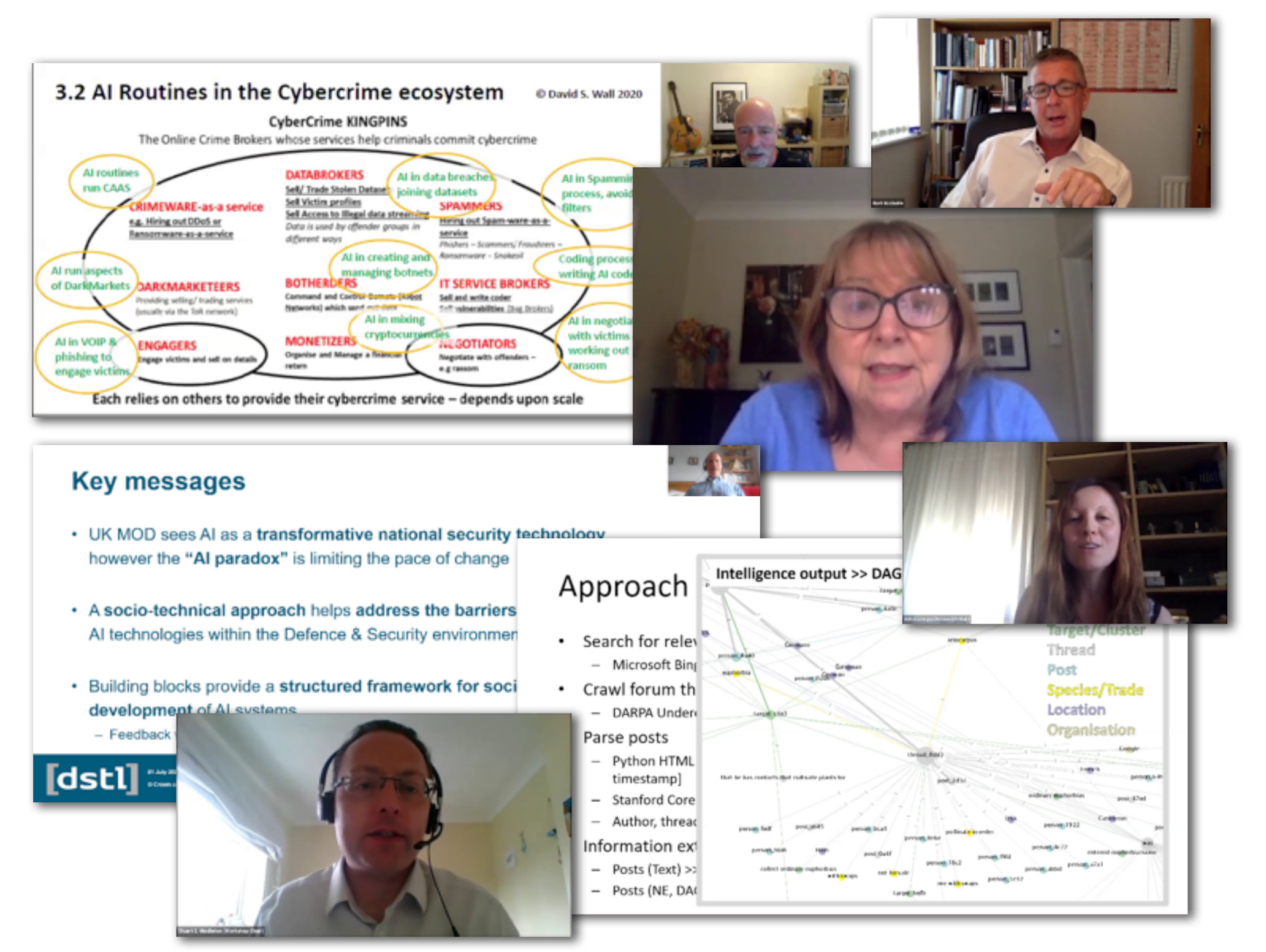

When we put together the STAIDCC20 workshop, our aim was to bring together an inter-disciplinary mix of researchers and practitioners working in defence, cybercrime and cybersecurity application areas to discuss and explore the challenges and future research directions around socio-technical AI systems. Despite the challenges of moving to a virtual presentation format, the workshop achieved exactly this. It provided a platform for researchers and practitioners to showcase the current state of the art in socio-technical AI and to discuss the key challenges and the future research directions that matter most today.

Keynotes and oral paper presentations

Prof Steven Meers – Challenges around Socio-technical AI Systems in Defence: A Practitioner's Perspective. This keynote explored the threats and opportunities presented by AI and outlined a framework called the "AI Building Blocks", which provides a socio-technical perspective to drive AI adoption in a responsible manner that reduces overall harm.

Prof David Wall – AI and Cybercrime: balancing expectations with delivery! This keynote explored using socio-technical AI systems to deal with cybercrime. It identified key challenges, including balancing expectations with delivery and dealing with the fact that AI is now part of the criminal's playbook, before concluding with the practical realities of using AI to attribute and investigate cybercrime.

Dr Stuart E. Middleton – Information extraction from the long tail: A socio-technical AI approach for criminology investigations into the online illegal plant trade. This paper described an inter-disciplinary socio-technical artificial intelligence (AI) approach to information extraction from the long tail of online forums around internet-facilitated illegal trades of endangered species, empirically showing the value of named entity (NE) directed graph visualizations across two case studies.

Ankit Tewar – Decoding the Black Box: Interpretable Methods for Post-Incident Counter-terrorism Investigations. This paper explored the major challenges of explaining machine learning patterns in human-friendly ways, to better support decision makers in verifying captured patterns and using them to derive actionable intelligence.

Panel discussion

The workshop panel consisted of a mix of practitioners and researchers across the defence, cybercrime and cybersecurity application areas. Representing practitioners, we had Greg Elliot, National Cyber Crime Unit (NCCU), National Crime Agency (NCA); Prof Steven Meers, Defence Science and Technology Laboratory (DSTL); and Mark McCluskie, EMEA Head of Investigations, Nuix. Representing researchers, we had Prof David Wall, Centre for Criminal Justice Studies, University of Leeds; and Prof Dame Wendy Hall, Electronics and Computer Science, University of Southampton.

The big questions discussed by the panel were:

- How do we get trust back? If it is not diverse, it is not ethical!
  - We are facing an uneven trust landscape: people trust Google and Facebook with enormous amounts of highly personal data but are reluctant to share even limited information with governments. Openness is one way to build trust. Linking data sharing to benefits such as utility, diversity or health also helps.

- How do we deal with the fundamental data problem underlying AI and big data analytics?
  - Can we reduce dependency on data through techniques such as semi-supervised learning, context-based learning or few-shot/one-shot learning? Simulations, synthetic training sets and context learning can help. What about better data quality? Maintaining provenance links lets humans clean up datasets and improve their quality. AI systems could be reviewed in the way we currently conduct ethical reviews. Why share data? Datasets are often shared to promote work on tasks of value. AI evidence is not used in court today. It is all about human-machine teaming, not unverifiable black boxes.

- What practical steps can we take to shape the adoption of AI for a positive impact?
  - Developing frameworks to guide the development of responsible AI systems for defence and security applications; training and educating both senior decision makers and early-career scientists and engineers; and promoting debate and discussion to form international norms for the responsible application of AI to defence and security challenges.

- What are the dangers of AI in any part of the criminal justice arena?
  - AI does not make decisions; humans do, with AI support. Court evidence is not the right place to use AI. The processing method needs to be disclosed. Increasing the repeatability of AI results would help. AI bias is measurable; human bias is not!

- What threats and opportunities does the anonymous nature of cybercriminal environments present?
  - Deep fakes, in both text and video. AI can be used to detect patterns in fake content. Writing style analysis and author profiling can be applied to anonymous posts. It is very hard to anonymize open-source intelligence (OSINT) in a multi-modal world. Training bias is a concern, as highlighted by Amazon 'pausing' police use of its facial recognition technology. Could anonymous training datasets overcome bias, or would they simply hide it away?

Resources and more information

Workshop website with links to paper pre-prints, slides and video presentations

Workshop organising committee

Stuart E. Middleton, University of Southampton, sem03@soton.ac.uk

Anita Lavorgna, University of Southampton, A.Lavorgna@soton.ac.uk

Ruth McAlister, Ulster University, r.mcalister@ulster.ac.uk