Twitter harvesting

By Amir Aryaarjmand.

At the Creative DigiFest #SxSC2, Adam Field talked about a ‘Lightweight Twitter Harvester’, featuring his service. I arranged an interview with him to learn more about it. What follows is a summary of that interview, a tutorial on how to use the service, and comments from colleagues working on related initiatives.

Regarding the motivation for building the Twitter harvester, Adam Field said: “[Twitter] data is ephemeral unless you actively harvest it, it disappears after a couple of weeks; so, what I want to provide is a service, here at the University, for non-technical people who want to harvest small streams of tweets”.

Introducing the harvester

Adam’s Twitter harvest service is an extension that runs on top of ePrints. Before his job with iSolutions, Adam was involved in a number of projects as part of his work for ePrints services, and Twitter harvesting is one of the ePrints capabilities they explored. The current pilot service is for lightweight Twitter harvesting and archiving. Because it is a pilot, its use is limited to small events or projects that generate no more than 1000 tweets per day. The service does three things: (1) it harvests tweets, (2) it preserves the harvested tweets, and (3) it allows users to download the stored tweets.

A. Create an account

The service is available to all academic staff and graduate students of the University of Southampton. To create an account, email Adam Field (af05v@soton.ac.uk) requesting one.

B. Log in to the service

Once your request has been approved by Adam Field, you can access the service at http://tweets.soton.ac.uk. Click on login and enter the service using your university ID and password.

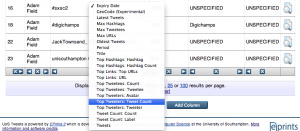

C. Manage Twitter Feed

Logging in will automatically take you to the ‘Manage Twitter Feeds’ page, which lists all feeds currently being harvested by all users of the system. On this page you can:

- Access the list of items that are already being harvested

- Filter the items that are already being harvested by ID, userID, Search String, Number of Tweets, Project Title, and Abstract

- Add a column to the list of items that are already being harvested

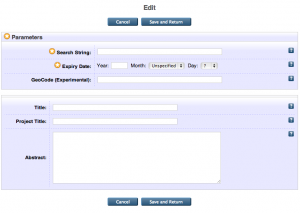

D. Create an Item

To create a new item (a new harvest project), click on the ‘Create an Item’ button, which will load the ‘Edit’ page. The most important field is ‘Search String’, where you enter the search term that the service will use to harvest related tweets.

The search terms can be:

- A Twitter username, like @sotonDE OR sotonDE

- A hashtag like #SxSC2

- Or any keyword or combination of keywords, like (University OR Uni) AND (Southampton OR Soton)
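Longer keyword combinations are easy to mistype, so it can help to assemble them programmatically before pasting the result into the ‘Search String’ field. The sketch below is only an illustration: the helper names `any_of` and `all_of` are hypothetical and are not part of the harvester.

```python
def any_of(*terms: str) -> str:
    """Join alternatives with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def all_of(*groups: str) -> str:
    """Require every group to match by joining the groups with AND."""
    return " AND ".join(groups)


# Rebuild the keyword example from the list above.
search_string = all_of(
    any_of("University", "Uni"),
    any_of("Southampton", "Soton"),
)
print(search_string)  # (University OR Uni) AND (Southampton OR Soton)
```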

Another mandatory field, ‘Expiry Date’, requires you to enter the date on which the feed should stop being harvested.

All other fields are optional; however, putting a value in the ‘Project Title’ field will allow you to group similar tweetstreams together in the browse view. When you give the service a search string, it is passed to Twitter, which returns any matching tweets posted since the last time the search was run. The service runs this search every 20 to 30 minutes to download new tweets.
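The idea of repeatedly running a search and keeping only tweets that arrived since the previous run can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the service's actual code: `search_twitter` is a hypothetical stand-in for a real Twitter search call, `FAKE_TIMELINE` is invented sample data, and the `Feed` class and its field names are made up for the example.

```python
from datetime import date
from typing import Any, Dict, List

Tweet = Dict[str, Any]

# Invented sample data standing in for Twitter (assumption, not real tweets).
FAKE_TIMELINE: List[Tweet] = [
    {"id": 1, "text": "Getting ready for #SxSC2"},
    {"id": 2, "text": "Great talk at #SxSC2"},
    {"id": 3, "text": "Lunch time"},
    {"id": 4, "text": "#SxSC2 wrap-up"},
]


def search_twitter(query: str, since_id: int) -> List[Tweet]:
    """Hypothetical search: tweets containing `query` with an id above `since_id`."""
    return [
        tweet for tweet in FAKE_TIMELINE
        if tweet["id"] > since_id and query.lower() in tweet["text"].lower()
    ]


class Feed:
    """One harvested feed: a search string, an expiry date and the stored tweets."""

    def __init__(self, search_string: str, expiry_date: date) -> None:
        self.search_string = search_string
        self.expiry_date = expiry_date
        self.last_seen_id = 0
        self.tweets: List[Tweet] = []

    def harvest_once(self, today: date) -> int:
        """Store tweets newer than the last one seen; return how many were added."""
        if today >= self.expiry_date:
            return 0  # the feed has expired, so nothing is harvested
        new_tweets = search_twitter(self.search_string, self.last_seen_id)
        self.tweets.extend(new_tweets)
        if new_tweets:
            self.last_seen_id = max(tweet["id"] for tweet in new_tweets)
        return len(new_tweets)


feed = Feed("#SxSC2", expiry_date=date(2013, 1, 31))
print(feed.harvest_once(date(2012, 12, 11)))  # 3: every matching tweet is new
print(feed.harvest_once(date(2012, 12, 11)))  # 0: nothing newer than the last id
```

In a real deployment a scheduler would call something like `harvest_once` every 20 to 30 minutes. Remembering the highest tweet id seen so far is what keeps successive runs from storing the same tweet twice.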

E. Explore an Item

You can see all the information about an item you created for harvesting and archiving either by following its ‘Twitter Feed’ link, which the service creates, or by clicking on the icon next to the item on the ‘Manage Twitter Feeds’ page.

On the Twitter Feed page you can see:

- Tweets, in chronological order

- Top Tweeters

- Options for exporting the feed

- Top Hashtags

- Top Mentions

- Top Links

- Tweet Frequency

For instance, here is the screen shot of the ‘Twitter Feed for #SxSC2’ generated for the Creative DigiFest event:

F. Export the Feed

The service stores all of the downloaded tweets in a database and generates a landing page for each set of tweets. This page gives an overview of the stream of tweets and allows them to be downloaded in two main formats: CSV and JSON. The service is bound by the Twitter API terms of use, which specify no redistribution of tweets. Therefore, the downloaded data are only for University of Southampton research use. However, visualisations generated from the data, and the IDs of the tweets themselves, may be published. Two other options for downloading the feed are HTML and Wordle. Wordle is a simple visualisation application; for the SxSC2 Twitter feed, for example, a Wordle was generated from word frequency.
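Once a feed has been downloaded, overview figures such as top tweeters, top hashtags and top mentions can be recomputed locally. The sketch below assumes the JSON export is a list of tweet objects with `from_user` and `text` fields. Those field names, the `summarise` function and the sample data are assumptions made for illustration, so check them against a real export before relying on them.

```python
import json
import re
from collections import Counter
from typing import Any, Dict, List

# Invented sample standing in for a downloaded JSON export. The field names
# "from_user" and "text" are assumptions, not the service's documented format.
SAMPLE_EXPORT = """
[
  {"from_user": "alice", "text": "Enjoying #SxSC2 with @bob"},
  {"from_user": "bob", "text": "Thanks @alice! #SxSC2 #soton"},
  {"from_user": "alice", "text": "Slides from #SxSC2 are up"}
]
"""

HASHTAG = re.compile(r"#(\w+)")
MENTION = re.compile(r"@(\w+)")


def summarise(tweets: List[Dict[str, Any]]) -> Dict[str, Counter]:
    """Count tweets per user, and hashtags and mentions across all tweet texts."""
    tweeters: Counter = Counter()
    hashtags: Counter = Counter()
    mentions: Counter = Counter()
    for tweet in tweets:
        tweeters[tweet["from_user"]] += 1
        text = tweet["text"]
        # Lower-case so that #SxSC2 and #sxsc2 are counted together.
        hashtags.update(tag.lower() for tag in HASHTAG.findall(text))
        mentions.update(name.lower() for name in MENTION.findall(text))
    return {"tweeters": tweeters, "hashtags": hashtags, "mentions": mentions}


summary = summarise(json.loads(SAMPLE_EXPORT))
print(summary["tweeters"].most_common(1))  # [('alice', 2)]
print(summary["hashtags"].most_common(2))  # [('sxsc2', 3), ('soton', 1)]
```

The same counting approach applied to individual words rather than hashtags gives the word frequencies that a word-cloud tool works from.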

Other work on Twitter harvesting

Mark Borkum, a University of Southampton postgraduate researcher, and his colleagues have also been working on Twitter archiving using a different approach:

“Twitter archiving is a burdensome beast. In our opinion, the most important aspects of data archival are lossless persistence, and the incorporation of semantics. When data is obtained from a third-party provider, such as Twitter, our primary objective is to ensure that it is stored in its most general form, with the appropriate metadata, to ensure that it can be reused and repurposed in the future. To achieve this, we leverage knowledge representation technologies, including the Resource Description Framework (RDF) and the Web Ontology Language (OWL).

“In the case of Twitter, the data is provided in a format called JavaScript Object Notation (JSON). Hence, one of the goals of our work is to provide a mechanism for the transformation of JSON documents into RDF graphs – a project that has been imaginatively dubbed “JSON2RDF”. Using JSON2RDF, the data transformation is described programmatically, and is incorporated into larger software projects as if it were a black box (with JSON going in, and RDF coming out).

“Once the data has been transformed into RDF, a smorgasbord of pre-existing tools, techniques and technologies may be brought to bear upon it, e.g., by performing post-processing on the data; by visualising the relationships between data items; or, by integrating the data with other sources. All of this is possible because we took the decision to archive the data in its entirety, at its point of entry into our system.”
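To give a flavour of what ‘JSON going in, and RDF coming out’ can look like, here is a minimal sketch that turns one JSON tweet into N-Triples statements. It is not the JSON2RDF project and does not reflect its design. The namespaces under `example.org`, the function names and the sample tweet are all hypothetical, and the mapping assumes JSON keys that are safe to append to an IRI.

```python
import json
from typing import Any, Dict, Iterator

# Hypothetical namespaces chosen for this example only.
TWEET_NS = "http://example.org/tweet/"
VOCAB_NS = "http://example.org/vocab#"


def escape_literal(value: str) -> str:
    """Escape characters that may not appear raw inside an N-Triples literal."""
    return (
        value.replace("\\", "\\\\")
        .replace('"', '\\"')
        .replace("\n", "\\n")
        .replace("\r", "\\r")
    )


def tweet_to_ntriples(tweet: Dict[str, Any]) -> Iterator[str]:
    """Yield one N-Triples line per field, using the tweet id as the subject."""
    subject = f"<{TWEET_NS}{tweet['id']}>"
    for key, value in tweet.items():
        if key == "id":
            continue
        # Nested values are kept as JSON text so that nothing is dropped.
        text = value if isinstance(value, str) else json.dumps(value)
        yield f'{subject} <{VOCAB_NS}{key}> "{escape_literal(text)}" .'


raw = '{"id": 42, "from_user": "alice", "text": "Hello \\"world\\" #SxSC2"}'
for line in tweet_to_ntriples(json.loads(raw)):
    print(line)
```

Every value is emitted as a plain string literal here. A fuller mapping would attach datatypes and reuse established vocabularies, which is where the semantics described in the quotation come in.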

JISC DataPool

The JISC DataPool project is examining methods including those described above for long-term hosting of social media relating to University of Southampton research. You can learn more about the project on the JISC DataPool blog.

Links

http://repositoryman.blogspot.co.uk/2011/10/using-eprints-repositories-to-collect.html

A training seminar on the Twitter harvester took place on 11 December 2012.

Ramine Tinati is working extensively on Twitter harvesting, analysis and visualisations under the aegis of the Web Observatory.

More details of the methods employed by Ramine are introduced in the following eprint.

Identifying communicator roles in Twitter

Tinati, Ramine, Carr, Leslie, Hall, Wendy and Bentwood, Jonny (2012) Identifying communicator roles in Twitter. In, Mining Social Network Dynamics (MSND 2012), Lyon, FR, 16 – 20 Apr 2012. 8pp. (Submitted). […]

Video showing evolving tweet network by @raminetinati