Archive for October, 2012

Introductions: Technoethics

Moor on Ethics & Technology

Moor (2005) believed that as the social impact of technological advances increases, the number of ethical challenges also increases. He suggested that this is a result of the ever-increasing novel uses of technological advances and a lack of ethical inquiry into these activities. For example, the Web has fundamentally altered the social contexts within which we live, work and play. It has raised several questions, including some about the legitimacy of human relationships maintained using its platform, its facilitation of illegal activity on a large scale and the privacy of its users.

However, though these are very present and pressing societal issues, they are also vast areas for investigation, and research coverage is relatively sparse. An added dimension to this challenge is that technological advances seem to continuously change the standard ethical questions. Researchers are thereby forced to explore, simultaneously, the ethical implications of these technologies as they were, are now and could be in the future.

Technoethics: Tackling New Types of Ethical Questions

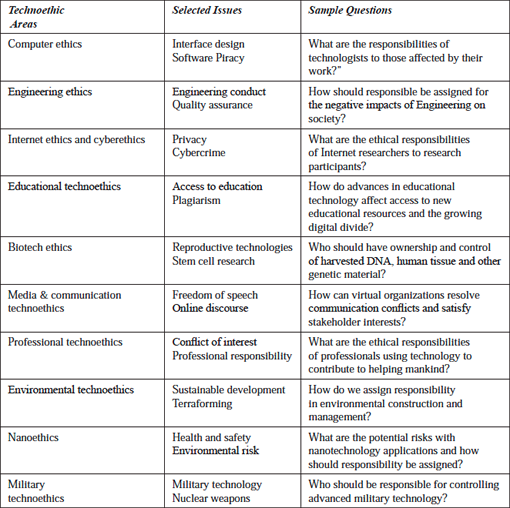

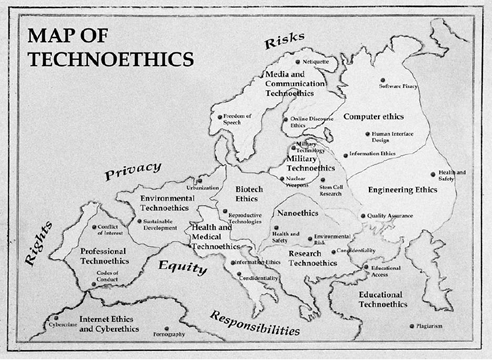

Unsurprisingly, many of the current debates surrounding technological advancement are addressed by technoethics (TE). They are some of the most challenging issues that scientists, innovators and technologists will face. These challenges usually characterise the roles these individuals play when plotting an ambitious route for the future. They inadvertently raise important questions about rights, privacy, responsibility and risk that must be adequately answered.

However, because the variety of questions gives rise to as many ethical inquiries, each based in a different field, this knowledge tends to be somewhat disconnected. TE pulls this knowledge together around the central idea of technology. Moreover, unlike traditional applied ethics, where the focus is placed on an ethical concern for living things, TE is ‘biotechno-centric’. As the Web is to Web science, technology, as well as living things, is central in TE.

The Technoethics Way

TE traces its origins to the late 1970s, when it emerged as an interdisciplinary field tasked with answering the difficult questions posed earlier. The term was coined by Mario Bunge (1977). TE assigns technologists not only technical responsibility but also social and moral responsibility for their technological innovations and applications. It is believed that technologists are responsible for ensuring that their technologies are optimally efficient and not harmful, and that their benefits are lasting.

This field distinguishes itself from other branches of applied ethics by elevating technology to the level of living things. However, it also builds on knowledge from wider ethical inquiry. TE has many sub-areas, for example, Internet, computer, biotech, cyber and nano ethics.

Calling All Web Scientists

Since the Web’s creation years ago, an understanding of this ever-changing phenomenon and its impact has been developing at a rapid pace in many disciplines. Yet there is a great deal more to learn, especially when the Web is positioned as the subject of focus. Considering Bunge’s views on technoethics, I believe that this obligation rests with Web scientists who are technically, morally and socially responsible for Web innovations and applications.

As a budding Web scientist thinking about how technoethics applies to Web science, I am fascinated by all the questions that could be asked and that I could work towards answering. Ultimately, for us Web scientists, the aims of technoethics could be achieved by gaining insight into the relationship between people, society and the Web, and how these entities impact each other.

Notes

This & Last Week’s Plan

- Identify the simplest books to read that will give an easy-to-understand introduction to the disciplines I picked.

- Make notes on books read.

- Prepare a blog post that gives an overview of what I want to work on.

- Publish a blog post that introduces technoethics.

- Publish a blog post that introduces risk management.

- Outline a reading plan for moving forward.

Introductory Readings

- Ethics: A Very Short Introduction – Simon Blackburn

- Handbook of Research on Technoethics – Rocci Luppicini & Rebecca Adell

- Risk: A Very Short Introduction – Baruch Fischhoff

- Fundamentals of Risk Management: Understanding, Evaluating and Implementing Effective Risk Management – Paul Hopkin

EWIII: Philosophy and Law

This week, I did some general reading across 3 books, though I only read one of them completely. The book I read completely was Gillies and Cailliau, How the Web was Born. This is a really good general history of the web and of computers generally. For a non-technical and very interesting overview of where the web comes from I recommend it. They talk about TimBL’s adventures, Apple, Microsoft, Mozilla, Marc Andreessen and so on. This is a great book for someone who knows absolutely nothing about computers.

The 2 other books that I had a general look through as background reading were Boyd & Richerson, Culture and the Evolutionary Process, and David Bainbridge, Introduction to Computer Law. Boyd and Richerson are sociologists, sort of. Most sociologists hate them, and most psychologists as well, I should point out. However, I think they’re great. Just because they’re unpopular doesn’t mean they’re crazy. B&R were trained as ecologists but they practically created a new ‘scientific sociology’ area all by themselves. Basically, they thought that most sociology was philosophical hogwash, and so they wanted to create something more scientific. They tried to create a theory of culture and society that was heavily informed by relevant sciences, in their case especially by the biological evolutionary sciences, particularly population genetics. They describe a theory of how information of different kinds may move through a population of people, and then they propose some equations that may be able to describe how this happens. These equations are heavily inspired by similar recursion equations proposed in population genetics, which describe how certain kinds of genetic information move through certain kinds of populations under certain conditions over periods of time. I think this is really interesting stuff. Sociologists hate B&R because they’re, well, because they’re doing something that’s hard to understand and it looks scientific. Psychologists do not like B&R very much either because it looks as if they’re trying to explain human behaviour without even bothering to refer to human psychology and cognitive processes. Personally I agree with this 2nd criticism, but I am willing to forgive B&R for it. They weren’t trained as psychologists, and we can’t expect them to be experts on everything. Overall, I recommend B&R, though they are very difficult if you are not familiar with biological evolutionary theory.

I read this stuff because I am thinking of a question at the moment: to what extent should we consider the internet to be a psychological human thing? To what extent is the internet cognitive stuff? This is the sort of thing that I proposed in my application for the course.

The final book I had a look at was Bainbridge, Introduction to Computer Law. I only managed a skim through, I’m afraid, as this is a monster of a book at 500+ pages. It’s more like a textbook than anything, I suppose. It looks reasonably good and not too technical, though there is a disappointing lack of pictures. This will be my main reading for next week.

Computer science and e-democracy

Unlike the previous post, which briefly showed a couple of works on e-democracy from the perspective of a political science specialty, this post explores an overview of the entire discipline of computer science, contained in Brookshear’s Computer Science. An Overview (2009). The aim is to start establishing initial connections to the topic and problems stated in the first post regarding technical issues in direct democracy.

In his overview, Brookshear claims that computer science has algorithms as its main object of study. More precisely, it is focused on the limits of what algorithms can and cannot do. Algorithms can perform a wide range of tasks and solve a wide range of problems, but there are other tasks and problems beyond the scope of algorithms. These boundaries are the area within which theoretical computer science operates. The theory of computation can therefore determine what can and what cannot be achieved by computers.

Among other issues, these theoretical considerations may apply directly to the potential problem of electoral fraud: computer science can seek answers as to whether or not algorithms can be created to alter or manipulate electoral results in a given electoral system. By establishing the limits of algorithms, an electoral system that falls beyond algorithmic capabilities can be devised in collaboration with other fields such as political science.

From the structure of the book, it can be inferred that computer science also considers social repercussions in every aspect of the study of computers, as every chapter in the book contains a section on the social issues that the use and development of computer technologies entails. Ethical implications are present in every step these technologies make, and computer scientists seem to be sensitive to them. Legal and political considerations are not alien to the scope of computer science. Therefore, finding common ground with other fields such as political science, in order to address certain issues in the use of information and communication technologies for a more direct democracy, becomes a quite achievable aim, as long as there exists a mutual effort to understand the ways in which these two disciplines deal with the problems they encounter, and the methods they use to try to solve them.

The next post will consist of a brief overview of political science, as it is the discipline that will be finally chosen –sociology will be ruled out– for this interdisciplinary essay.

Reference:

Brookshear, J.G. (2009) Computer Science. An Overview. (10th ed.) Boston: Pearson.

Anthropology – approaches and methodologies

Picking up where I left off last week, I will now present the different approaches and methodologies of anthropology as a discipline.

We have already seen that social and cultural anthropology – also known as ethnography – as a discipline endeavours to answer questions such as what is unique about human beings, or how social groups are formed. This clearly overlaps with many other social sciences. For all the authors reviewed, what distinguishes anthropology from other social sciences is not the subject studied, but the discipline’s approach to it. For Peoples and Bailey (2000, pp. 1 and 8), the anthropological approach to its subject is threefold:

- Holistic

- Comparative

- Relativistic

The holistic perspective means that ‘no single aspect of human culture can be understood unless its relations to other aspects of the culture are explored’. It means anthropologists are looking for connections between facts or elements, striving to understand parts in the context of the whole.

The comparative approach, for Peoples and Bailey (2000, p. 8), implies that general theories about humans, societies or cultures must be tested comparatively, i.e. that they are ‘likely to be mistaken unless they take into account the full range of cultural diversity’.

Finally, the relativistic perspective means that for anthropologists no culture is inherently superior or inferior to any other. In other words, anthropologists try not to evaluate the behaviour of members of other cultures by the values and standards of their own. This is a crucial point which can be a great source of debate when studying global issues, such as the topic we will discuss on the global digital divide. And it is why I will spend one more week reviewing literature on anthropology before moving on to the other discipline of management: to see how anthropology is generally applied to more global contexts. I will then try to provide a discussion on the issues engendered by the approaches detailed above.

So for Peoples and Bailey these three approaches are what distinguish anthropology from most other social sciences. For Monaghan and Just, the methodology of anthropology is its most distinguishing feature. Indeed, they emphasise fieldwork – or ethnography – as what differentiates anthropology from other social sciences (Monaghan & Just 2000, pp. 1-2). For them, ‘participant observation’ is ‘based on the simple idea that in order to understand what people are up to, it is best to observe them by interacting intimately over a longer period of time’ (2000, p. 13). Interview is therefore the main technique to elicit and record data (Monaghan & Just 2000, p. 23). This methodological discussion is similarly found in Peoples & Bailey. Eriksen (2010, p. 4) likewise defines anthropology as ‘the comparative study of cultural and social life. Its most important method is participant observation, which consists in lengthy fieldwork in a specific social setting’. This particular methodology also poses the issues of objectivity, involvement or even advocacy. I will address these next week after further readings on anthropological perspectives in global issues, trying to assess the tensions between the global and the particular, the universal and the relative, and where normative endeavour stands among all these.

References

Eriksen, T. H. (2010) Small Places, Large Issues: An Introduction to Social and Cultural Anthropology 3rd edition, New York: Pluto Press

Monaghan, J. and Just, P. (2000) Social and Cultural Anthropology: A Very Short Introduction, Oxford: Oxford University Press

Peoples, J. and Bailey, G. (2000) Humanity: An Introduction to Cultural Anthropology, 5th ed., Belmont: Wadsworth/Thomson Learning

Economics perspective

How do web-only firms grow to build the digital economy? Which markets do they operate in? How important is the digital economy?

In macroeconomics there are a number of growth theories:

Classical growth theory: This is the view that growth of real GDP per person is temporary, and that real GDP per person will return to subsistence level due to a population explosion.

Neoclassical growth theory: This is the proposition that real GDP per person grows because technological change induces saving and investment which makes physical capital grow. Diminishing returns end growth if technological change stops. It breaks with the classical growth theory by arguing that the opportunity cost of parenting will prohibit a rise in population.

New growth theory: This argues that the economy is a perpetual motion machine driven by the profit motive, manifest in the competitive search for and deployment of innovation through discoveries and technological knowledge. Discoveries and knowledge are defined as public goods in that no one can be excluded from using them and people can use them without stopping other people’s use. It is argued that knowledge capital does not bring diminishing returns, i.e. it is not the case that the more free knowledge you accumulate, the less productivity is generated.

Within the microeconomics view, there are 4 market types: perfect competition in which many firms sell an identical product; monopolistic competition in which a large number of firms compete with slightly different products, leading to differentiation; oligopoly where a small number of firms compete; and monopoly in which one firm produces a unique good or service, e.g. utility suppliers.

It has been estimated by The Boston Consulting Group that the proportion of GDP in 2009 earned by the digital economy was 7.2%[1]. They estimate that it will grow to 10% in 2015. They define the elements captured by GDP as: investment, consumption, exports and Government spending. The total contribution of the internet sector divides into: 60% consumption on consumer e-commerce and consumer spending to access the internet; 40% on Government spending and private investment. They identify elements which contribute indirectly but which are ‘beyond GDP’: e.g. user-generated content, social networks, business to business e-commerce, online advertising, consumer benefits etc.
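To make the figures above concrete, here is a small arithmetic sketch. It assumes the 60/40 split applies to the 2009 total of 7.2% of GDP, and the implied yearly growth of the share is a derived illustration, not a number taken from the report. The variable names are made up for this example.

```python
# Figures quoted above from the Boston Consulting Group estimate.
share_2009 = 7.2    # digital economy as a percentage of GDP in 2009
share_2015 = 10.0   # projected percentage of GDP in 2015
years = 2015 - 2009

# Assumption: the 60/40 split is applied to the 2009 total.
consumption_pts = share_2009 * 0.60      # consumer e-commerce and internet access
gov_investment_pts = share_2009 * 0.40   # government spending and private investment

# Derived illustration (not in the report): the compound annual growth
# of the share itself that would take it from 7.2% to 10% in six years.
implied_annual_growth = (share_2015 / share_2009) ** (1 / years) - 1

print(f"Consumption: {consumption_pts:.2f} percentage points of GDP")
print(f"Government and investment: {gov_investment_pts:.2f} percentage points of GDP")
print(f"Implied yearly growth of the share: {implied_annual_growth:.1%}")
```

Note that this is growth in the digital economy's share of GDP, not growth of the digital economy in absolute terms, which would also depend on how GDP itself changes.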

[1] The Connected Kingdom: how the internet is transforming the U.K. economy. The Boston Consulting Group, commissioned by Google, 2010.

Complexity Science

Keywords: complexity theory, complex adaptive system theory, general system theory, nonlinear dynamics, self organising, adaptive, chaos, emergent.

For the past couple of weeks I have been trying to get my head around the idea of theories of complexity as applied to systems. What is it? How is it measured? How does it, or does it in fact, relate to web science?

John Cleveland, in his book “Complex Adaptive Systems Theory: An Introduction to the Basic Theory and Concepts” (published in 1994, revised in 2005), states that complex adaptive systems theory seeks to understand how order emerges in complex, non-linear systems such as galaxies, ecologies, markets, social systems and neural networks.

For an explanation more useful to a layman I was advised to read ‘Complexity: A Guided Tour’, by Professor Melanie Mitchell (Professor of Computer Science at Portland State University). In 349 pages Melanie Mitchell has tried to give a clear description and explanation of the concepts and methods which may be considered to comprise the field of Complexity Science, without the use of complex mathematics.

One problem is that, as a recognised discipline, Complexity Science appears to be barely 30 years old, though work on some of its aspects goes back to the 1930s. Mitchell suggests its beginning as an organized discipline could be dated to the founding of the Santa Fe Institute and its first conference on the economy as an evolving complex system in 1987.

Another problem appears to be that the sciences of complexity should not be seen as a single science. In the last chapter of her book Mitchell comments that ‘most (researchers in the field), believe that there is not yet a science of complexity at least not in the usual sense of the word science – complex systems often seem to be a fragmented subject rather than a unified whole.’

Armed with these caveats I started ploughing my way through an interesting and enlightening book. The book is divided into five parts: background theory, life and evolution in computers, computation writ large, network thinking and conclusions (the past and future of the sciences of complexity). By the end of the book I knew more about such things as Hilbert’s problems, Gödel’s theorem, Turing machines and uncomputability, the Universal Turing machine and the Halting problem, amongst others.

Below is a summary of points that I found interesting and that have spurred further reading.

- Seemingly random behaviour can emerge from deterministic systems, with no external source of the randomness.

- The behaviour of some simple, deterministic systems can be impossible to predict, even in principle, in the long term due to sensitive dependence on initial conditions.

- There is some order in chaos, seen in universal properties common to large sets of chaotic systems, e.g. the period-doubling route to chaos and Feigenbaum’s constant.

- Complex systems are centrally concerned with the communication and processing of information in various forms.

- The following should be considered with regard to evolution: Second law of thermodynamics, Darwinian evolution, organisation and adaptation, Modern Synthesis (Mendel vs Darwin), supportive, discrepancies, evolution by jerks, historical contingency.

- When attempting to define and measure the complexity of systems, physicist Seth Lloyd (2001) asked: how hard is it to describe? How hard is it to create? What is its degree of organisation?

- Complexity has been defined by:

  - Size

  - Entropy

  - Algorithmic information content

  - Logical depth

  - Thermodynamic depth

  - Statistical complexity

  - Fractal dimension

  - Degree of hierarchy

- Self-reproducing machines are claimed as viable, e.g. von Neumann’s self-reproducing automaton, “a machine can reproduce itself”

- Evolutionary computing – genetic algorithms (GA), work done by John Holland, algorithm used to mean what Turing meant by definite procedure, recipe of a GA …. Interaction of different genes.

- Computation writ large and computing with particles – Cellular automata, life and the universe compared to detecting information processing structures in the behaviour of dynamical systems, applied to cellular automata evolved by use of Genetic Algorithms.
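The first two points in the list above (apparent randomness from a deterministic rule, and sensitive dependence on initial conditions) can be illustrated with a minimal sketch. It uses the logistic map, a standard textbook example of chaos. The function name, the starting value 0.2, the perturbation of 1e-10 and the parameter r = 4 are all choices made for this illustration.

```python
def logistic_trajectory(x0, r=4.0, steps=50):
    """Iterate the deterministic rule x -> r * x * (1 - x), returning every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


# Two starting points that differ by only one part in ten billion.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# Distance between the two trajectories at each step.
gaps = [abs(x - y) for x, y in zip(a, b)]

print(f"Initial gap: {gaps[0]:.1e}")
print(f"Largest gap within 50 steps: {max(gaps):.3f}")
```

Nothing random is involved, yet the tiny initial difference grows until the two runs bear no resemblance to each other. That is why long-term prediction fails even though the rule is fully known.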

The points which seemed to be most relevant to web science and merit further investigation are:

- Genetic algorithms

- Small world networks and scale free networks

- Scale free distribution vs normal distribution

- Network resilience

The future of complexity – mathematician Steven Strogatz “I think we may be missing the conceptual equivalent of calculus, a way of seeing the consequences of myriad interactions that define a complex system. It could be that this ultra-calculus, if it were handed to us, would be forever beyond human comprehension…”.

Astronomy and Psychology vs the Web

Last week I said that I would explore how the web can be seen as a psychological world, and how astronomy can be used to see the ‘real’ effects of this world:

In order to find the common ground between Astronomy and Psychology I will first detail the initial research agendas of the 2 disciplines.

Psychology is a science that seeks to understand behavior and mental processes, and to apply that understanding in the service of human welfare. In particular, engineering psychologists study and improve relationships between human beings and machines. With machines such as computers, it is easy to conceptualize the relationship between human beings and machines, but when applied to the web, the idea of a machine becomes problematic. The web is not a machine as such. Yes, it is built on servers and browsers among other things, but the web itself is a combination of code that has no physical form. Cognition, an important aspect of psychology, therefore helps us understand how the web is perceived by its users: it covers the ‘mechanisms’ through which people store, receive and otherwise process information. The interaction between people and the web is a somewhat complicated idea because if you ask people what the web looks like, or what shape it is, they will refer to a cognitive map, that is, their mental representation of the web. This line of thought leads me to wonder whether the web is physically constructed or cognitively constructed.

It is now necessary to explore the basics of astronomy to see how the idea of a physically constructed web is understood. While it may seem obvious to someone that the web is virtual, I hope that after reading the following, they will be somewhat enlightened.

Astronomers consider the entire universe as their subject. They derive the properties of celestial objects and from those properties deduce laws by which the universe operates. Astronomers have been using observation to understand how the universe works. All of their knowledge comes from the light which is received on earth. From observations of this kind, Newton was able to formulate 3 laws which are the basis of mechanics. Briefly stating these laws:

1) A body will remain at a constant velocity unless acted on by an external force.

2) Force = Mass x Acceleration.

3) Every action has an equal and opposite reaction.

When these laws are applied to celestial objects, Newton’s law of universal gravitation is the basis of celestial mechanics. According to this: “every particle of matter in the universe attracts every other particle of matter with a force directly proportional to the product of the masses and inversely proportional to the square of the distance between them”. Importantly, the mass of an object reflects the amount of matter it contains. While this idea is easy to understand when objects have physical properties, it is somewhat inconsistent with cognitively constructed objects. However, in physics there is such a thing as a point particle: an idealised object with no spatial extent and no internal structure. The point is that something can exist even if it doesn’t have the conventional properties of a physically constructed object; it is just harder to conceptualize.
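Written out in symbols, the quoted law of universal gravitation takes the following standard form. The symbols are the usual textbook notation rather than anything defined in this post: $F$ is the attractive force, $m_1$ and $m_2$ are the two masses, $r$ is the distance between them and $G$ is the gravitational constant.

$$
F = G \, \frac{m_1 m_2}{r^2}
$$

Doubling either mass doubles the force, while doubling the distance reduces the force to a quarter of its value.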

This is where the divide between psychology and astronomy starts to blur. Okay, so every particle of matter in the universe attracts every other based on mass. Since there is no mass in the ‘cognitively constructed web’, would the equivalent be information itself? Would it be possible to say that ‘every particle of matter in the virtual web attracts every other particle of matter with a force directly proportional to the product of the information’? But what do I mean by particle? Is this information at a semantic level? Could databases such as Wikipedia be compared to planets? These questions will be explored in the following weeks.

For now, I want to focus on Newton’s three laws and the idea of informational attraction.

Could these three laws:

1) A body will remain at a constant velocity unless acted on by an external force.

2) Force = Mass x Acceleration.

3) Every action has an equal and opposite reaction.

be used to explain:

1) the expansion of the dark web?

2) With the Arab Spring in mind: the force that a political/social uprising has = the amount of information x the acceleration (where acceleration is the speed at which this information is being cognitively assimilated by individuals). [It is important to note here that force is a vector quantity, which means it has direction.]

3) Every action in the virtual world has an equal and unidirectional reaction in the real world. (E-commerce is a simple example of the reciprocal nature of the internet.)

Depending on how well I have expressed myself here, it may be possible to understand that the Web is cognitively constructed but does abide, in some way, by the basic laws of celestial mechanics. One consequence of combining psychology and astronomy is the dichotomy between subjectivity and objectivity. In this sense, astronomy is a holistic study, which takes the total system of the universe as its subject. Arguably astronomy ‘acquaints man with his immediate surroundings as well as with what is going on in regions far removed.’ But it is important to understand that one man’s surroundings may differ from another man’s. One of the problems associated with astronomy is the problem of subjectivity: ‘one of the drawbacks of making astronomical observations by eye with or without the advantage of supplementary equipment is that they are subjective’. Astronomers try to get rid of the subject because they want to invoke laws to describe the observed phenomena. These laws must be generalized and be applicable to any subject, thus the subject is removed. For psychology, though, the subject is of paramount importance.

Next week I hope to further my explanation of the web as a cognitively constructed point mass which, funnily enough, shows some adherence to the laws of celestial mechanics. One prevailing idea will be whether I can deduce an Information Spectrum, similar in idea to the electromagnetic spectrum. After all, astronomers constructed that spectrum by observing radiation; equally, can web scientists construct a spectrum by observing information (sorry, I hate to use such a vague word)?

EWII: Philosophy and Law

This week I read 2 books: Greg Lastowka, Virtual Justice: The New Laws of Online Worlds, and David Berry, Copy, Rip, Burn: The Politics of Copyleft and Open Source.

Greg Lastowka, Virtual Justice: The New Laws of Online Worlds

This is an absolutely terrific book that I would recommend to anybody. I am particularly interested in it because it speaks to the law aspect of the internet, which I am working on for this module. Lastowka is a young hot-shot professor of law at Rutgers University. He is a very good writer and he spends most of his time looking at real-life or imaginary case studies and then discussing the implications/interpretations from a legal, jurisprudential or moral point of view. I’ve never studied law before, but from this book I get a real sense that much of law in general, and new areas of legislative law in particular, works mostly by precedent. By this I mean that if there’s no law about this or that thing, then it basically hangs on what some judge has to say about it. And judges are human: the judge might be in a bad mood one day and decide that someone broke the law, even if there are no strictly codified grounds to ratify this. Some of Lastowka’s real-life case studies are fascinating. For example, he talks about the Habbo Hotel hacker, the scam artist who made thousands of dollars in real money by “stealing” virtual furniture and other online second life belongings on the site EVE online.

David Berry, Copy, Rip, Burn: The Politics of Copyleft and Open Source

I haven’t quite finished this yet, but it is a very good book for general reading which I recommend. It’s not too old (2008), and impressively researched. Berry is a lecturer at Swansea in Media and Communication. The book is about what he calls FLOSS: free/libre and open source software. He spends a lot of time talking about the difference between the free software movement and the open source movement. To be honest, I am not entirely convinced that these are different in the definitive way that he says. I get the impression that most people within these movements have in the past considered them to be separate, but that does not necessarily mean it has always been the case or that it is the case now. Like most historical arguments, it is probably subject to a little bit of doubt. In any case, it seems that the main difference between open source people and free software people is a question of philosophy: is it an issue of moral principle or is it an issue of pragmatism? Free software people like Richard Stallman, the prophet who founded it, think that there is a moral high ground at stake. Open source people like Linus Torvalds, who created the early versions of Linux, are more pragmatic. Linus believes that code should be freely available because it results in better programs. But programmers need to live: you can’t expect them to work for free or else we won’t get anywhere. To date, Linus has made a lot of money through working on projects related to Linux, even though it is still free. There are many large companies, including IBM, that have worked with Linus on or around Linux. I’m not sure to what degree these differences are really important. Stallman thinks they are very important. However, all these FLOSS ideas have a lot in common: a focus on the collective good. A famous paper by John Perry Barlow (1996), A Declaration of the Independence of Cyberspace, to some extent captures the emphasis of both sides, though Berry thinks it is closer to the free software position.

Hacktivism: Information (over)governance and state protection

Although my initial plan was to research solely e-mail hacking from a political perspective, and it appears that there are a number of different cases on politics and e-mail hacking (Mitt Romney and Neil Stock, for example), I believe it would be beneficial to open the subject out slightly to communications hacking (again). This allows me to review more cases across a wider range of hacking channels and to head towards an analysis of the political intent behind communications hacking, rather than focusing on the specifics of the e-mail hacking cases.

After continuing my research, it appears that there is a political sector dedicated to the hacking of communications. This is called hacktivism. The word is a portmanteau of two words: hack, “the process of reconfiguring or reprogramming a system to do things that its inventor never intended” (BBC News, 2010), and activist, an individual who is involved with achieving political goals. Hacktivism appears to be a means for political personnel to seek retribution, using computers and/or technological devices as a vehicle to perform such actions. This implies that hacktivism is an action surrounded by negative connotations of ‘damaging the opposition’.

However, a BBC News story entitled Activists turn ‘hacktivists’ on the web (BBC News, 2010) notes how a hacktivist body such as the Chaos Computer Club is not intent on causing chaos amongst its opposition or in society. Rather, it is an organisation built for defining and analysing ‘holes’ in security systems on the web, in order to maintain an optimal level of security for the systems involved with government, national security and emergency services. This helps to protect the identity and reputation of the state, and its political counterparts, but potentially biases the system against democracy and an ideology of the freedom of information.

Other hacktivist groups such as Anonymous and the Electronic Frontier Foundation (EFF) have an alternative ideology for which they ‘fight’. These groups push for the freedom of information against the ‘corporate blockades’ (Ball, 2012) on the web, which are said to blind the population of any given nation to the truths about its political leaders and their encompassing political parties. It is believed that, as we are going from a restriction of information to an abundance of it, society should reserve the right to view information concerning those that govern our lives.

Although it is a valid point to seek truths about our ‘leaders’, one may argue that the freedom of information is a subject that would be fraught with corruption and abuse, especially in a political sense. Information that is to be available to all citizens, including those who oppose our political systems and ideologies, may be vulnerable to attacks.

In light of this, I will set out a debate around hacktivism in my next blog post, on political parties and communications hacking.

Slowed Progress But Marxism

This week’s reading was somewhat disappointing. I had intended to get through more content; however, I found that much of what I was reading required a much deeper level of analysis to understand. For this reason, rather than exploring social networks or globalisation, I have focused more heavily on sociology and, in particular, on one of the most famous thinkers to have influenced the field: Karl Marx.

In both politics and sociology, Marx is held in very high regard. Regardless of how authors feel about the validity of Marx’s views, it is quite clear that most, if not all, commentators extend a degree of respect to the man, regarding him as a thought leader both in his own time and beyond. Whilst I would have preferred a broader week of reading, given that Marx and Marxist theory exist so prominently in both sociology and politics, I did not begrudge the topic the extra time I afforded it. I consulted two texts in particular.

Marx, Marginalism and Modern Society

This book offered a good introduction to Marx as a whole, in terms of his contribution to both politics and sociology. The thrust of the argument presented in this text is that Marx’s key contribution was his critique of political economy. The author presents the case that whilst this was recognised to varying extents in politics and economics, sociological perspectives took longer to entwine themselves with Marxist viewpoints.

One reason for the eventual large-scale adoption of Marxist theory within sociology is suggested to pertain to Marx’s views on materialism, in particular Historical Materialism. This perspective argued that whilst history might previously have separated notions of personhood from thingness, history rather required a deeper account of interactions, for example by dissecting the “things” called institutions into the individual “people” they were made of. This view is of significant importance for sociology, allowing far deeper consideration of the people within what were previously amorphous entities. Many comparisons can be drawn between these notions and social networking, not only because of the changing relationships such sites have had with their user bases over time, but also at the level of individual users, with the structural changes from simple lists of activity to Facebook features like “Timeline”. These can most certainly be argued to personalise “events”, allowing them to become related much more closely to the individual they are associated with.

Classical Sociology

This book provided a logical next step in explaining the development of Marxist sociological theory. In particular, it dealt with the ways in which Marxist theory has been modified or adjusted in what has been argued to be a necessary process of modernisation.

This notion of modernisation does not reflect technological or social advancement explicitly, but rather the sociological ideas about “modernity”. As before, this is essentially the view that different cultures and societies have modernised differently, leading to “multiple modernities”. The author highlights that sociologists like Anthony Giddens have argued that modernity changes the social structure and as such requires a post-modern sociology. This means that theories which account for such changes, that is, post-modern theories, are sometimes argued to be the only theories relevant to assessments of the modern world. This text’s author, however, believes that Marxism exploits a loophole in this argument by way of the additional work done by Max Weber.

The author argues that Max Weber’s neo-Marxism, in particular the addition of Nietzsche’s perspectivism, is the key to incorporating Marxist theory into discussions of “modern” society. Perspectivism is the theory that the acquisition of knowledge is inevitably limited by the perspective from which it is viewed. This is in fact a common view within sociology and has significant relevance to the nature of accounts of social networks. Does a person’s experience of MySpace or Facebook vary if they are a “user”, a “business”, a “celebrity”, a “moderator”, a “site owner” and so on? When considered alongside political perspectives, this is of course still deeply relevant. A person’s position or perspective, the role that position or perspective implies, and the power (or lack of power) that it entails all contribute to the nature of the interactions they will experience.

For my reading this coming week, it is my intention to focus on texts relating to social media, in particular The Network Society.