Southampton Goggles

The inaugural activity for the newly formed Web and Internet Science (WAIS) research group, the WAISFest, was a 3-day long hybrid between a hackday-style event and an unconference. During the fest, Jon and Sina ran an introductory tutorial on Map-Reduce processing with Hadoop, and together with some extra person-power, our multimedia team hacked together a prototype system called “Southampton Goggles”, which is the subject of this post.

The idea behind Southampton Goggles was to develop a vision-based recognition system a bit like Google Goggles, but with data specific to the Southampton University campus. Specifically, we wanted to investigate whether it would be possible to create a system that could work with images from the Southampton DejaView life-logging device and provide near real-time feedback to the Android handset.

We have a lot of experience in building mobile object recognition systems which we were able to leverage; in particular, we pioneered Goggles-style recognition for art images (2004) and maps (2006). With our newly released ImageTerrier software, making such a system is easier than ever.

Before the fest started we had already created a small corpus of annotated images of the main Southampton campus by crawling geo-tagged images from Flickr. These images were indexed using ImageTerrier, and Heather had spent some time developing a semantic tag-recommendation system that would associate suggested URIs from Southampton open data with query images based on the geo-location and Flickr tags of the top-matching images. During the fest we wanted to explore how we could create a much denser campus dataset by developing a multi-camera capture system (like the Google Streetview camera system, but much, much smaller) that could be carried around the campus, and even go inside buildings. We also set out to develop an annotation tool that would allow the captured images to be annotated through crowd-sourcing.

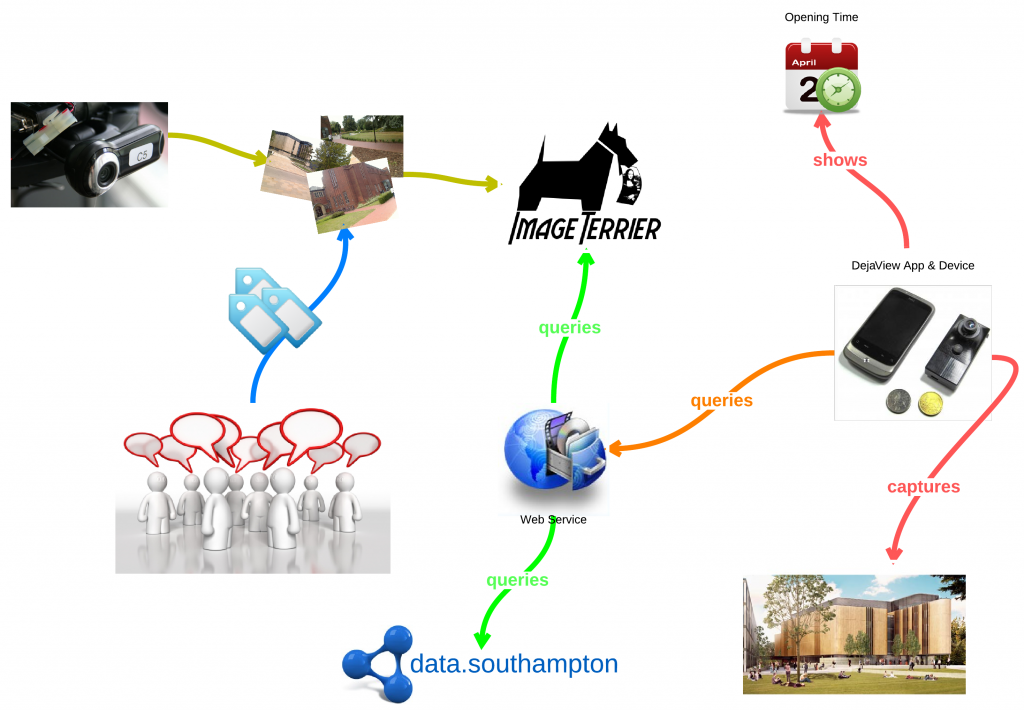

The workflow of all the planned fest tasks is illustrated in the accompanying diagram. Briefly, the diagram shows how the capture solution will be used to collect images, which are then annotated with linked-data through crowd-sourcing. An ImageTerrier index is built with these images to augment the existing Flickr set, and a web-service is deployed that allows image queries to return URIs to relevant objects on the campus. A mobile phone or DejaView device can query the web-service with images it captures, and with the response URIs the device can display relevant information about the query image.

In the remainder of the post we’ll describe technical details of each of the components of the Southampton Goggles system. At the end you’ll find a copy of the presentation we gave to the WAIS group at the end of the fest.

Data capture

In order to capture lots of image data from which to build an index, we developed our own Streetview-style camera system with inspiration from this IEEE Spectrum article. Our camera system, dubbed “CampusView”, consisted of 6 high resolution webcams, a GPS device and a digital compass. The software to drive the capture rig was written in Java using our OpenIMAJ libraries during the course of an afternoon! More details of the hardware construction and software are given below.

Hardware

Our hardware for building the camera rig consisted of the following items:

- 6 x Logitech Webcam Pro 9000 for Business

- 1 x GlobalSat BU-353 USB GPS receiver

- 1 x OceanServer OS5000 Digital Compass (we used the serial-only version)

- 1 x Keyspan USA-19HS USB-Serial converter for the compass

- 2 x USB 2.0 Hubs with cables

- 1 x 278mm dia plastic disk to mount the cameras (we used the end of an old cable reel)

- 1 x 2m ranging pole (we borrowed a nice carbon fibre one) to mount the disk

- 1 x piece of metal to mount the disk to the pole (we cut up an old piece of rack rail; channel section about 150mm long, 40mm wide, 6mm deep)

- 2 x M5 nuts and bolts to fix the metal channel section to the disk

- Assorted cable ties to hold everything together.

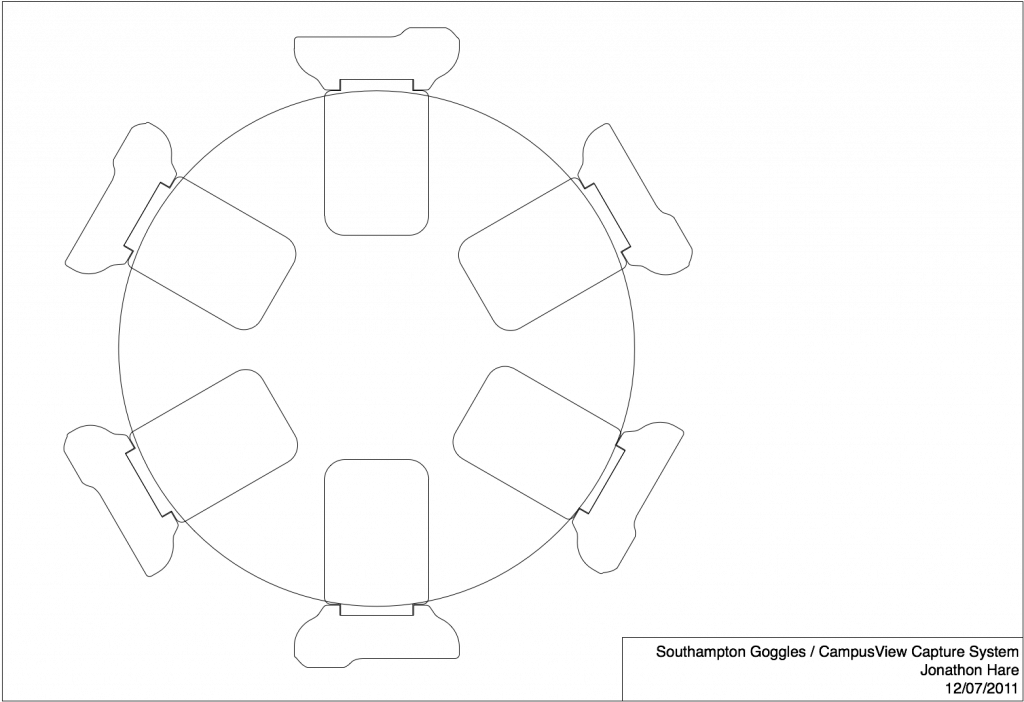

The plan for mounting the cameras is shown here. It should be noted that with our mounting arrangement there is very little overlap in the images captured by the rig; an extra camera or two would be useful if we wanted to stitch the images into a panorama. For the purposes of building an index, this isn’t so much of a problem.

In total we spent around £300 on the cameras, hubs and GPS. The ranging pole was borrowed (around £200 to buy), and the digital compass had previously been used for an undergraduate student project (US$299 to buy). Here are some pictures that show how the whole rig is assembled.

In addition to the camera rig on the pole, we also made a laptop harness by cutting up an old conference bag (this one was from ICME 2006 in Toronto!) and making some additions with velcro to secure the laptop.

With the current arrangement, two people are required to operate the rig; one to carry the cameras, and another to carry/operate the laptop.

Software

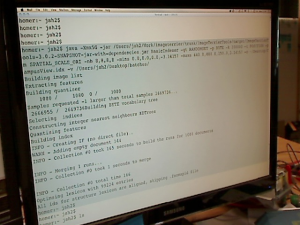

As mentioned previously, the software to control the capture rig was written in Java using our OpenIMAJ libraries. Dave wrote a nice serial port wrapper library and GPS interface library (works with all GPS units using the standard NMEA protocol). Jon and Dave wrote an interface library for the digital compass which utilises the serial library. All three of these libraries have been added to the OpenIMAJ source, under the hardware directory.
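To give a flavour of what a GPS interface has to do with NMEA data, here is a minimal sketch in plain Java. It is not the OpenIMAJ GPS library; the class and method names (`NmeaSketch`, `checksumOk`, `toDecimalDegrees`, `parseGga`) are made up for illustration. It checks the sentence checksum (the XOR of every character between `$` and `*`) and converts the `ddmm.mmmm` latitude and `dddmm.mmmm` longitude fields of a GGA sentence into signed decimal degrees.

```java
/** Illustrative NMEA 0183 helpers; not part of OpenIMAJ. */
class NmeaSketch {

    /** True if the two hex digits after '*' equal the XOR of all characters between '$' and '*'. */
    static boolean checksumOk(String sentence) {
        int star = sentence.lastIndexOf('*');
        if (!sentence.startsWith("$") || star < 0 || star + 3 > sentence.length()) {
            return false;
        }
        int xor = 0;
        for (int i = 1; i < star; i++) {
            xor ^= sentence.charAt(i);
        }
        try {
            return xor == Integer.parseInt(sentence.substring(star + 1, star + 3), 16);
        } catch (NumberFormatException e) {
            return false;
        }
    }

    /** Converts "ddmm.mmmm" or "dddmm.mmmm" plus a hemisphere letter into signed decimal degrees. */
    static double toDecimalDegrees(String value, String hemisphere) {
        int dot = value.indexOf('.');
        // Minutes always occupy the two digits immediately before the decimal point.
        int degreeDigits = (dot < 0 ? value.length() : dot) - 2;
        double degrees = Double.parseDouble(value.substring(0, degreeDigits));
        double minutes = Double.parseDouble(value.substring(degreeDigits));
        double result = degrees + minutes / 60.0;
        return (hemisphere.equals("S") || hemisphere.equals("W")) ? -result : result;
    }

    /** Returns {latitude, longitude} from a GGA sentence, or null if it reports no fix. */
    static double[] parseGga(String sentence) {
        int star = sentence.lastIndexOf('*');
        String body = sentence.substring(1, star < 0 ? sentence.length() : star);
        String[] f = body.split(",", -1); // keep empty fields so indices stay aligned
        if (f.length < 7 || !f[0].endsWith("GGA") || f[6].isEmpty() || f[6].equals("0")) {
            return null;
        }
        return new double[] { toDecimalDegrees(f[2], f[3]), toDecimalDegrees(f[4], f[5]) };
    }

    public static void main(String[] args) {
        String gga = "$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47";
        double[] position = parseGga(gga);
        System.out.println("checksum ok: " + checksumOk(gga));
        System.out.println("lat=" + position[0] + " lon=" + position[1]);
    }
}
```

A real reader would also need to pull complete lines off the serial port and cope with sentence types other than GGA; the sketch only covers the parsing step.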

In order to operate multiple webcams at high resolution, we had to make a small tweak to the OpenIMAJ core-video-capture library to allow frame-rate hints to be passed to the native layer (see this post for information about the video capture library). On a MacBook Pro laptop (three cameras per USB bus) we were able to capture from all six cameras at 320×240 resolution at a rate of 30 FPS; however, to capture at a higher resolution the frame-rate needed to be dropped considerably. This is simply a consequence of the limited bandwidth of the USB bus. The frame-rate hint additions to the library are currently in the OpenIMAJ SVN trunk, and will be part of the next release.
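A back-of-the-envelope calculation shows why the frame-rate has to drop. The sketch below assumes the cameras deliver uncompressed video at 2 bytes per pixel (for example YUY2) and compares the resulting data rate against the nominal 480 Mbit/s signalling rate of USB 2.0. Both are assumptions for illustration: the webcams may negotiate a compressed format, and real-world USB throughput is well below the nominal figure.

```java
/** Rough USB bandwidth arithmetic for several webcams sharing one bus (illustrative only). */
class UsbBandwidthSketch {

    /** Nominal USB 2.0 high-speed signalling rate: 480 Mbit/s, i.e. 60 MB/s. */
    static final double USB2_NOMINAL_BYTES_PER_SEC = 480e6 / 8;

    /** Raw data rate for a number of cameras streaming uncompressed frames. */
    static double bytesPerSecond(int width, int height, int bytesPerPixel, double fps, int cameras) {
        return (double) width * height * bytesPerPixel * fps * cameras;
    }

    /** Upper bound on the frame-rate each camera can sustain for a given bus capacity. */
    static double maxFps(int width, int height, int bytesPerPixel, int cameras, double busBytesPerSec) {
        return busBytesPerSec / ((double) width * height * bytesPerPixel * cameras);
    }

    public static void main(String[] args) {
        int camerasPerBus = 3;   // as on our laptop: three cameras per USB bus
        int bytesPerPixel = 2;   // assumption: uncompressed YUY2
        int[][] sizes = { { 320, 240 }, { 640, 480 }, { 1600, 1200 } };
        for (int[] s : sizes) {
            double rate = bytesPerSecond(s[0], s[1], bytesPerPixel, 30, camerasPerBus);
            double limit = maxFps(s[0], s[1], bytesPerPixel, camerasPerBus, USB2_NOMINAL_BYTES_PER_SEC);
            System.out.printf("%dx%d: %.1f MB/s at 30 FPS, at most %.1f FPS per camera%n",
                    s[0], s[1], rate / 1e6, limit);
        }
    }
}
```

Under these assumptions, three cameras at 320×240 and 30 FPS need roughly 13.8 MB/s, which fits comfortably, whereas the same three cameras at 640×480 would need roughly 55.3 MB/s, which is already close to the nominal 60 MB/s ceiling and beyond what a bus can deliver in practice.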

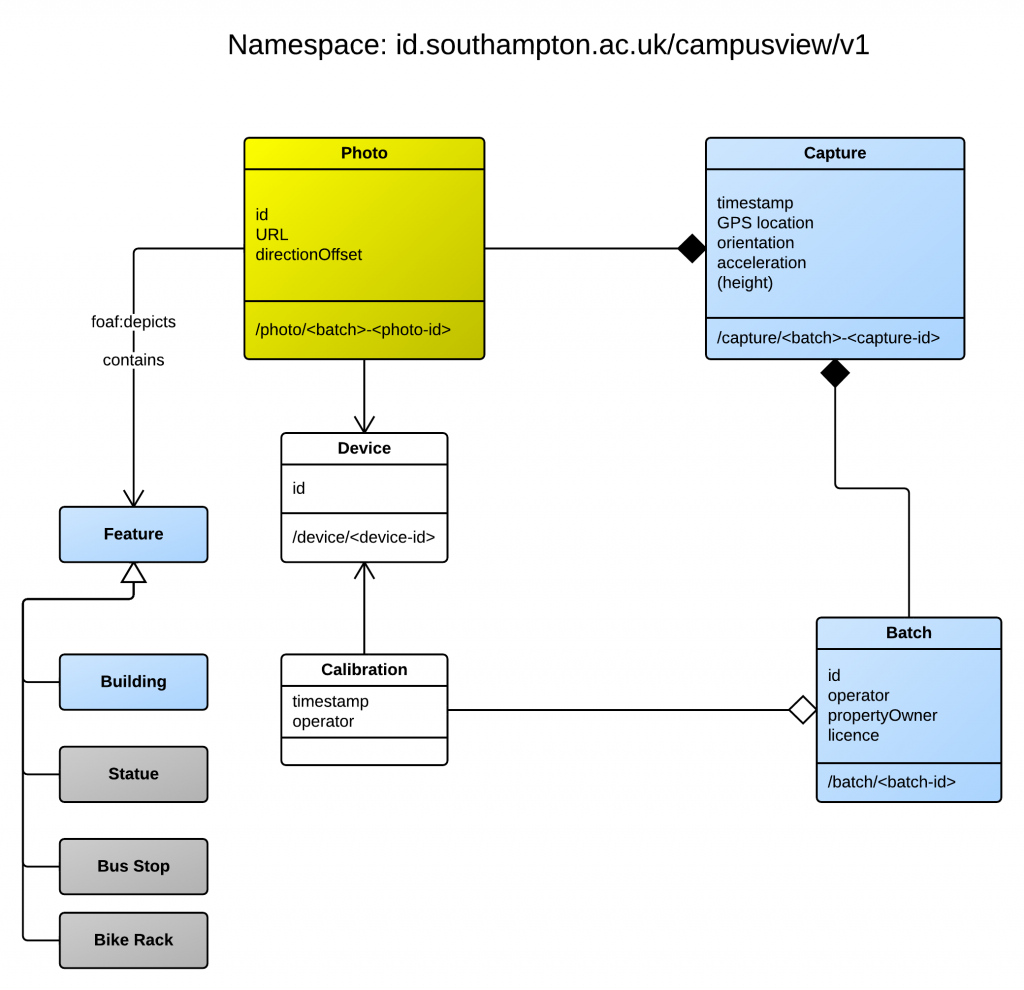

To build the “CampusView” software application itself, we first undertook some brainstorming to determine what we needed the application to record and in what format it should be. The result of the brainstorming was this diagram that defines both what the CampusView tool needs to capture, as well as the model for the annotation software described later.

With respect to the CampusView application, the basic model has the following parts:

- Photo – this is an individual image captured from a single camera. We just save a PNG file to a directory (identified by a capture identifier), numbered with the camera identifier.

- Capture – this is a set of six images (one per camera) all taken at the same moment. The images are saved to a directory named with the capture identifier (numeric, starting from 0 and incremented for each capture). The metadata (compass, GPS, etc.) is written as a line to a CSV file which holds all the captures in the current batch.

- Batch – a set of captures grabbed during a continuous time period. The application creates a directory for a batch and stores all the captures within it. A CSV file for all batches is also created that holds the metadata for each batch on a single line.
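The on-disk layout described above can be sketched in a few lines of Java. This is not the CampusView source: the class name `BatchWriterSketch`, the file name `captures.csv` and the CSV column order (capture id, timestamp, latitude, longitude, heading) are all assumptions chosen for illustration.

```java
import java.awt.image.BufferedImage;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;
import java.util.Locale;
import javax.imageio.ImageIO;

/** Writes captures as <batch>/<captureId>/<cameraId>.png plus one CSV line per capture. */
class BatchWriterSketch {
    private final Path batchDir;
    private final Path capturesCsv;
    private int nextCaptureId = 0; // capture identifiers start at 0 and increment

    BatchWriterSketch(Path root, String batchName) throws IOException {
        batchDir = Files.createDirectories(root.resolve(batchName));
        capturesCsv = batchDir.resolve("captures.csv"); // hypothetical file name
    }

    /** Saves one photo per camera and appends the capture metadata; returns the capture id. */
    int saveCapture(List<BufferedImage> photos, double lat, double lon,
                    double headingDegrees, long timestampMillis) throws IOException {
        int id = nextCaptureId++;
        Path captureDir = Files.createDirectories(batchDir.resolve(Integer.toString(id)));
        for (int camera = 0; camera < photos.size(); camera++) {
            // Each photo is numbered with the identifier of the camera that took it.
            ImageIO.write(photos.get(camera), "png", captureDir.resolve(camera + ".png").toFile());
        }
        String line = String.format(Locale.ROOT, "%d,%d,%.6f,%.6f,%.1f%n",
                id, timestampMillis, lat, lon, headingDegrees);
        Files.write(capturesCsv, line.getBytes(StandardCharsets.UTF_8),
                StandardOpenOption.CREATE, StandardOpenOption.APPEND);
        return id;
    }
}
```

Appending one line per capture keeps the metadata file valid even if the application stops part-way through a batch, which matters when cables can be pulled out mid-walk.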

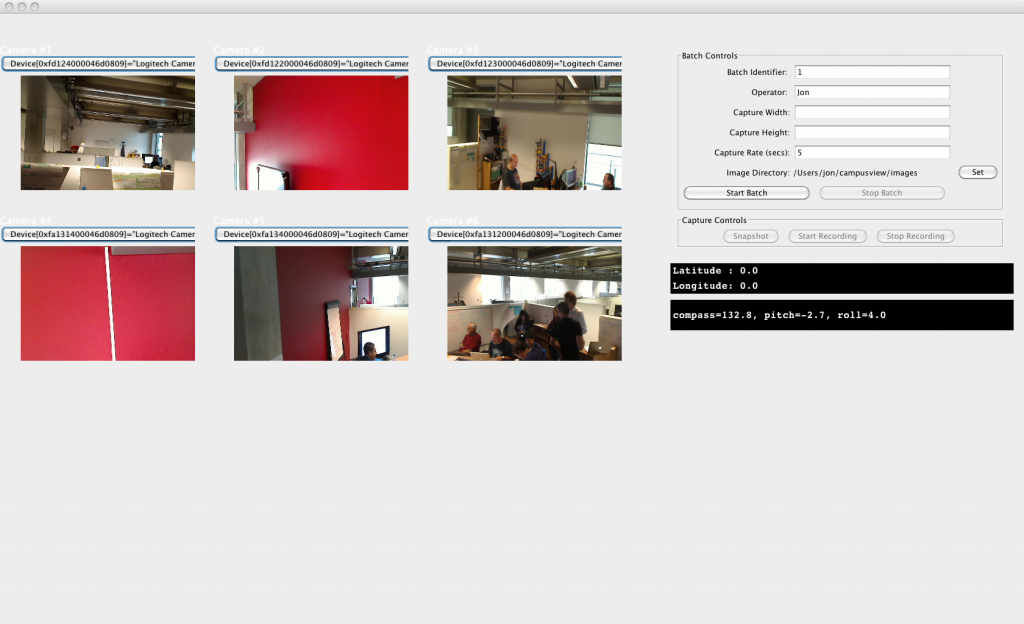

The following image shows a screenshot of the CampusView application. There are controls for configuring the cameras (our cameras were numbered on the rig in a clockwise pattern; in the software the cameras had to be configured in the same order), controls for configuring batches, and a set of buttons for either manually taking a snapshot with all the cameras, or running in an automated mode where captures are taken every 5 seconds.
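An automated mode like this can be built on a standard Java scheduler. The sketch below is one possible approach rather than the CampusView implementation; `AutoCaptureSketch` and the `captureAll` callback are hypothetical names. The callback stands in for "grab a frame from every camera and save the capture".

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

/** Runs a capture callback at a fixed interval until stopped (illustrative only). */
class AutoCaptureSketch {
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private ScheduledFuture<?> task;

    /** Starts periodic captures; does nothing if already running. */
    synchronized void start(Runnable captureAll, long period, TimeUnit unit) {
        if (task != null) {
            return;
        }
        task = scheduler.scheduleAtFixedRate(() -> {
            try {
                captureAll.run();
            } catch (RuntimeException e) {
                // An uncaught exception would silently cancel all future runs, so log and carry on.
                System.err.println("capture failed: " + e);
            }
        }, 0, period, unit);
    }

    /** Stops periodic captures, letting any capture in progress finish. */
    synchronized void stop() {
        if (task != null) {
            task.cancel(false);
            task = null;
        }
    }

    /** Stops capturing and releases the scheduler thread. */
    void shutdown() {
        stop();
        scheduler.shutdown();
    }
}
```

With this sketch, the automatic mode would be started with something like `autoCapture.start(rig::captureAll, 5, TimeUnit.SECONDS)`, where `rig::captureAll` is again a placeholder, and the manual snapshot button would simply call the same callback once.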

If you’re interested in looking at the software, we’ve made the source available in the demos directory of the OpenIMAJ source. With the software written, we were able to go out and capture some sample images around the campus. Our testing of the device went remarkably well. We found that we were able to capture continuously (in automatic mode) whilst walking at a normal pace. The only real problems encountered were due to the height of the pole meaning that sometimes the camera rig was in a tree, and the occasional snagging and disconnection of the USB cables (due to the aforementioned trees, and also to the camera and laptop operators walking off in slightly different directions!) which caused the software to stop recording properly. Powering the whole rig from a single laptop did cause considerable battery drain; however, we estimate that we would still be able to operate the rig continuously for over an hour and a half without needing to stop and recharge.

Sample Data

Here are a couple of captures taken with the camera rig and the software. For these images, we limited the camera resolution to 640×480 pixels, although we could go to a much higher resolution.

Annotation Software

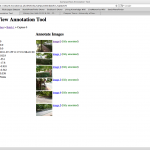

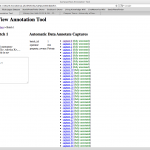

Some screenshots of the annotation software developed for the project are shown here. The annotation software imports the data recorded by the CampusView system and presents a web-based user interface that allows users to annotate each image, as well as view the metadata.

Within a couple of hours the team were able to annotate a total of 1080 images captured with CampusView. Each image was annotated with the information about which university buildings could be seen in the images. The annotation software allows the annotations to be exported as RDF, linked to the Southampton Open Linked Data service.
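As an illustration of what such an export might look like, the sketch below serialises "image shows building" annotations as N-Triples. The choice of the FOAF `depicts` property, the `example.org` image URIs and the form of the building URIs are assumptions for the example, not necessarily what the annotation tool emits.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Serialises image-to-building annotations as N-Triples (illustrative only). */
class AnnotationRdfSketch {
    // Assumption: foaf:depicts is used to link an image to the thing it shows.
    static final String FOAF_DEPICTS = "http://xmlns.com/foaf/0.1/depicts";

    /** Emits one triple per (image, building) pair, in the iteration order of the map. */
    static String toNTriples(Map<String, List<String>> buildingsByImage) {
        StringBuilder out = new StringBuilder();
        for (Map.Entry<String, List<String>> entry : buildingsByImage.entrySet()) {
            for (String building : entry.getValue()) {
                out.append('<').append(entry.getKey()).append("> <")
                   .append(FOAF_DEPICTS).append("> <")
                   .append(building).append("> .\n");
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, List<String>> annotations = new LinkedHashMap<>();
        // Hypothetical image and building URIs.
        annotations.put("http://example.org/campusview/batch-0/12/3.png",
                Arrays.asList("http://id.southampton.ac.uk/building/32",
                              "http://id.southampton.ac.uk/building/59"));
        System.out.print(toNTriples(annotations));
    }
}
```

Because the objects of the triples are building URIs from the open data service, a client that receives them can dereference each one to fetch further information about the building.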

The ImageTerrier index and web-service

Using ImageTerrier we indexed the images we captured using quantised difference-of-Gaussian SIFT features. This index was imported into an ImageTerrierWeb instance which exposes querying functionality over the index as a RESTful web service. ImageTerrierWeb is included with the ImageTerrier software.
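For readers unfamiliar with the term, "quantised" here means that each local feature descriptor is mapped to the nearest entry in a codebook of "visual words", so that an image can be indexed much like a text document. The sketch below shows that idea with a brute-force nearest-neighbour search. It does not use the ImageTerrier or OpenIMAJ APIs, the names are made up, and it uses tiny 2-dimensional vectors where real SIFT descriptors have 128 dimensions and the codebook would typically be learnt by clustering.

```java
/** Hard-assignment vector quantisation of descriptors into visual words (illustrative only). */
class VisualWordSketch {

    /** Index of the codebook entry with the smallest squared Euclidean distance to the descriptor. */
    static int nearestWord(float[] descriptor, float[][] codebook) {
        int best = -1;
        double bestDistance = Double.POSITIVE_INFINITY;
        for (int word = 0; word < codebook.length; word++) {
            double distance = 0;
            for (int i = 0; i < descriptor.length; i++) {
                double diff = descriptor[i] - codebook[word][i];
                distance += diff * diff;
            }
            if (distance < bestDistance) {
                bestDistance = distance;
                best = word;
            }
        }
        return best;
    }

    /** Counts how often each visual word occurs among an image's descriptors. */
    static int[] termFrequencies(float[][] descriptors, float[][] codebook) {
        int[] counts = new int[codebook.length];
        for (float[] descriptor : descriptors) {
            counts[nearestWord(descriptor, codebook)]++;
        }
        return counts;
    }

    public static void main(String[] args) {
        float[][] codebook = { { 0f, 0f }, { 10f, 10f } };
        float[][] descriptors = { { 1f, 1f }, { 9f, 9f }, { 8f, 11f } };
        int[] counts = termFrequencies(descriptors, codebook);
        System.out.println("word 0: " + counts[0] + ", word 1: " + counts[1]);
    }
}
```

The resulting word counts are what an inverted index stores per image, which is what makes text-retrieval machinery applicable to image search.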

Android/DejaView software

The existing DejaView Android application sends each image taken by the DejaView device to a simple gateway web-service. The gateway service then forwards the image to other services (for example a face recognition service), collates the responses and sends a single reply back to the device. We modified the gateway service to also call our ImageTerrierWeb instance.
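The forward-and-collate pattern used by the gateway can be sketched as follows. This is not the DejaView gateway code: `GatewaySketch` is a hypothetical class, and each back-end service is modelled as a plain function from image bytes to a response string, where a real gateway would make HTTP calls.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;

/** Forwards an image to several services in parallel and collates the replies (illustrative only). */
class GatewaySketch {
    private final Map<String, Function<byte[], String>> services = new LinkedHashMap<>();

    /** Registers a back-end service under a name used as the key in the combined reply. */
    void register(String name, Function<byte[], String> service) {
        services.put(name, service);
    }

    /** Calls every registered service concurrently and returns one combined reply. */
    Map<String, String> handle(byte[] image) {
        Map<String, CompletableFuture<String>> pending = new LinkedHashMap<>();
        for (Map.Entry<String, Function<byte[], String>> entry : services.entrySet()) {
            Function<byte[], String> service = entry.getValue();
            pending.put(entry.getKey(),
                    CompletableFuture.supplyAsync(() -> service.apply(image))
                            // A failing service should not prevent the others from replying.
                            .exceptionally(error -> "error: " + error.getMessage()));
        }
        Map<String, String> reply = new LinkedHashMap<>();
        for (Map.Entry<String, CompletableFuture<String>> entry : pending.entrySet()) {
            reply.put(entry.getKey(), entry.getValue().join());
        }
        return reply;
    }
}
```

In this arrangement, adding building recognition amounts to one more `register` call, and the device still receives a single reply per image.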

We then modified the DejaView Android application so that it would display a notification to the user about any buildings that were detected. If the user clicked the notification, they would be presented with information about the particular building.

Out and about testing

With all the software in place and data collected, we were finally able to test the whole concept. We’ll do a proper evaluation at some point; however, our limited testing demonstrated that everything was working as planned.

The Final Presentation

These are the slides that Jon presented to the WAIS group at the end of the fest to demonstrate what had been achieved by the group in a relatively short space of time (the fest itself lasted 3 days; Jon, Dave and Sina spent an extra couple of days beforehand making preparations and partially constructing the CampusView hardware).